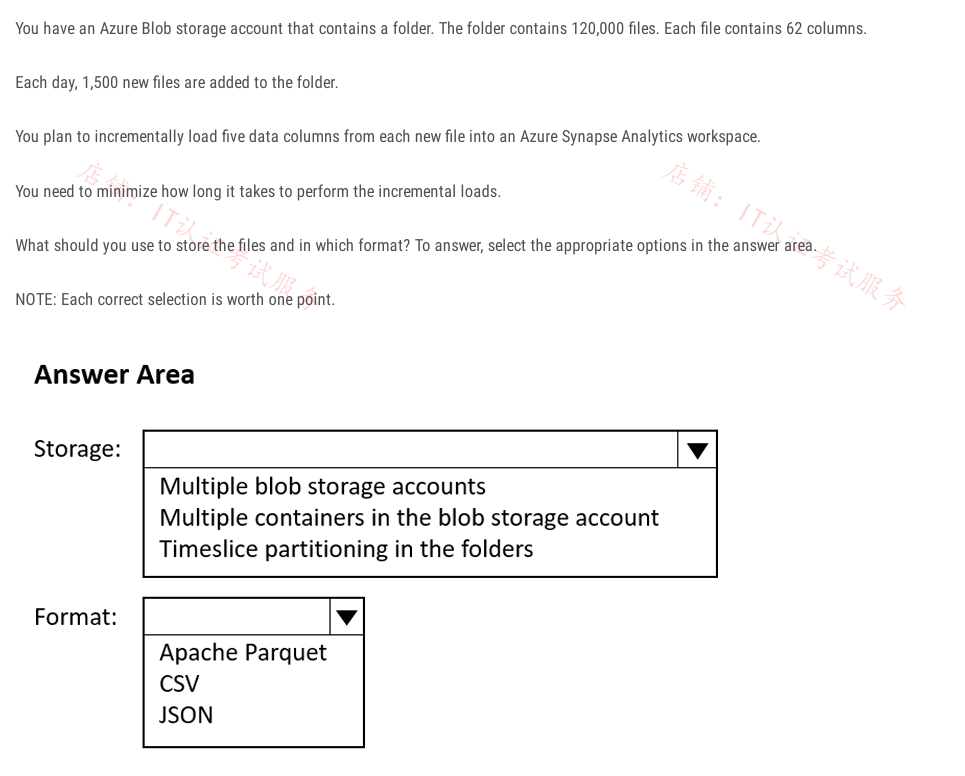

You have an Azure Blob storage account that contains a folder. The folder contains 120,000 files. Each file contains 62 columns. Each day, 1,500 new files are added to the folder. You plan to incrementally load five data columns from each new file into an Azure Synapse Analytics workspace. You need to minimize how long it takes to perform the incremental loads. What should you use to store the files and in which format? Select the appropriate options in the answer area.

Understand the Problem

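The question asks how to organize and format the files in Azure Blob storage so that incremental loads into Azure Synapse Analytics take as little time as possible. Two details drive the answer: only the new files (1,500 per day) need to be loaded, and only five of the 62 columns in each file are needed. It requires selecting the best storage method and file format from the given options.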

Answer

Timeslice partitioning in the folders; Apache Parquet.

The storage option should be 'Timeslice partitioning in the folders' and the file format should be 'Apache Parquet'.

More Information

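Timeslice partitioning organizes files into folders by time period (for example, one folder per day), so each incremental load can target only the folder for the new time slice instead of scanning the whole folder, which already holds 120,000 files, to find the 1,500 additions. Apache Parquet is a columnar format, so a load that needs five of the 62 columns can read just those columns rather than parsing every file in full.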
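To make the folder layout concrete, here is a minimal sketch of how an incremental load might work out which timeslice folders to read. It assumes a daily `yyyy/MM/dd` folder convention and a stored "last loaded" date; the function names and the `landing` base path are hypothetical, not part of the question or of any Azure API.

```python
from datetime import date, timedelta


def timeslice_prefix(base: str, day: date) -> str:
    """Return the folder prefix for one daily time slice (assumed yyyy/MM/dd layout)."""
    return f"{base.rstrip('/')}/{day:%Y/%m/%d}/"


def prefixes_to_load(base: str, last_loaded: date, today: date) -> list[str]:
    """List the daily prefixes after the last loaded date, up to and including today."""
    days = (today - last_loaded).days
    return [
        timeslice_prefix(base, last_loaded + timedelta(days=n))
        for n in range(1, days + 1)
    ]


if __name__ == "__main__":
    # Hypothetical base path and dates, for illustration only.
    for prefix in prefixes_to_load("landing", date(2024, 2, 28), date(2024, 3, 1)):
        print(prefix)
```

With this kind of layout, the load only has to list the files under the returned prefixes, so the cost of finding new files depends on the number of new slices rather than on the total number of files already stored.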

Tips

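Avoid using JSON or CSV if performance and loading time are critical. Both are row-oriented text formats, so every record has to be parsed in full even when only a few columns are needed, which makes them less efficient for selective loads from large datasets.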
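The difference between row-oriented and column-oriented layouts can be illustrated with a toy model. The sketch below is not Parquet's actual on-disk format and does not measure real I/O; it only counts how many field values are touched when five of 62 columns are wanted. The column names and the row count are made up for the illustration.

```python
import csv
import io

COLUMNS = [f"c{i}" for i in range(62)]          # 62 columns, as in the question
WANTED = ["c0", "c7", "c19", "c40", "c61"]      # five hypothetical columns to load


def make_csv(rows: int) -> str:
    """Build an in-memory CSV with a header and `rows` data records."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(COLUMNS)
    for r in range(rows):
        writer.writerow([f"{r}-{i}" for i in range(len(COLUMNS))])
    return buf.getvalue()


def fields_touched_row_layout(text: str) -> int:
    """Row layout: each record is parsed in full before the wanted fields are picked."""
    touched = 0
    for record in csv.DictReader(io.StringIO(text)):
        touched += len(record)                   # all 62 fields were parsed
        _ = [record[name] for name in WANTED]    # only five are kept
    return touched


def to_column_layout(text: str) -> dict[str, list[str]]:
    """Pivot the same data into one list of values per column."""
    columns: dict[str, list[str]] = {name: [] for name in COLUMNS}
    for record in csv.DictReader(io.StringIO(text)):
        for name, value in record.items():
            columns[name].append(value)
    return columns


def fields_touched_column_layout(columns: dict[str, list[str]]) -> int:
    """Column layout: only the wanted columns are read at all."""
    return sum(len(columns[name]) for name in WANTED)


if __name__ == "__main__":
    text = make_csv(rows=10)
    print(fields_touched_row_layout(text))                       # 10 rows x 62 columns
    print(fields_touched_column_layout(to_column_layout(text)))  # 10 rows x 5 columns
```

In this toy model the column layout touches 5/62 of the values, roughly 8%. Actual savings with Parquet depend on factors the model ignores, such as compression and column sizes.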

Sources

- Microsoft DP-203 Exam Actual Questions (P. 22) - ExamTopics - examtopics.com
