Questions and Answers

What is the main difference between classification and clustering?

- Classification and clustering are the same processes applied in different contexts.

- Classification deals with unknown categories while clustering deals with known categories.

- Classification is a type of unsupervised learning while clustering is supervised learning.

- Classification involves identifying known categories, whereas clustering categorizes data into unknown groups. (correct)

What type of learning does classification utilize?

- Supervised learning (correct)

- Reinforcement learning

- Semi-supervised learning

- Unsupervised learning

What is a key purpose of clustering in data analysis?

- To predict a specific variable based on others.

- To group similar data points together based on a similarity measure. (correct)

- To create a model for known attributes.

- To learn dependency rules between items.

Which of the following describes regression analysis?

In the context of linear classifiers, what does the function f(x,w,b) represent?

What does the formula for $X_s$ represent in the context of performance metrics?

In k-folds cross-validation, how many times is the training process repeated?

Which method involves leaving out one sample for testing while training on all others?

What is the purpose of calculating error probability in cross-validation methods?

What is the significance of using k=1 in leave-one-out cross-validation?

What is the primary rationale for using ensemble learning?

In the k-means algorithm, what step follows the assignment of objects to their nearest cluster centers?

What characteristic defines partitional clustering algorithms?

What defines the voting mechanism in ensemble learning?

Which of the following is NOT a type of clustering algorithm mentioned?

What is the class assigned when b is greater than 70 and w x + b50 is true?

According to the given conditions, what class is assigned if a is 45 and c is 76?

In the KNN Regression example, which age corresponds to the highest house price?

What is the formula for calculating the distance D in the KNN Regression?

If the age is standardized to 0.375 and the house price index is 256, what is the associated distance value?

In the KNN Regression, if k=1, how is the house price for the query point determined?

What can be concluded about the class assigned to an individual with a = 66, b = 59, and c = 76?

Which of the following distances corresponds to an age of 52?

What is the primary distance metric used in K-means clustering?

What is the time complexity of the K-means clustering algorithm?

How many partitions must K be in the K-means clustering algorithm?

In the objective function of K-means, what does d(xj, zi) represent?

What does the variable wij signify in the K-means objective function?

What will happen if you select k equal to n in K-means clustering?

Which of the following is a weakness of the K-means clustering method?

At which step do the cluster centers get updated in the K-means algorithm?

Why is it important to use the Euclidean distance in K-means clustering?

In which cluster assignment step do you expect the algorithm to converge?

Flashcards

Classification

A pattern recognition task where the goal is to find a model that predicts the value of a target attribute (the "class") based on other attributes in a dataset.

Clustering

A pattern recognition task where the goal is to group data points into clusters based on their similarity. Data points within the same cluster are more similar to each other than data points from different clusters.

Linear Classifier

A classification technique that uses a straight line (or a hyperplane in higher dimensions) to separate data points into different classes.

Supervised Classification

Unsupervised Classification

Root Mean Square Error (RMSE)

Relative Absolute Error (RAE)

Root Relative Squared Error (RRSE)

K-folds Cross-validation

Leave-one-out Method

K-Nearest Neighbors (KNN) Classification

K-Nearest Neighbors (KNN) Regression

Distance Metric

Standardized Distance

K Value

Target Variable

Features

Ensemble Learning

Diversity in Ensemble Learning

Voting in Ensemble Learning

Hierarchical Clustering

Partitional Clustering

K-means Clustering: Step 1

K-means Clustering: Step 2

K-means Clustering: Step 3

Objective Function

K-means Clustering: Step 4

K-means Clustering: Step 5

K-means Efficiency

Euclidean Distance

K-means Applications

Study Notes

Data Science Tools and Software

- This is a presentation title slide, and likely part of a larger data science course.

- It is about classification and regression tools in data science.

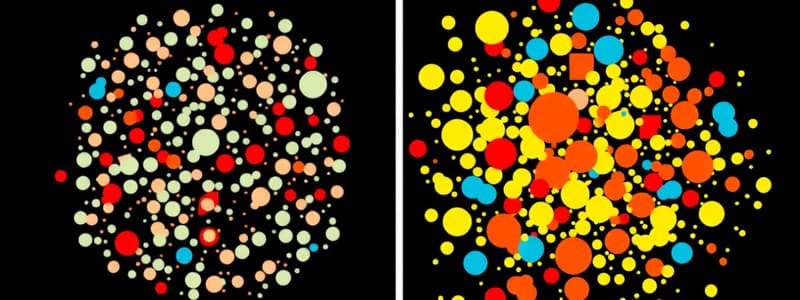

Classification vs Clustering

- Classification involves identifying known categories, such as recognizing patterns in data.

- Clustering, by contrast, works with unknown categories: it is an unsupervised learning task, whereas classification is supervised.

Pattern Recognition Tasks

- The first task, classification, requires finding a model in a provided dataset that predicts a class attribute from the other attributes, so that new data points can be categorized.

- Clustering groups data points based on similarity to other data points. Data points within a cluster are similar, while points in separate clusters are dissimilar.

- Association rule discovery is another related task: it learns dependency rules that predict which items tend to occur together.

Pattern Recognition Applications

- This section details applications of pattern recognition in various domains, such as document image analysis, optical character recognition, document classification, and internet search, among others.

Linear Classifiers

- Linear classifiers separate data points with a linear decision boundary w · x + b = 0, where the weight vector w and the bias b are learned parameters.

- The classifier computes f(x, w, b) = sign(w · x + b); the sign tells which side of the boundary each point falls on (+ or -).

- The learner must find the values of w and b that best separate the classes.

- The margin is the width by which the boundary can be widened before it hits a data point.
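A minimal sketch of the decision rule f(x, w, b) = sign(w · x + b) in plain Python. The function names and the weights below are hypothetical, and assigning points that lie exactly on the boundary to +1 is an arbitrary convention chosen here. The second function gives a point's perpendicular distance to the boundary; the nearest points are what limit how wide the margin can grow.

```python
import math


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def linear_classify(x, w, b):
    """Return +1 or -1 depending on the side of w . x + b = 0 that x falls on."""
    return 1 if dot(w, x) + b >= 0 else -1


def distance_to_boundary(x, w, b):
    """Perpendicular distance from x to the line w . x + b = 0."""
    return abs(dot(w, x) + b) / math.sqrt(dot(w, w))


# Hypothetical learned parameters for a 2-D problem.
w, b = [1.0, -1.0], 0.5
print(linear_classify([2.0, 1.0], w, b))  # 2 - 1 + 0.5 = 1.5  -> 1
print(linear_classify([0.0, 2.0], w, b))  # 0 - 2 + 0.5 = -1.5 -> -1
print(distance_to_boundary([2.0, 1.0], w, b))
```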

Maximum Margin

- Maximizing the margin is the central idea of Support Vector Machines (SVMs): the separating line is positioned using only the points closest to it, which are the hardest to separate and are called support vectors.

- Other points don't affect the separating line.

How to do multi-class classification with SVM

- The one-to-rest (one-vs-rest) approach trains a separate SVM for each class against all remaining classes; the class whose classifier gives the highest score is the prediction.

- The one-to-one (one-vs-one) approach trains a classifier for each pair of classes, each forming its own decision boundary; the final prediction is typically made by majority vote across these pairwise classifiers.
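The two decision rules can be sketched as follows. This assumes the SVMs are already trained: the per-class scores (one-to-rest) and the pairwise winners (one-to-one) are given as inputs, and the class names and numbers are hypothetical.

```python
from collections import Counter


def one_vs_rest_predict(scores):
    """scores maps each class to the decision score of its 'class vs. rest' SVM."""
    return max(scores, key=scores.get)


def one_vs_one_predict(pairwise_winners):
    """pairwise_winners lists the class chosen by each pairwise SVM; the majority wins."""
    return Counter(pairwise_winners).most_common(1)[0][0]


# Hypothetical outputs for one test point and three classes.
print(one_vs_rest_predict({"cat": 0.2, "dog": 1.3, "bird": -0.7}))  # dog
# Pairwise SVMs: cat-vs-dog, cat-vs-bird, dog-vs-bird
print(one_vs_one_predict(["dog", "cat", "dog"]))  # dog
```

With c classes, one-to-rest needs c classifiers, while one-to-one needs c(c - 1)/2.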

K-Nearest Neighbor (KNN)

- KNN classifies a new instance by computing its distance to the stored training points in feature space and assigning the class label held by the majority of its k nearest neighbors.

- The distance is commonly Euclidean.
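A minimal KNN classifier in plain Python, assuming Euclidean distance and a simple majority vote. The toy data is hypothetical and assumed to be on comparable scales already; `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(train, query, k):
    """train is a list of (feature_vector, label) pairs; returns the majority label."""
    # Sort training points by Euclidean distance to the query and keep the k closest.
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Toy, already-scaled data (hypothetical).
train = [((1.0, 1.0), "A"), ((1.5, 2.0), "A"),
         ((5.0, 5.0), "B"), ((6.0, 5.5), "B"), ((5.5, 6.0), "B")]
print(knn_classify(train, (2.0, 1.5), k=3))  # A
```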

KNN Classification

- A graph example showing Loan amount vs Age and the classification of whether a person is likely to repay their loan, and whether the classification is correct or not.

KNN Classification - Standardized Distance

- Standardized variables are compared to find the nearest neighbor.

- The formula for calculating the standardized variable is displayed.
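The slide's formula is not reproduced in these notes. The sketch below assumes min-max scaling, X_s = (X - min) / (max - min), which maps each variable to the range [0, 1]; the slide may use a different standardization. The ages are hypothetical.

```python
def min_max_standardize(values):
    """Scale values to [0, 1]: X_s = (X - min) / (max - min)."""
    lo, hi = min(values), max(values)
    if hi == lo:  # constant column: avoid division by zero
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


ages = [20, 35, 60]  # hypothetical ages
print(min_max_standardize(ages))  # [0.0, 0.375, 1.0]
```

After each feature column is scaled this way, distances are computed on the scaled values, so a feature with a large range (such as loan amount) no longer dominates one with a small range (such as age).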

Distance Weighted KNN

- A refinement of KNN that weights each neighbor by its distance from the query point: closer neighbors receive higher weights, and the weight decreases as the distance increases.
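A sketch of distance-weighted KNN. The notes do not give the weighting function, so this assumes weight = 1 / distance, one common choice; a query that coincides with a training point simply takes that point's label. The data is hypothetical and chosen so that weighting changes the answer.

```python
import math
from collections import defaultdict


def weighted_knn_classify(train, query, k):
    """Distance-weighted KNN with weight = 1 / distance (an assumed weighting)."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    scores = defaultdict(float)
    for x, label in nearest:
        d = math.dist(x, query)
        if d == 0:
            return label  # query coincides with a training point
        scores[label] += 1.0 / d  # closer neighbors contribute larger weights
    return max(scores, key=scores.get)


train = [((0.0, 0.0), "B"), ((3.0, 0.0), "A"), ((0.0, 3.0), "A")]
# A plain 3-NN majority vote would give "A"; weighting favors the much closer "B".
print(weighted_knn_classify(train, (0.5, 0.0), k=3))  # B
```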

KNN Summary

- KNN works well when there are few features.

- It becomes slow on large datasets with many features, because each prediction computes distances to all stored training points, but it can handle irregularly shaped target classes that linear methods cannot separate easily.

How to choose K

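- The best value of k depends on the data. A larger k smooths out the effect of noisy points but blurs the boundaries between classes and increases processing time, so k is usually chosen by comparing several values (for example, with cross-validation).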

Performance Metrics of Classification

- Error rate, accuracy, sensitivity, specificity, precision, recall, and F-measure offer different ways to evaluate a classifier. Each is computed from the counts of correct and incorrect predictions (true/false positives and negatives); recall is the same quantity as sensitivity.
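These metrics can be computed from binary confusion-matrix counts as sketched below, using the standard definitions (F-measure here is the harmonic mean of precision and recall, i.e. F1). The counts are hypothetical.

```python
def classification_metrics(tp, fp, tn, fn):
    """Binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + tn + fn
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # identical to sensitivity
    return {
        "accuracy": (tp + tn) / total,
        "error_rate": (fp + fn) / total,
        "sensitivity": recall,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "recall": recall,
        "f_measure": 2 * precision * recall / (precision + recall),
    }


# Hypothetical counts for 100 test instances.
for name, value in classification_metrics(tp=40, fp=10, tn=45, fn=5).items():
    print(f"{name}: {value:.3f}")
```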

Example

- Examples of how to calculate performance metrics using provided datasets and algorithms.

KNN Regression Example – (k=1)

- An example using KNN for regression that predicts the house price index from age and loan amount.
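KNN regression replaces the majority vote with an average of the neighbors' target values; with k = 1 the prediction is just the nearest neighbor's value. A minimal sketch, assuming Euclidean distance on already-standardized features; the (age, loan) values and house price indices are hypothetical.

```python
import math


def knn_regress(train, query, k):
    """train is a list of (feature_vector, target) pairs; returns the mean target of the k nearest."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    return sum(target for _, target in nearest) / len(nearest)


# Hypothetical standardized (age, loan) features with a house price index target.
train = [((0.1, 0.2), 150.0), ((0.4, 0.5), 200.0), ((0.9, 0.8), 300.0)]
print(knn_regress(train, (0.35, 0.45), k=1))  # 200.0: the nearest neighbor's value
print(knn_regress(train, (0.35, 0.45), k=2))  # 175.0: mean of the two nearest
```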

KNN Regression - Standardized Distance

- Variables are standardized (each rescaled with the standardized-variable formula) so that features measured on different scales, such as age and loan amount, contribute comparably to the distance.

Performance Metrics of Regression

- Root Mean Square Error (RMSE), Relative Absolute Error (RAE), and Root Relative Squared Error (RRSE) measure the error between predicted and actual values in regression analysis.
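A sketch of the three regression metrics using their standard definitions, assumed to match the slides: RMSE is the square root of the mean squared error, while RAE and RRSE divide the model's total absolute (or squared) error by that of a baseline that always predicts the mean of the actual values. The numbers are hypothetical.

```python
import math


def regression_errors(actual, predicted):
    """Return (RMSE, RAE, RRSE); RAE and RRSE are relative to predicting the mean."""
    n = len(actual)
    mean_a = sum(actual) / n
    sq_err = sum((p - a) ** 2 for a, p in zip(actual, predicted))
    abs_err = sum(abs(p - a) for a, p in zip(actual, predicted))
    rmse = math.sqrt(sq_err / n)
    rae = abs_err / sum(abs(a - mean_a) for a in actual)
    rrse = math.sqrt(sq_err / sum((a - mean_a) ** 2 for a in actual))
    return rmse, rae, rrse


rmse, rae, rrse = regression_errors(actual=[3.0, 5.0, 7.0], predicted=[4.0, 5.0, 6.0])
print(rmse, rae, rrse)  # RMSE = sqrt(2/3), RAE = 0.5, RRSE = 0.5
```

A relative error below 1 means the model beats the mean-only baseline.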

K-folds cross-validation

- A technique to evaluate a machine learning model by dividing the dataset into k folds, then training and testing the model k times, each time using a different fold as the test set.
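The fold bookkeeping can be sketched as a generator of (train, test) index lists. This version does not shuffle (many implementations shuffle first) and spreads any remainder so that fold sizes differ by at most one; the function name is hypothetical.

```python
def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for each of k folds over n samples (no shuffling)."""
    # Spread the remainder so fold sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


for train, test in k_fold_indices(n=10, k=3):
    print("test fold:", test)
```

Setting k = n makes every test fold a single sample, which is the leave-one-out method.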

Leave-one-out Method

- A special case of k-fold cross-validation where k = N (the number of samples), so each data point is used exactly once as the test set. Each model is trained on all but one sample, at the cost of N training runs.

Cross validation Example

- This section demonstrates calculating accuracy of a model using leave-one-out cross validation.
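A minimal leave-one-out sketch, assuming a 1-nearest-neighbor classifier as the model: each sample is held out once, classified from all the others, and the fraction of correct predictions is the estimated accuracy. The 1-D data is hypothetical.

```python
import math


def loocv_accuracy_1nn(data):
    """Leave-one-out accuracy of a 1-nearest-neighbor classifier.

    data is a list of (feature_vector, label) pairs.
    """
    correct = 0
    for i, (x, label) in enumerate(data):
        rest = data[:i] + data[i + 1:]  # train on every other sample
        _, predicted = min(rest, key=lambda item: math.dist(item[0], x))
        correct += predicted == label
    return correct / len(data)


# Hypothetical 1-D data; the "A" point at 2.4 is nearest to the "B" group, so it is misclassified.
data = [((1.0,), "A"), ((1.2,), "A"), ((3.0,), "B"), ((3.1,), "B"), ((2.4,), "A")]
print(loocv_accuracy_1nn(data))  # 0.8
```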

Rationale for Ensemble Learning

- There's no single algorithm that consistently delivers superior accuracy.

- Combining diverse models, for example ones trained on different attributes, with different parameters, or on different subsets of the training samples, can improve accuracy over any single model.

Voting

- Voting is an ensemble method that combines the predictions of multiple base learners.
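Majority voting can be sketched in a few lines: for each test instance, the ensemble outputs the label predicted by most base learners. The learner outputs below are hypothetical; ties go to the label counted first, which is an arbitrary choice.

```python
from collections import Counter


def majority_vote(predictions):
    """Return the most common label among the base learners' predictions."""
    return Counter(predictions).most_common(1)[0][0]


# Hypothetical outputs of three base learners for four test instances.
learner_outputs = [
    ["yes", "no", "yes", "no"],
    ["yes", "yes", "no", "no"],
    ["no", "yes", "yes", "no"],
]
# zip(*...) groups the three learners' predictions for each instance.
ensemble = [majority_vote(column) for column in zip(*learner_outputs)]
print(ensemble)  # ['yes', 'yes', 'yes', 'no']
```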

Clustering algorithms

- Clustering algorithms group similar data points. Common families include hierarchical and partitional methods.

Algorithm k-means

- K-means is a partitional clustering algorithm to group similar data points into k clusters.

K-means Clustering: Step 1- Step 5

- The steps of the k-means algorithm for clustering data, with illustrations of the centroids moving as points are reassigned.
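A minimal sketch of the k-means loop in plain Python. It assumes Euclidean distance and initializes the centers with k randomly chosen input points (one common choice; the slides' initialization may differ). It alternates the assignment step and the center-update step until no point changes cluster. Different seeds can converge to different local optima.

```python
import math
import random


def kmeans(points, k, max_iter=100, seed=0):
    """Return (centers, assignment) where assignment[j] is the cluster of points[j]."""
    rng = random.Random(seed)
    centers = rng.sample(points, k)  # initialize with k randomly chosen input points
    assignment = None
    for _ in range(max_iter):
        # Assignment step: each point goes to its nearest center.
        new_assignment = [min(range(k), key=lambda i: math.dist(p, centers[i])) for p in points]
        if new_assignment == assignment:
            break  # no point changed cluster: converged
        assignment = new_assignment
        # Update step: move each center to the mean of its assigned points.
        for i in range(k):
            members = [p for p, a in zip(points, assignment) if a == i]
            if members:  # keep the old center if a cluster ends up empty
                centers[i] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, assignment


# Two hypothetical, well-separated groups of 2-D points.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 0.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centers, assignment = kmeans(points, k=2)
print(assignment)
```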

Objective function

- The mathematical form of the k-means objective function, which the algorithm minimizes: the total distance between the data points and the centroids of their assigned clusters.
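For reference, one common way to write this objective, using the symbols from the quiz questions: x_j is a data point, z_i a cluster center, w_ij a membership indicator, and d the Euclidean distance. Some texts write d(x_j, z_i) without the square, so treat the exact form as an assumption. With the assignments fixed, J is minimized by setting each center to the mean of its members:

$$
J = \sum_{i=1}^{k} \sum_{j=1}^{n} w_{ij}\, d(x_j, z_i)^2,
\qquad
w_{ij} =
\begin{cases}
1 & \text{if } x_j \text{ is assigned to cluster } i,\\
0 & \text{otherwise,}
\end{cases}
\qquad
z_i = \frac{\sum_{j=1}^{n} w_{ij}\, x_j}{\sum_{j=1}^{n} w_{ij}}.
$$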

Comments on the K-Means Method

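- K-means is relatively efficient, with time complexity O(tkn) for n objects, k clusters, and t iterations, and it generally performs well at finding clusters.

- It often terminates at a local optimum rather than the global optimum, requires k to be specified in advance, and does not handle all data types and distributions well (for example, it needs a cluster mean to be defined).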

How can we tell the right number of clusters?

- Approximate methods are used to estimate the optimal number of clusters. One is to plot the objective function against k and choose the value of k after which further increases no longer reduce it substantially (the "elbow").

Clustering Method Examples

- Several techniques/methods for evaluating clustering results.

Database/Python Example

- Python examples of K-means clustering on sample datasets, including functions for calculating the Davies-Bouldin Index to evaluate the resulting clusters.
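The Davies-Bouldin Index can also be computed by hand, as in this plain-Python sketch of the common definition. For each cluster i, take the largest ratio (s_i + s_j) / d(c_i, c_j) over the other clusters j, then average these maxima. Here s_i is the average distance of cluster i's points to its centroid c_i. Lower values indicate more compact, better-separated clusters. This is not necessarily the function used in the slides (scikit-learn, for instance, provides `davies_bouldin_score`). The sketch assumes at least two clusters with distinct centroids.

```python
import math


def davies_bouldin(points, labels):
    """Davies-Bouldin index of a clustering (lower is better)."""
    clusters = sorted(set(labels))
    centroids, scatter = {}, {}
    for c in clusters:
        members = [p for p, lab in zip(points, labels) if lab == c]
        centroids[c] = tuple(sum(v) / len(members) for v in zip(*members))
        # Average distance of the cluster's points to its centroid.
        scatter[c] = sum(math.dist(p, centroids[c]) for p in members) / len(members)
    total = 0.0
    for i in clusters:
        # Worst-case similarity of cluster i to any other cluster.
        total += max((scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j])
                     for j in clusters if j != i)
    return total / len(clusters)


points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
labels = [0, 0, 1, 1]
print(davies_bouldin(points, labels))  # 0.2
```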

Hierarchical Clustering : Agglomerative

- A hierarchical clustering approach that starts by treating each data point as a separate cluster, then repeatedly merges the two closest clusters until all points are combined into a single cluster.

Intermediate State

- Intermediate state of algorithm in the process of clustering.

After Merging

- The process of updating the distance matrix after two clusters are merged.

Distance between two clusters

- How to calculate the distance between two clusters; for example, single link uses the similarity of the most similar pair of points, one from each cluster.
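Single link, as described above, can be sketched in plain Python with Euclidean distance. Complete and average link are included only for comparison as other common definitions; the notes do not say whether the slides cover them. The two small clusters are hypothetical.

```python
import math
from itertools import product


def single_link(a, b):
    """Distance between the closest pair of points, one from each cluster."""
    return min(math.dist(p, q) for p, q in product(a, b))


def complete_link(a, b):
    """Distance between the farthest pair of points, one from each cluster."""
    return max(math.dist(p, q) for p, q in product(a, b))


def average_link(a, b):
    """Average distance over all cross-cluster pairs."""
    return sum(math.dist(p, q) for p, q in product(a, b)) / (len(a) * len(b))


a = [(0.0, 0.0), (1.0, 0.0)]
b = [(3.0, 0.0), (6.0, 0.0)]
print(single_link(a, b), complete_link(a, b), average_link(a, b))  # 2.0 6.0 4.0
```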

Single-link clustering: example

- An example of how to perform single link clustering on data using proximity graphs and evaluating clustering similarities.
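A naive single-link agglomerative clustering sketch in plain Python, assuming Euclidean distance. It starts with one cluster per point and repeatedly merges the closest pair, recording each merge and its distance (the information a dendrogram displays). The points are hypothetical.

```python
import math
from itertools import combinations


def single_link_agglomerative(points):
    """Return the merge history as (cluster_a, cluster_b, distance) tuples.

    Clusters are lists of point indices.
    """
    clusters = [[i] for i in range(len(points))]  # start: one cluster per point
    merges = []
    while len(clusters) > 1:
        best = None
        for a, b in combinations(range(len(clusters)), 2):
            # Single link: closest pair of points across the two clusters.
            d = min(math.dist(points[i], points[j]) for i in clusters[a] for j in clusters[b])
            if best is None or d < best[0]:
                best = (d, a, b)
        d, a, b = best
        merges.append((clusters[a], clusters[b], d))
        merged = clusters[a] + clusters[b]
        clusters = [c for idx, c in enumerate(clusters) if idx not in (a, b)] + [merged]
    return merges


points = [(0.0, 0.0), (1.0, 0.0), (5.0, 0.0), (6.5, 0.0)]
for left, right, d in single_link_agglomerative(points):
    print(left, "+", right, "at distance", d)
```

This recomputes every cluster distance from scratch at each step, which is roughly cubic in the number of points. Keeping a distance matrix and updating only the row for the merged cluster avoids most of that work.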

Important Python Functions

- Python functions for hierarchical clustering using the AgglomerativeClustering class.

Quiz

- Questions about the various clustering algorithms displayed for practical application in data science.

Density-Based Clustering Methods

- Clustering methods that group data points based on density. They can find clusters of arbitrary shape and are robust to noise, but they have limitations: the density parameters must be specified in advance.

Density-Based Clustering: Basic Concepts

- Basic concepts and parameters of density-based clustering methods.

Density-Reachable and Density-Connected

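- Relations that link data points directly or indirectly through dense regions: a point is density-reachable from a core point if a chain of directly density-reachable points connects them, and two points are density-connected if both are density-reachable from a common point.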

DBSCAN: The Algorithm

- A step-by-step explanation of the DBSCAN clustering algorithm.
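A compact, unoptimized DBSCAN sketch in plain Python. `eps` and `min_pts` play the roles of the usual Eps and MinPts parameters, and, following the common convention, a point's Eps-neighborhood includes the point itself. Labels are cluster ids starting at 0, with -1 for noise. Neighbor search is a linear scan, so the sketch is O(n^2). The points are hypothetical.

```python
import math


def dbscan(points, eps, min_pts):
    """Return one label per point: a cluster id (0, 1, ...) or -1 for noise."""
    NOISE = -1
    labels = [None] * len(points)  # None means "not yet visited"

    def region_query(i):
        # Eps-neighborhood of point i (includes i itself).
        return [j for j in range(len(points)) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(i)
        if len(neighbors) < min_pts:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        labels[i] = cluster_id  # i is a core point: start a new cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point, density-reachable from a core point
                continue
            if labels[j] is not None:
                continue  # already assigned
            labels[j] = cluster_id
            j_neighbors = region_query(j)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels


points = [(0, 0), (0, 1), (1, 0), (1, 1),
          (10, 10), (10, 11), (11, 10), (11, 11),
          (50, 50)]
print(dbscan(points, eps=1.5, min_pts=3))  # [0, 0, 0, 0, 1, 1, 1, 1, -1]
```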

Description

This quiz tests your understanding of key concepts in machine learning, focusing on classification and clustering techniques. You'll explore differences between the two, the purposes of regression analysis, and performance metrics in data analysis. Assess your knowledge of advanced methods like k-fold cross-validation and ensemble learning.