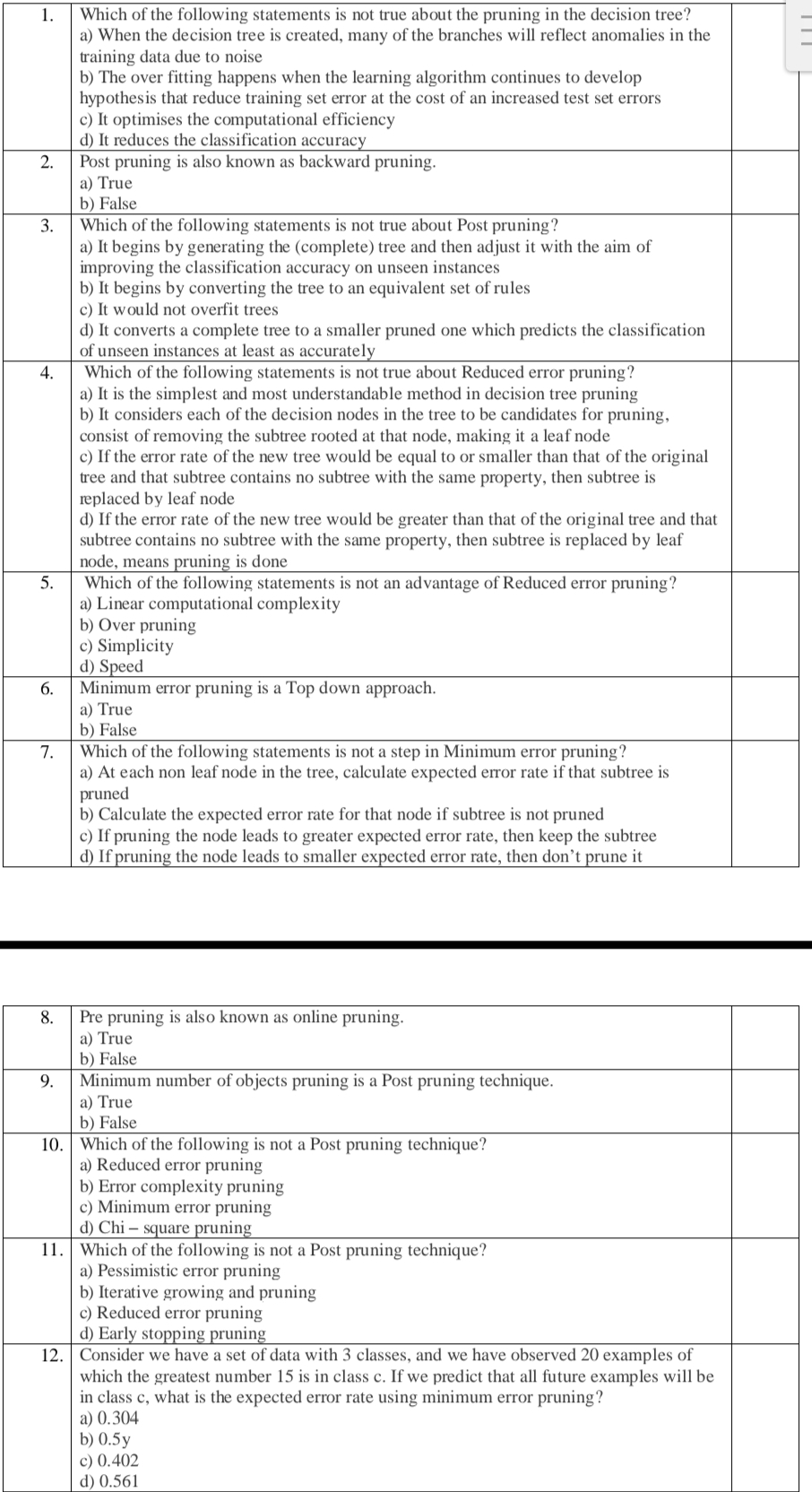

Which of the following statements is not true about pruning in the decision tree?
a) When the decision tree is created, many of the branches will reflect anomalies in the training data due to noise
b) Overfitting happens when the learning algorithm continues to develop hypotheses that reduce training set error at the cost of increased test set error
c) It optimises the computational efficiency
d) It reduces the classification accuracy.

Understand the Problem

The question asks which statement about decision tree pruning is false. Options a and b describe why pruning is needed (branches fitted to noise, and overfitting), while options c and d describe its claimed effects on computational efficiency and classification accuracy.

Answer

d) It reduces the classification accuracy.

More Information

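Pruning improves decision tree performance by removing branches that contribute little to classifying instances correctly, typically those fitted to noise in the training data. This reduces the complexity of the model and avoids overfitting, so accuracy on unseen data tends to improve. Statements a, b, and c are therefore true, and d is the one that is not.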
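As a rough illustration of that effect, here is a minimal sketch of reduced-error pruning, one common pruning technique, in plain Python. Everything in it is an assumption made for the example: the `Node` class, the hand-built tree, and the toy `train` and `validation` sets are hypothetical, with one deliberately mislabelled training point (x = 2.0) that the unpruned tree has memorised.

```python
class Node:
    """A decision tree node; a node without children acts as a leaf."""

    def __init__(self, majority, feature=None, threshold=None, left=None, right=None):
        self.majority = majority    # majority training label reaching this node
        self.feature = feature      # index of the feature tested here
        self.threshold = threshold  # go left if x[feature] <= threshold
        self.left = left
        self.right = right

    def is_leaf(self):
        return self.left is None


def predict(node, x):
    while not node.is_leaf():
        node = node.left if x[node.feature] <= node.threshold else node.right
    return node.majority


def accuracy(root, data):
    return sum(predict(root, x) == y for x, y in data) / len(data)


def count_leaves(node):
    if node.is_leaf():
        return 1
    return count_leaves(node.left) + count_leaves(node.right)


def reduced_error_prune(node, root, validation):
    """Bottom-up: collapse a subtree into a leaf unless validation accuracy drops."""
    if node.is_leaf():
        return
    reduced_error_prune(node.left, root, validation)
    reduced_error_prune(node.right, root, validation)
    before = accuracy(root, validation)
    saved = (node.left, node.right)
    node.left = node.right = None        # tentatively turn this node into a leaf
    if accuracy(root, validation) < before:
        node.left, node.right = saved    # pruning hurt validation accuracy: undo


# Toy data (hypothetical). The underlying rule is "label 1 if x > 5",
# but the training point x = 2.0 is mislabelled as 1 (noise).
train = [((0.5,), 0), ((1.0,), 0), ((2.0,), 1), ((3.0,), 0), ((4.0,), 0),
         ((4.5,), 0), ((6.0,), 1), ((7.0,), 1), ((8.0,), 1)]
validation = [((1.0,), 0), ((2.0,), 0), ((3.0,), 0), ((4.0,), 0),
              ((6.0,), 1), ((7.0,), 1), ((8.0,), 1)]

# Hand-built, overgrown tree with extra splits that isolate the noisy point.
noise_split = Node(0, feature=0, threshold=2.5, left=Node(1), right=Node(0))
left_subtree = Node(0, feature=0, threshold=1.5, left=Node(0), right=noise_split)
root = Node(0, feature=0, threshold=5.0, left=left_subtree, right=Node(1))

stats_before = (count_leaves(root), accuracy(root, train), accuracy(root, validation))
reduced_error_prune(root, root, validation)
stats_after = (count_leaves(root), accuracy(root, train), accuracy(root, validation))

print("leaves, train accuracy, validation accuracy")
print("before pruning:", stats_before)
print("after pruning: ", stats_after)
```

On this toy data the tree shrinks from four leaves to two. Training accuracy drops slightly because the noisy point is no longer memorised, while accuracy on the held-out validation set rises. Real libraries use their own pruning strategies, so treat this only as a picture of the idea.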

Tips

A common mistake is to assume that pruning always reduces accuracy. It may lower accuracy on the training set slightly, but by preventing overfitting it can improve performance on unseen data.
