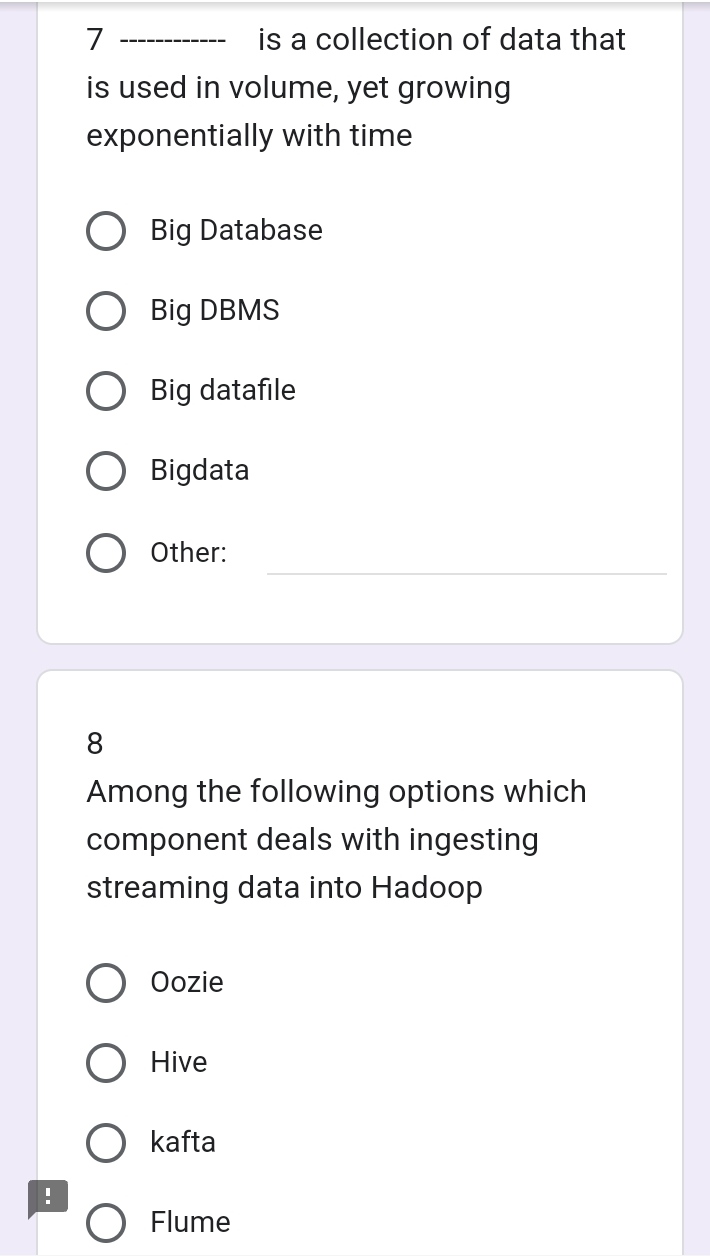

7) What is a collection of data that is huge in volume, yet growing exponentially with time?
8) Among the following options, which component deals with ingesting streaming data into Hadoop?

Understand the Problem

The questions focus on data management concepts and tools. The first asks for the term that describes a large collection of data that keeps growing over time. The second asks which component of the Hadoop ecosystem handles the ingestion of streaming data.

Answer

7) Big Data; 8) Flume

More Information

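Big Data refers to data sets that are so large, complex, and fast-growing that traditional data-processing tools struggle to handle them. Apache Flume is a distributed service for efficiently collecting, aggregating, and moving large amounts of log data into Hadoop storage such as HDFS.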
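A Flume agent moves data through three stages: a source that receives events, a channel that buffers them, and a sink that writes them to a destination such as HDFS. The pure-Python sketch below only models that pattern to make "collecting, aggregating, and moving" concrete; it does not use Flume's API. The names `Event`, `MemoryChannel`, `collect`, and `drain`, and the plain list standing in for HDFS, are hypothetical.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Event:
    """One unit of data, loosely modeled on a Flume event: headers plus a byte body."""
    headers: dict
    body: bytes


class MemoryChannel:
    """Bounded FIFO buffer between a source and a sink (hypothetical stand-in)."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._queue: deque[Event] = deque()

    def put(self, event: Event) -> bool:
        # Refuse the event when the buffer is full; the caller decides what to do.
        if len(self._queue) >= self.capacity:
            return False
        self._queue.append(event)
        return True

    def take_batch(self, max_size: int) -> list[Event]:
        # Remove up to max_size events from the front of the buffer.
        batch = []
        while self._queue and len(batch) < max_size:
            batch.append(self._queue.popleft())
        return batch


def collect(lines, channel: MemoryChannel, host: str) -> int:
    """Source step: wrap each log line in an Event and push it into the channel.

    Events refused by a full channel are simply dropped in this sketch.
    Returns the number of events accepted.
    """
    accepted = 0
    for line in lines:
        event = Event(headers={"host": host}, body=line.encode("utf-8"))
        if channel.put(event):
            accepted += 1
    return accepted


def drain(channel: MemoryChannel, store: list, batch_size: int) -> int:
    """Sink step: move events out of the channel in batches into `store`.

    `store` is a plain list standing in for HDFS. Returns the number moved.
    """
    moved = 0
    while batch := channel.take_batch(batch_size):
        store.extend(e.body.decode("utf-8") for e in batch)
        moved += len(batch)
    return moved


if __name__ == "__main__":
    channel = MemoryChannel(capacity=100)
    hdfs_stand_in: list[str] = []
    # Aggregation: two hosts feed the same channel.
    collect(["GET /index.html 200", "GET /missing 404"], channel, host="web01")
    collect(["POST /login 302"], channel, host="web02")
    drain(channel, hdfs_stand_in, batch_size=2)
    print(hdfs_stand_in)
```

The bounded channel is the design point worth noticing: it decouples how fast the sources produce data from how fast the sink can write it, which is why Flume places a channel between every source and sink.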

Tips

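A common mistake is confusing Flume with Kafka. Both handle streaming data, but Flume was built primarily to deliver data into Hadoop stores such as HDFS and HBase, whereas Kafka is a general-purpose distributed event-streaming platform.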

AI-generated content may contain errors. Please verify critical information.