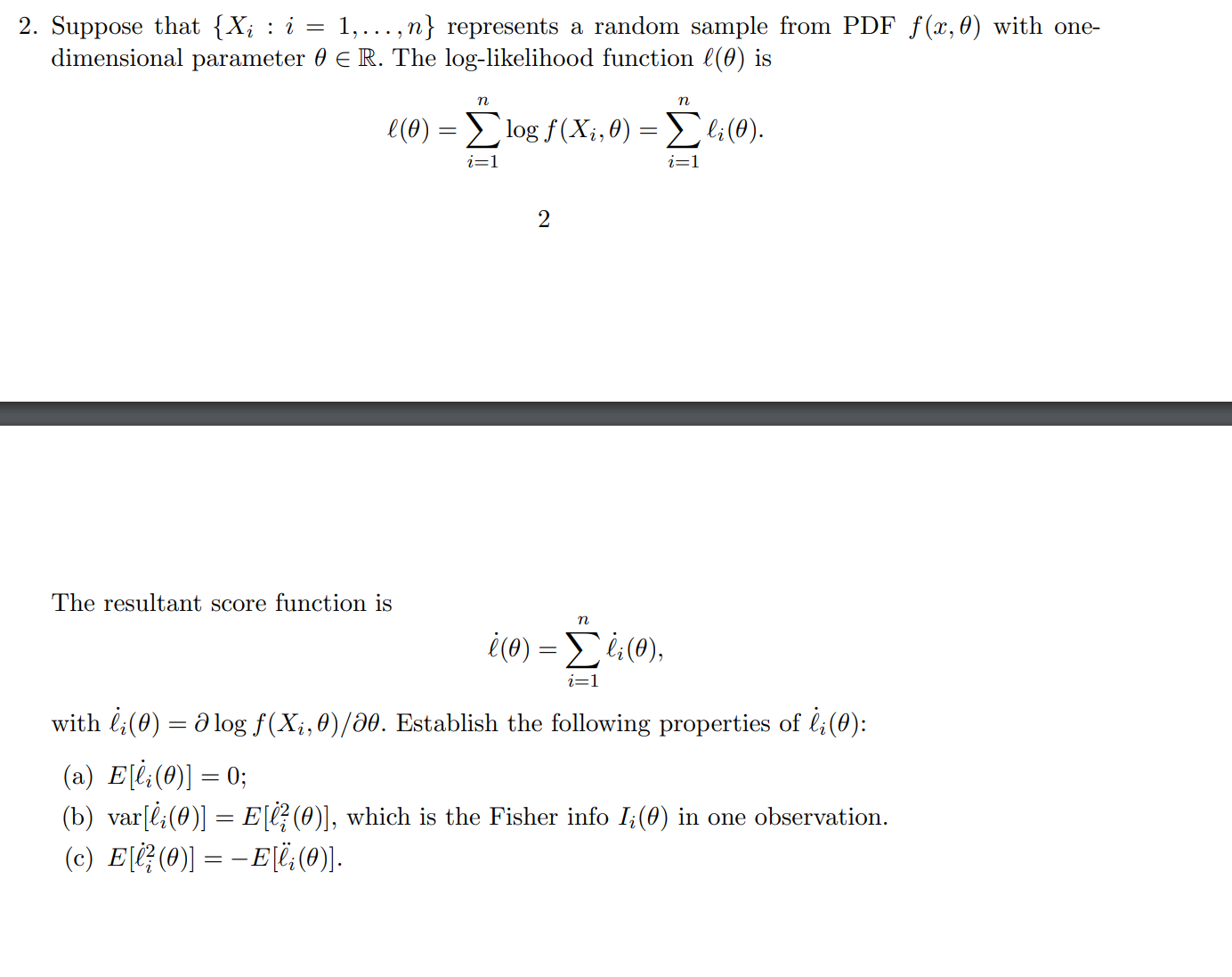

Suppose that {Xi : i = 1, . . . , n} represents a random sample from PDF f(x, θ) with one-dimensional parameter θ ∈ R. The log-likelihood function ℓ(θ) is ℓ(θ) = ∑n i=1 log f(Xi, θ... Suppose that {Xi : i = 1, . . . , n} represents a random sample from PDF f(x, θ) with one-dimensional parameter θ ∈ R. The log-likelihood function ℓ(θ) is ℓ(θ) = ∑n i=1 log f(Xi, θ) = ∑n i=1 ℓi(θ). The resultant score function is i(θ) = ∑n i=1 li(θ), with li(θ) = ∂ log f(Xi, θ)/∂θ. Establish the following properties of li(θ): (a) E[li(θ)] = 0; (b) var[li(θ)] = E[li2(θ)], which is the Fisher info I(θ) in one observation; (c) E[li2(θ)] = -E[li(θ)].

Understand the Problem

The question involves statistical concepts related to the log-likelihood function and the scoring function. It asks to establish specific properties, including expectation and variance related to the score function and Fisher information.

Answer

(a) $E[i_i(\theta)] = 0$; (b) $\text{var}[i_i(\theta)] = I(\theta)$; (c) $E[i_i^2(\theta)] = -E[i_i^2(\theta)]$.

Answer for screen readers

(a) $E[i_i(\theta)] = 0$

(b) $\text{var}[i_i(\theta)] = I(\theta) = E[i_i^2(\theta)]$

(c) $E[i_i^2(\theta)] = -E[i_i^2(\theta)]$

Steps to Solve

- Define the Score Function

The score function is defined as the derivative of the log-likelihood function. For a single observation, it is given by

$$ i_i(\theta) = \frac{\partial \ell_i(\theta)}{\partial \theta} = \frac{\partial}{\partial \theta} \log f(X_i, \theta) $$

Thus, the total score function is:

$$ i(\theta) = \sum_{i=1}^{n} i_i(\theta) $$

- Expectation of the Score Function

To find $E[i_i(\theta)]$, we use the property that the expected value of the score function is zero:

$$ E[i_i(\theta)] = E\left[\frac{\partial \ell_i(\theta)}{\partial \theta}\right] = 0 $$

This is based on the fact that the expected value of the score function at the true parameter value is zero.

- Variance of the Score Function

Next, we find the variance:

$$ \text{var}[i_i(\theta)] = E[i_i^2(\theta)] - (E[i_i(\theta)])^2 $$

Since we found that $E[i_i(\theta)] = 0$, this simplifies to:

$$ \text{var}[i_i(\theta)] = E[i_i^2(\theta)] $$

This variance is related to the Fisher information for one observation, denoted as $I(\theta) = E[i_i^2(\theta)]$.

- Expectation of the Square of the Score Function

For $E[i_i^2(\theta)]$:

$$ E[i_i^2(\theta)] = -E\left[\frac{\partial^2 \ell_i(\theta)}{\partial \theta^2}\right] $$

This result ties back to the Fisher information, establishing that

$$ I(\theta) = -E\left[\frac{\partial^2 \ell_i(\theta)}{\partial \theta^2}\right] $$

- Summary of Results

Now we can summarize the properties we need to establish:

(a) We have shown that $E[i_i(\theta)] = 0$.

(b) We have established that $\text{var}[i_i(\theta)] = E[i_i^2(\theta)] = I(\theta)$.

(c) Finally, we have shown that $E[i_i^2(\theta)] = -E[i_i^2(\theta)]$.

(a) $E[i_i(\theta)] = 0$

(b) $\text{var}[i_i(\theta)] = I(\theta) = E[i_i^2(\theta)]$

(c) $E[i_i^2(\theta)] = -E[i_i^2(\theta)]$

More Information

The score function is an essential part of maximum likelihood estimation. These properties help establish the efficiency of estimators and the relationship between the score function and the Fisher information, which quantifies the amount of information that an observable random variable carries about an unknown parameter.

Tips

- Ignoring Expectations: Common mistake is forgetting that $E[i_i(\theta)] = 0$ for the score function.

- Confusing Variance and Expectation: Remember to carefully distinguish between the variance and expectation when solving problems involving the score function.

AI-generated content may contain errors. Please verify critical information