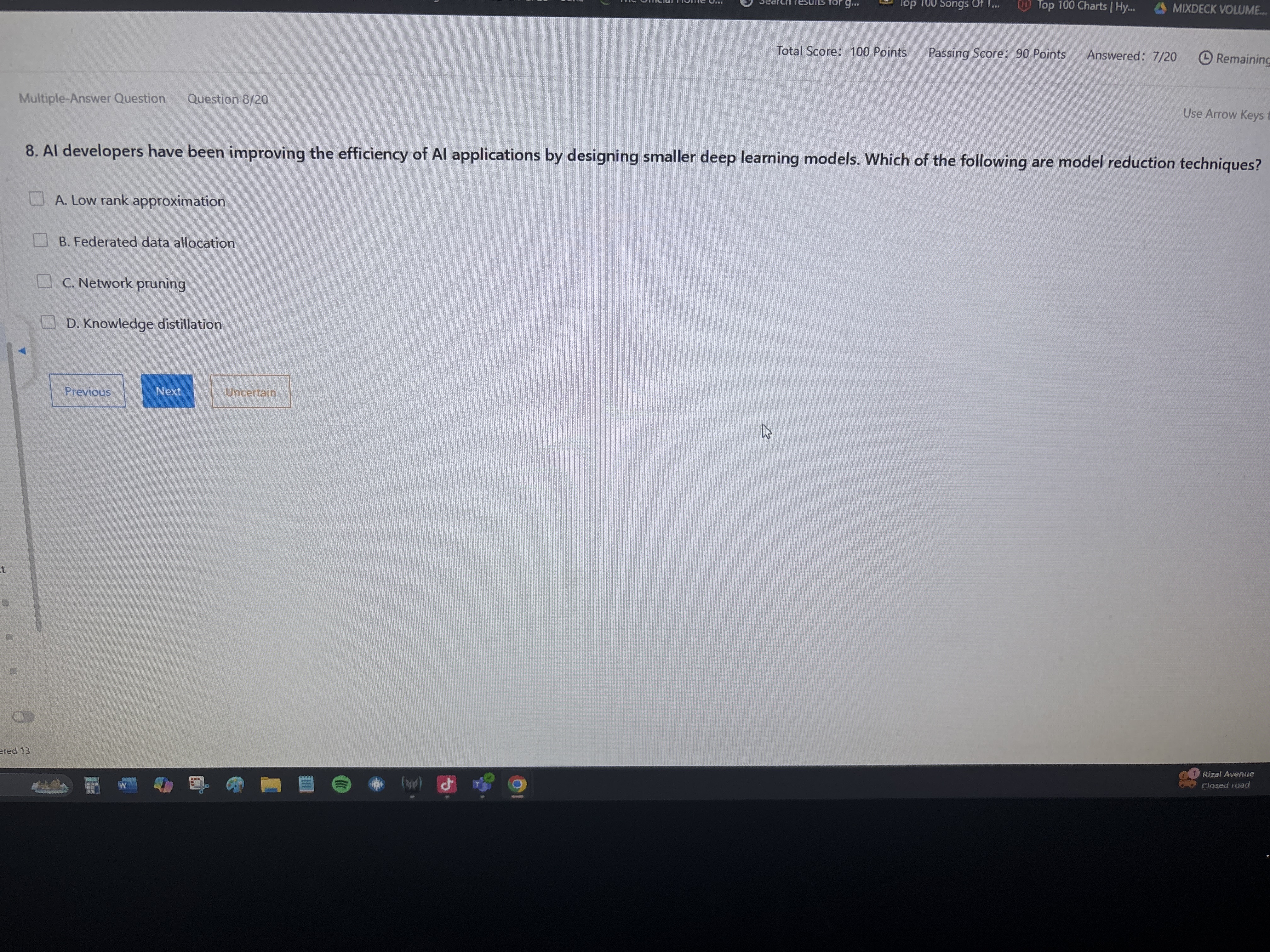

AI developers have been improving the efficiency of AI applications by designing smaller deep learning models. Which of the following are model reduction techniques?

Understand the Problem

The question asks which of the listed options are model reduction techniques, that is, methods for making deep learning models smaller and cheaper to run. Answering it requires knowing the common approaches to deep learning model optimization.

Answer

A. Low rank approximation, C. Network pruning, D. Knowledge distillation

The correct model reduction techniques include A. Low rank approximation, C. Network pruning, and D. Knowledge distillation.

More Information

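Low-rank approximation reduces model size by replacing a large weight matrix with the product of two thinner ones: an m × n matrix is approximated by an m × r matrix times an r × n matrix, which cuts the parameter count from mn to r(m + n) when the rank r is small. Network pruning removes weights, neurons, or channels that contribute little to the output (for example, the weights with the smallest magnitudes), yielding a sparser or narrower network. Knowledge distillation trains a smaller "student" model to reproduce the outputs of a larger "teacher" model, so the student can retain much of the teacher's accuracy at a fraction of its size.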
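
To make the three definitions in the previous paragraph concrete, here is a minimal NumPy sketch, not a production implementation. It assumes truncated SVD for the low-rank factorization, unstructured magnitude pruning (one of several pruning criteria; structured pruning of whole neurons or channels is also common), and the temperature-scaled soft-target loss widely used for distillation. The function names `low_rank_approx`, `magnitude_prune`, and `distillation_loss`, and the defaults `T=4.0` and `alpha=0.5`, are hypothetical choices for illustration.

```python
# Illustrative sketch: function names and default values are hypothetical,
# not taken from any specific library.
import numpy as np


def low_rank_approx(W: np.ndarray, rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Approximate W (m x n) as A @ B, with A: (m, rank) and B: (rank, n), via truncated SVD."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * S[:rank]  # left singular vectors scaled by the kept singular values
    B = Vt[:rank, :]            # matching right singular vectors
    return A, B


def magnitude_prune(W: np.ndarray, sparsity: float) -> np.ndarray:
    """Return a copy of W with the `sparsity` fraction of smallest-magnitude weights set to zero."""
    k = int(sparsity * W.size)        # number of weights to remove
    flat = W.astype(float).flatten()  # flatten() returns a copy, so W is untouched
    flat[np.argsort(np.abs(flat))[:k]] = 0.0
    return flat.reshape(W.shape)


def log_softmax(z: np.ndarray, T: float = 1.0) -> np.ndarray:
    """Numerically stable log-softmax over the last axis at temperature T."""
    z = z / T
    z = z - z.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))


def distillation_loss(student_logits: np.ndarray, teacher_logits: np.ndarray,
                      labels: np.ndarray, T: float = 4.0, alpha: float = 0.5) -> float:
    """Blend of soft-target KL(teacher || student) at temperature T and hard-label cross-entropy."""
    log_p_t = log_softmax(teacher_logits, T)
    log_p_s = log_softmax(student_logits, T)
    # Per-example KL divergence, averaged over the batch; T**2 keeps its scale comparable
    kl = (np.exp(log_p_t) * (log_p_t - log_p_s)).sum(axis=-1).mean() * T**2
    # Ordinary cross-entropy of the student against the true class indices (T = 1)
    ce = -log_softmax(student_logits)[np.arange(len(labels)), labels].mean()
    return alpha * kl + (1 - alpha) * ce


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.standard_normal((64, 32))

    A, B = low_rank_approx(W, rank=8)
    print("params:", W.size, "->", A.size + B.size)  # 2048 -> 768
    print("relative error:", np.linalg.norm(W - A @ B) / np.linalg.norm(W))

    P = magnitude_prune(W, sparsity=0.5)
    print("nonzero weights:", np.count_nonzero(P), "of", W.size)

    teacher = rng.standard_normal((4, 10))
    student = rng.standard_normal((4, 10))
    labels = np.array([1, 0, 7, 3])
    print("distillation loss:", distillation_loss(student, teacher, labels))
```

Note that the pruned matrix in this sketch is still stored densely; actual memory or speed savings from pruning depend on sparse storage formats or hardware support, whereas the low-rank factors shrink storage directly (here from 2048 to 768 values).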

Tips

A common mistake is treating Federated data allocation as a model reduction technique. It concerns how data and training are distributed across decentralized devices, as in federated learning, and does not by itself make the model smaller.
