Questions and Answers

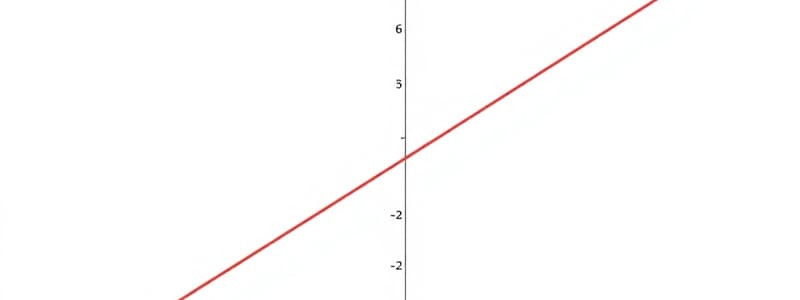

The estimated slope coefficient in a simple linear regression is:

- the ratio of the covariance of the regression variables to the variance of the independent variable. (correct)

- the change in the independent variable, given a one-unit change in the dependent variable.

- the predicted value of the dependent variable, given the actual value of the independent variable.

Given the relationship: Y = 2.83 + 1.5X. What is the predicted value of the dependent variable when the value of the independent variable equals 2?

- 2.83.

- –0.55.

- 5.83. (correct)

When there is a linear relationship between an independent variable and the relative change in the dependent variable, the most appropriate model for a simple regression is:

- the lin-log model.

- the log-log model.

- the log-lin model. (correct)

The R² for this regression is closest to:

The coefficient of determination for a linear regression is best described as the:

A simple linear regression is said to exhibit heteroskedasticity if its residual term:

To determine a confidence interval around the predicted value from a simple linear regression, the appropriate degrees of freedom are:

Which of the following is least likely an assumption of linear regression?

A simple linear regression is a model of the relationship between:

The F-statistic for the test of the fit of the model is closest to:

To account for logarithmic variables, functional forms of simple linear regressions are available if:

The strength of the relationship, as measured by the correlation coefficient, between the return on mid-cap stocks and the return on the S&P 500 for the period under study was:

In a simple regression model, the least squares criterion is to minimize the sum of squared differences between:

Flashcards

Slope coefficient in linear regression

The change in the dependent variable, given a one-unit change in the independent variable.

Heteroskedasticity

Condition where the variance of the residual term of a regression is not constant across all observations.

Simple linear regression

A model of the relationship between one dependent variable and one independent variable.

Least squares criterion

Confidence interval degrees of freedom

Assumption of linear regression

Coefficient of determination

Log-lin model

R-squared equation

Logarithmic variables

Correlation coefficient (r)

F-statistic equation

Study Notes

- The estimated slope coefficient in simple linear regression represents the change in the dependent variable for a one-unit change in the independent variable.

- The formula for the estimated slope coefficient is Cov(X, Y) / Var(X), the covariance of X and Y divided by the variance of X (σ²_X), where Y is the dependent variable and X is the independent variable.

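- To predict the value of the dependent variable (Y) for a given value of the independent variable (X), substitute X into the regression equation. With Y = 2.83 + 1.5X and X = 2, the predicted value is 2.83 + 1.5(2) = 5.83.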

- When a linear relationship exists between an independent variable and the relative change in the dependent variable, a log-lin model, a regression of the form ln Y = b₀ + b₁X, is appropriate.

- R² is calculated as the sum of squares regression (SSR) divided by the sum of squares total (SST). In the quiz example, R² = 556 / 1,235 ≈ 0.45.
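
The slope formula and the prediction step in the notes above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the helper names `fit_simple_ols` and `predict` and the sample data are hypothetical, and the data are constructed to lie exactly on the line Y = 2.83 + 1.5X so that the fit recovers the quiz's coefficients.

```python
from statistics import mean


def fit_simple_ols(x, y):
    """Return (intercept, slope) for Y = b0 + b1 * X, with slope = Cov(X, Y) / Var(X)."""
    x_bar, y_bar = mean(x), mean(y)
    # The (n - 1) divisor of the sample covariance and the sample variance cancels in the ratio,
    # so sums of cross-products and squared deviations are enough.
    cov_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    var_x = sum((xi - x_bar) ** 2 for xi in x)
    slope = cov_xy / var_x
    intercept = y_bar - slope * x_bar
    return intercept, slope


def predict(intercept, slope, x_value):
    """Predicted value of the dependent variable for a given X."""
    return intercept + slope * x_value


# Hypothetical data lying exactly on Y = 2.83 + 1.5X.
x = [0.0, 1.0, 2.0, 3.0, 4.0]
y = [2.83 + 1.5 * xi for xi in x]

b0, b1 = fit_simple_ols(x, y)
y_hat = predict(2.83, 1.5, 2)  # the quiz question: X = 2
print(round(b0, 2), round(b1, 2), round(y_hat, 2))
```

Because the slope is a ratio of covariance to variance, it is expressed in units of Y per unit of X, which is why it reads as the change in Y for a one-unit change in X.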

Coefficient of Determination

- The coefficient of determination for a linear regression describes the percentage of variation in the dependent variable explained by the variation of the independent variable.
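
As a quick check of the ratio described above, the following sketch computes R² from the two sums of squares quoted in the notes (556 and 1,235). The function name `r_squared` is an illustrative choice, not something defined in the source.

```python
def r_squared(ssr, sst):
    """Coefficient of determination: explained variation divided by total variation."""
    if sst <= 0:
        raise ValueError("total sum of squares must be positive")
    return ssr / sst


# Figures quoted in the study notes: SSR = 556, SST = 1,235.
r2 = r_squared(556, 1235)
print(round(r2, 2))  # 0.45
```

An R² of about 0.45 means roughly 45% of the variation in the dependent variable is explained by the independent variable.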

Heteroskedasticity

- Heteroskedasticity occurs when the variance of the residual term in a regression is not constant across all observations.
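
One informal way to see what non-constant residual variance looks like is to compare the spread of residuals at low and high values of X. The sketch below uses made-up residuals, assumed to be ordered by X, and is only an illustration; it is not a formal statistical test for heteroskedasticity.

```python
from statistics import pvariance

# Hypothetical residuals, ordered by increasing X. The spread grows with X.
residuals = [0.1, -0.1, 0.2, -0.2, 1.0, -1.0, 2.0, -2.0]

half = len(residuals) // 2
var_low = pvariance(residuals[:half])    # residual variance for small X
var_high = pvariance(residuals[half:])   # residual variance for large X

# Under homoskedasticity the two variances would be similar (ratio near 1).
ratio = var_high / var_low
print(var_low, var_high, ratio)
```

A ratio far from 1, as in this constructed example, suggests the residual variance is not constant across observations.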

Confidence Interval

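- To determine a confidence interval around a predicted value from a simple linear regression, the degrees of freedom are n − 2, where n is the number of observations (two parameters, the intercept and the slope, are estimated).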
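
The role of the n − 2 degrees of freedom can be sketched as follows. This example assumes the standard textbook form of the interval, ŷ ± t × s_f, where s_f is the standard error of the forecast. The function name `prediction_interval`, the sample data, and the critical value are illustrative assumptions; the critical t-value must be looked up for n − 2 degrees of freedom (3.182 is the two-tailed 5% value for 3 degrees of freedom).

```python
import math


def prediction_interval(x, y, x_new, t_crit):
    """Return (low, high, df) for the predicted Y at x_new in a simple linear regression."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar

    # Two estimated parameters (intercept and slope) leave n - 2 degrees of freedom.
    df = n - 2
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    see = math.sqrt(sse / df)  # standard error of the estimate

    # Standard error of the forecast widens as x_new moves away from the mean of X.
    s_f = see * math.sqrt(1 + 1 / n + (x_new - x_bar) ** 2 / sxx)
    y_hat = intercept + slope * x_new
    return y_hat - t_crit * s_f, y_hat + t_crit * s_f, df


# Hypothetical data with n = 5, so df = 3.
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]
low, high, df = prediction_interval(x, y, x_new=6, t_crit=3.182)
print(df, round(low, 2), round(high, 2))
```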

Linear Regression Assumptions

- A crucial assumption in linear regression is that error terms are independently distributed, implying correlations between them should be zero.

- Constant variance of error terms and no correlation between the independent variable and the error term are typical assumptions.

Simple vs. Multiple Linear Regression

- Simple linear regression models the relationship between one dependent and one independent variable.

- Multiple regression models the relationship between one dependent variable and more than one independent variable.

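ANOVA Table

- F-statistic = mean square regression (MSR) / mean squared error (MSE). In a simple linear regression there is one independent variable, so MSR equals the sum of squares regression: F = 550 / 19.737 ≈ 27.87.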

- The F-statistic tests the fit of the model.
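
The F-statistic calculation from the ANOVA figures above (550 and 19.737) can be reproduced with a short sketch. The function name `f_statistic` and the parameter `k`, the number of independent variables, are illustrative assumptions.

```python
def f_statistic(ssr, mse, k=1):
    """F = MSR / MSE, where MSR = SSR / k and k is the number of independent variables."""
    if k < 1 or mse <= 0:
        raise ValueError("k must be at least 1 and mse must be positive")
    msr = ssr / k  # with k = 1 (simple regression), MSR equals SSR
    return msr / mse


# Figures quoted in the study notes.
f_stat = f_statistic(ssr=550, mse=19.737)
print(round(f_stat, 2))  # 27.87
```

The result differs from the quoted 27.867 only in the third decimal place, which is consistent with the mean squared error having been rounded before division.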

Logarithmic Variables

- Functional forms of simple linear regressions are available to account for logarithmic variables if either or both the dependent and independent variables are logarithmic.

- A log-lin model is appropriate if the dependent variable demonstrates logarithmic behavior, while the independent variable is linear.

- A lin-log model is appropriate if the independent variable shows logarithmic behavior, while the dependent variable is linear.

- A log-log model is appropriate if both the independent and dependent variables are logarithmic.
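
The three functional forms differ only in which variable is log-transformed before an ordinary simple regression is fitted. The sketch below is a hedged illustration: the `transform` and `ols` helpers and the sample data are hypothetical, and the data are constructed so that ln Y is exactly linear in X, the log-lin case.

```python
import math


def transform(x, y, form):
    """Apply the log transformation implied by the functional form."""
    if form == "log-lin":   # ln Y = b0 + b1 * X
        return x, [math.log(yi) for yi in y]
    if form == "lin-log":   # Y = b0 + b1 * ln X
        return [math.log(xi) for xi in x], y
    if form == "log-log":   # ln Y = b0 + b1 * ln X
        return [math.log(xi) for xi in x], [math.log(yi) for yi in y]
    raise ValueError(f"unknown functional form: {form}")


def ols(x, y):
    """Return (intercept, slope) of a simple linear regression."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - slope * x_bar, slope


# Hypothetical data where Y grows by a constant relative amount per unit of X.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [math.exp(0.5 + 0.1 * xi) for xi in x]

b0, b1 = ols(*transform(x, y, "log-lin"))
print(round(b0, 3), round(b1, 3))  # 0.5 0.1
```

In the log-lin case the slope is read as the approximate relative change in Y for a one-unit change in X.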

Correlation Coefficient

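- In a simple linear regression, the correlation coefficient (r) measures the strength of the relationship and equals the square root of R², carrying the sign of the slope coefficient. With R² = 0.599, r = √0.599 = 0.774.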
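
The square-root step above can be checked numerically. In a simple linear regression the correlation coefficient takes the sign of the slope; the sketch assumes a positive slope because the notes report a positive r. The function name `correlation_from_r2` is hypothetical.

```python
import math


def correlation_from_r2(r2, slope):
    """Correlation coefficient in a simple regression: sqrt(R^2) with the slope's sign."""
    if not 0.0 <= r2 <= 1.0:
        raise ValueError("R^2 must lie between 0 and 1")
    return math.copysign(math.sqrt(r2), slope)


# 0.599 comes from the notes; the positive slope is an assumption for illustration.
r = correlation_from_r2(0.599, slope=1.0)
print(round(r, 3))  # 0.774
```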

Least Squares Criterion

- The least squares criterion in a simple regression model minimizes the sum of squared differences between the predicted and actual values of the dependent variable.

- These differences are the vertical distances between the observed data points and the fitted regression line.
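
To make the criterion concrete, the following sketch fits a line by least squares and then confirms that nudging the intercept or slope only increases the sum of squared vertical distances. The data and helper names are made up for illustration.

```python
def sum_squared_errors(x, y, intercept, slope):
    """Sum of squared vertical distances between actual and predicted Y."""
    return sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))


def ols(x, y):
    """Return (intercept, slope) minimizing the sum of squared errors."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    slope = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
             / sum((xi - x_bar) ** 2 for xi in x))
    return y_bar - slope * x_bar, slope


# Hypothetical observations.
x = [1, 2, 3, 4, 5]
y = [2, 4, 5, 4, 5]

b0, b1 = ols(x, y)
best_sse = sum_squared_errors(x, y, b0, b1)

# Any other line, here small perturbations of the fitted one, has a larger SSE.
perturbed = [
    sum_squared_errors(x, y, b0 + d0, b1 + d1)
    for d0 in (-0.1, 0.0, 0.1)
    for d1 in (-0.1, 0.0, 0.1)
    if (d0, d1) != (0.0, 0.0)
]
print(round(b0, 2), round(b1, 2), round(best_sse, 2), round(min(perturbed), 2))
```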
