Questions and Answers

The “Pr(>|t|)” value in regression output is a p-value for the following null hypothesis, H0: the coefficient is not ______________________ (that is, its true value is zero).

significant

Geographically ______________________ regression (GWR): GWR evaluates a local regression model of the dependent variable by fitting a regression equation to every feature in the dataset.

weighted

An alternative to OLS is ______________________ least squares, in which some data points are given more weight when determining which line of best fit minimizes the residuals.

weighted

Changes in the magnitude of parameter estimates across space can indicate a locally changing influence of the independent (explanatory) variables on the dependent (response) ______________________.

GWR yields spatially distributed regression coefficients because the relationships in the data vary through ______________________.

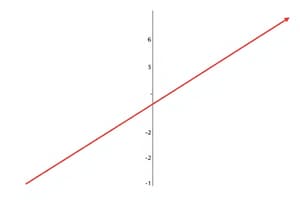

Simple linear regression describes the relationship between a dependent variable (y-var) and a single independent variable (x-var). The regression coefficients 𝛽0 and 𝛽1 are estimated once from all the data in the dataset, giving one global line. 𝛽0 represents the y-intercept, while 𝛽1 represents the ______ of the line.

The error term 𝜀 in the simple linear regression equation accounts for the 'random' error, which is estimated by the residuals; the residuals measure how far each data point lies from the ______.

When calculating model residuals in simple linear regression, if y' is the predicted value of y for a given value of x, then the residual is the difference between y and y'. The residual can be either positive or ______.

Two of the assumptions of regression are that the errors have a normal distribution for each set of values of the independent variables and that the errors are independent of one ______.

In regression, the errors are assumed to have an expected (mean) value of zero; correspondingly, the residuals should have a mean value of ______.

Study Notes

Simple Linear Regression

- Describes the relationship between a dependent (response) variable (y-var) and a single independent (explanatory) variable (x-var); with more than one independent variable it becomes multiple linear regression

- Equation: 𝑦 = 𝛽0 + 𝛽1𝑥 + 𝜀

- 𝛽0: y-intercept, beta naught/zero

- 𝛽1: slope, beta one

- 𝜀: random error (residual), error term
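As a minimal sketch (Python with NumPy is an assumption; the notes name no software), the two coefficients can be estimated in closed form with ordinary least squares: 𝛽1 is the covariance of x and y divided by the variance of x, and 𝛽0 makes the line pass through the point of means. The function name `fit_simple_ols` and the toy data are hypothetical.

```python
import numpy as np

def fit_simple_ols(x, y):
    """Estimate beta0 (intercept) and beta1 (slope) for y = beta0 + beta1*x + eps."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # Slope: sum of cross-deviations divided by sum of squared x-deviations
    beta1 = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    # Intercept: the fitted line passes through (x_mean, y_mean)
    beta0 = y_mean - beta1 * x_mean
    return beta0, beta1

# Hypothetical toy data: y is roughly 2 + 3x plus some noise
x = [0, 1, 2, 3, 4]
y = [2.1, 4.9, 8.2, 10.8, 14.0]
b0, b1 = fit_simple_ols(x, y)
print(f"beta0 = {b0:.3f}, beta1 = {b1:.3f}")  # prints: beta0 = 2.060, beta1 = 2.970
```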

Model Residuals

- Residual: difference between y and y’ (predicted value of y for a given value of x)

- Residuals can be positive or negative

- Goal: to minimize the distance from the data points to the model; OLS does this by minimizing the sum of squared residuals
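A short NumPy sketch (tooling and data are assumptions for illustration) computes y′ and the residuals y − y′ for a fitted line. It shows residuals of both signs and that, for an OLS fit with an intercept, they sum to zero up to floating-point error; the sum of squared residuals is the quantity OLS minimizes.

```python
import numpy as np

# Hypothetical data
x = np.array([0, 1, 2, 3, 4], dtype=float)
y = np.array([2.1, 4.9, 8.2, 10.8, 14.0])

# Fit y' = beta0 + beta1*x; np.polyfit with deg=1 returns [slope, intercept]
beta1, beta0 = np.polyfit(x, y, deg=1)
y_pred = beta0 + beta1 * x      # y' for each observed x
residuals = y - y_pred          # positive: point above the line; negative: below

print(residuals)
print("sum of residuals:", residuals.sum())               # ~0 for OLS with an intercept
print("sum of squared residuals:", np.sum(residuals ** 2))  # what OLS minimizes
```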

Assumptions of Regression

- Errors have a normal distribution for each set of values of independent variables

- Errors have an expected (mean) value of zero

- Variance of errors is constant for all values of the independent variables (homoscedasticity)

- Errors are independent of one another (no autocorrelation)
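The assumptions can be eyeballed on the residuals. This NumPy sketch uses simulated (hypothetical) data that satisfies them and reports the mean residual, a crude variance ratio between the lower and upper halves of x as a constant-variance check, and the Durbin-Watson statistic as an independence check. These are informal checks chosen for illustration, not formal tests; normality is left out because it is usually checked with a Q-Q plot or a dedicated test.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Hypothetical data generated to satisfy the assumptions:
# normal, mean-zero, constant-variance, independent errors
n = 200
x = np.sort(rng.uniform(0, 10, n))
y = 1.5 + 0.8 * x + rng.normal(0.0, 1.0, n)

beta1, beta0 = np.polyfit(x, y, deg=1)
e = y - (beta0 + beta1 * x)  # residuals, ordered by x

# Mean of residuals: should be ~0
mean_resid = e.mean()

# Constant variance (crude check): compare residual variance
# for the lower and upper halves of x; near 1 if variance is constant
half = n // 2
var_ratio = e[half:].var() / e[:half].var()

# Independence: Durbin-Watson statistic on residuals in x order;
# values near 2 suggest no first-order autocorrelation
dw = np.sum(np.diff(e) ** 2) / np.sum(e ** 2)

print(f"mean residual = {mean_resid:.4f}")
print(f"variance ratio (upper/lower half) = {var_ratio:.2f}")
print(f"Durbin-Watson = {dw:.2f}")
```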

Multiple Linear Regression

- Makes predictions for a response variable given several explanatory variables

- Equation: 𝑦 = 𝛽0 + 𝛽1𝑥1 + 𝛽2𝑥2 + ⋯ + 𝛽n𝑥n + 𝜀 (the prediction 𝑦′ is the same expression without 𝜀)

- Multiple independent variables for a single dependent variable
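The multiple regression equation can be fitted the same way by stacking the explanatory variables into a design matrix with a leading column of ones for 𝛽0. This NumPy sketch (the helper name and the noise-free data are hypothetical) uses `np.linalg.lstsq`, so it should recover the coefficients used to build y.

```python
import numpy as np

def fit_multiple_ols(X, y):
    """Return [beta0, beta1, ..., betan] for y = beta0 + beta1*x1 + ... + betan*xn + eps."""
    X = np.asarray(X, dtype=float)
    # Prepend a column of ones so the first coefficient is the intercept beta0
    design = np.column_stack([np.ones(len(X)), X])
    # Least-squares solution: minimizes the sum of squared residuals
    beta, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return beta

# Hypothetical data built exactly from y = 1 + 2*x1 - 0.5*x2 (no noise)
X = np.array([[0, 0], [1, 2], [2, 1], [3, 5], [4, 3]], dtype=float)
y = 1 + 2 * X[:, 0] - 0.5 * X[:, 1]
beta = fit_multiple_ols(X, y)
print(np.round(beta, 6))  # recovers [beta0, beta1, beta2] = [1, 2, -0.5]
```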

Ordinary and Weighted Least Squares

- Ordinary Least Squares (OLS): determines the coefficients that minimize the sum of squared residuals over all data points

  - Each data point is treated equally

- Weighted Least Squares (WLS): minimizes a weighted sum of squared residuals, giving more weight to certain data points

- Geographically Weighted Regression (GWR): a special type of WLS that uses proximity to weight points (features closer to the target feature get more weight)
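The difference between OLS and WLS can be sketched by scaling each row of the regression by the square root of its weight, which turns WLS into an ordinary least-squares problem. The helper name, data, and weights are hypothetical: equal weights reproduce OLS, while down-weighting one outlying point pulls the fit back toward the other four points.

```python
import numpy as np

def fit_wls(x, y, w):
    """Weighted least squares: minimize sum_i w_i * (y_i - y'_i)**2."""
    design = np.column_stack([np.ones(len(x)), np.asarray(x, dtype=float)])
    sw = np.sqrt(np.asarray(w, dtype=float))
    # Multiplying each row by sqrt(w_i) converts WLS into an OLS problem
    beta, *_ = np.linalg.lstsq(design * sw[:, None],
                               np.asarray(y, dtype=float) * sw, rcond=None)
    return beta  # [beta0, beta1]

# Hypothetical data: the first four points lie on y = 1 + 2x, the last is far off
x = np.array([0, 1, 2, 3, 4], dtype=float)
y = np.array([1.0, 3.0, 5.0, 7.0, 20.0])

ols = fit_wls(x, y, np.ones(5))              # equal weights -> ordinary least squares
wls = fit_wls(x, y, [1, 1, 1, 1, 0.01])      # down-weight the outlying point
print("OLS:", ols, " WLS:", wls)
```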

Geographically Weighted Regression (GWR)

- Evaluates a local regression model of the dependent variable by fitting a regression equation to every feature in the dataset

- Constructs separate equations by incorporating the response and explanatory variables of the features falling within a neighborhood (bandwidth) of each target feature

- Two important interpretations:

  - Changes in the magnitude of parameter estimates across space can indicate a locally changing influence of the independent variables on the dependent variable

  - Changes in GWR results as the bandwidth varies can indicate the effect of spatial scale on the relationship between the independent and dependent variables
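A bare-bones sketch of the GWR idea (one weighted regression per feature, with weights that decay with distance from that feature) follows. The Gaussian kernel, the fixed bandwidth of 1.5 map units, the function name `gwr_local_coefficients`, and the simulated data are all assumptions for illustration; dedicated GWR software typically offers several kernels and can select the bandwidth automatically (for example, by minimizing AICc or cross-validation error).

```python
import numpy as np

def gwr_local_coefficients(coords, X, y, bandwidth):
    """Fit one weighted regression per feature; weights decay with distance (Gaussian kernel)."""
    coords = np.asarray(coords, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones(len(y)), np.asarray(X, dtype=float)])
    local_betas = []
    for target in coords:
        d = np.linalg.norm(coords - target, axis=1)  # distance to the target feature
        w = np.exp(-0.5 * (d / bandwidth) ** 2)      # nearby features get more weight
        sw = np.sqrt(w)
        beta, *_ = np.linalg.lstsq(design * sw[:, None], y * sw, rcond=None)
        local_betas.append(beta)
    return np.array(local_betas)  # one row [beta0, beta1, ...] per feature

# Hypothetical data: the slope of y on x grows from west to east
rng = np.random.default_rng(seed=1)
n = 300
coords = rng.uniform(0, 10, size=(n, 2))   # (easting, northing)
x = rng.uniform(0, 5, size=n)
true_slope = 1.0 + 0.3 * coords[:, 0]      # relationship changes across space
y = 2.0 + true_slope * x + rng.normal(0, 0.5, size=n)

betas = gwr_local_coefficients(coords, x, y, bandwidth=1.5)
west = coords[:, 0] < 3
east = coords[:, 0] > 7
print("mean local slope, west:", round(float(betas[west, 1].mean()), 2))
print("mean local slope, east:", round(float(betas[east, 1].mean()), 2))
```

Because the simulated slope increases from west to east, the local slope estimates should be noticeably larger in the east. With a very large bandwidth the weights become nearly equal everywhere, so the local slopes collapse toward the single global OLS slope, which is one way to see how results depend on spatial scale.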

Why Use GWR?

- Suitable for modeling spatially non-stationary relationships, i.e., relationships that vary across the study area

- Can address questions like: Is the relationship between educational attainment and income consistent across the study area?
