Questions and Answers

What is the purpose of the cost function J in linear regression?

- To calculate the size of the house X

- To determine the slope of the straight line fit

- To visualize how changing theta 1 impacts the cost

- To find the best parameters theta 0 and theta 1 for the hypothesis function (correct)

How does setting theta 0 to 0 simplify the optimization problem for linear regression?

- It eliminates the need for a cost function

- It makes it harder to fit a straight line

- It reduces the problem to optimizing theta 1 only (correct)

- It changes the hypothesis function

What does plotting the cost function J against different values of theta 1 help visualize?

- The position of theta 0

- The size of the house X

- The accuracy of the hypothesis function

- How changing theta 1 impacts the cost (correct)

Why is minimizing J of theta 1 the objective of the learning algorithm in linear regression?

How do different values of theta 1 relate to the straight line fits in linear regression?

The cost function J is used as the optimization objective to find the best values for theta 0 and theta 2 in linear regression.

The hypothesis function H of X in linear regression is solely dependent on the parameter theta 1.

Each specific theta 1 value corresponds to a unique cost function J value in linear regression.

Minimizing the cost function J with respect to theta 1 is the primary goal of the learning algorithm in linear regression.

Plotting the cost function J against different values of theta 0 helps visualize how changing theta 0 impacts the cost in linear regression.

Study Notes

- The video discusses the concept of the cost function and its importance in fitting a straight line to data for linear regression.

- The cost function J is used as the optimization objective to find the best parameters theta 0 and theta 1 for the hypothesis function.

- A simplified hypothesis function is introduced where theta 0 is set to 0, reducing the problem to optimizing theta 1 only.

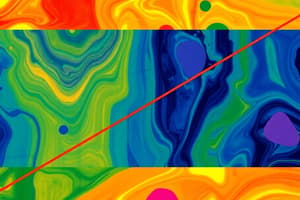

- For a fixed value of theta 1, the hypothesis function H of X is a function of the size of the house X. The cost function J, by contrast, is a function of the parameter theta 1, which controls the slope of the straight line.

- Different values of theta 1 result in different straight line fits to the data, each corresponding to a specific J value.

- Plotting the cost function J against different values of theta 1 helps visualize how changing theta 1 impacts the cost.

- Minimizing J of theta 1 is the objective of the learning algorithm, aiming to find the best-fitting straight line for the data.
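
The notes above can be illustrated with a minimal sketch. It assumes the usual squared-error form of the cost, J(theta 1) = (1 / 2m) * sum of (H(x_i) - y_i)^2, which these notes do not state explicitly, and it uses a made-up three-point dataset. The function names `hypothesis` and `cost` are hypothetical, and the search over a handful of candidate slopes stands in for a real learning algorithm.

```python
def hypothesis(theta1, x):
    """Simplified hypothesis with theta 0 fixed at 0: a line through the origin."""
    return theta1 * x


def cost(theta1, xs, ys):
    """Squared-error cost J(theta 1), assumed here to be (1 / 2m) * sum of squared errors."""
    m = len(xs)
    squared_errors = ((hypothesis(theta1, x) - y) ** 2 for x, y in zip(xs, ys))
    return sum(squared_errors) / (2 * m)


# Hypothetical training set: house sizes (xs) and prices (ys), chosen so y = x exactly.
xs = [1.0, 2.0, 3.0]
ys = [1.0, 2.0, 3.0]

# Each candidate slope gives a different straight line and a different J value.
candidates = [0.0, 0.5, 1.0, 1.5, 2.0]
costs = {theta1: cost(theta1, xs, ys) for theta1 in candidates}

for theta1, j in costs.items():
    print(f"theta1 = {theta1:.1f}  ->  J = {j:.4f}")

# The best-fitting line among the candidates is the one with the smallest J.
best_theta1 = min(costs, key=costs.get)
print("best theta1 among candidates:", best_theta1)
```

Plotting the printed pairs with theta 1 on the horizontal axis and J on the vertical axis gives the bowl-shaped curve described in the notes. For this dataset the lowest point is at theta 1 = 1, where the line passes through every point and J is 0.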
