Questions and Answers

What is the fundamental language that computers use?

- Binary (correct)

- Assembly

- Hexadecimal

- Octal

Which digit is NOT part of the binary system?

- 0

- 1

- 2 (correct)

- Both 0 and 1

Which counting method is described as counting on your fingers?

- Hexadecimal notation

- Unary notation (correct)

- Decimal notation

- Binary notation

What fundamental limitation do computers have compared to humans in counting?

In binary, how would the number two be represented?

Which technology allows for the creation of images, artwork, and movies with only zeros and ones?

What is the typical way humans count in contrast to computers?

Why might a computer write out leading zeros when representing numbers?

What decimal value does binary 000 represent?

Study Notes

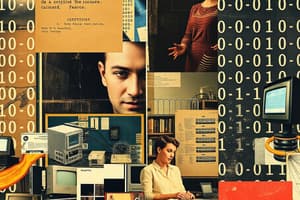

Computers and Binary Language

- Computers use a binary language consisting of only two digits: 0 and 1.

- This binary system allows computers to perform complex tasks, including calculations, text processing, image creation, and more.

- Humans use a decimal system with ten digits (0-9) for counting, while computers use a binary system with only two digits.

- To represent numbers in binary, computers use a sequence of 0s and 1s.

- For example, when written with three binary digits (bits), decimal 0 is 000 and decimal 1 is 001; the leading zeros only pad the number to a fixed width and do not change its value.

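- Computers represent larger numbers by using more binary digits: each added digit doubles the number of distinct values, so three digits cover 0 to 7 and eight digits cover 0 to 255.

- So even though each digit can only be 0 or 1, computers can count as high as needed by adding more digit positions.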

- The ability of computers to represent numbers in binary using just 0 and 1 is a foundational aspect of computer science.
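The conversion described in these notes can be sketched in a few lines of Python. This is a minimal illustration that handles only non-negative integers and uses a default width of three digits to match the 000/001 examples. `to_binary` and `max_count` are hypothetical helper names, not part of any library. The sketch repeatedly divides by 2 and collects the remainders, which produces the binary digits from lowest to highest. It then pads the result with leading zeros.

```python
def to_binary(n: int, width: int = 3) -> str:
    """Return n in base 2, padded with leading zeros to at least `width` digits."""
    if n < 0:
        raise ValueError("this sketch handles non-negative integers only")
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next lowest binary digit
        n //= 2                    # move on to the next higher digit position
    bits = "".join(reversed(digits)) or "0"  # zero has no remainders, so use "0"
    return bits.rjust(width, "0")  # pad with leading zeros; never truncates


def max_count(width: int) -> int:
    """Number of distinct values that `width` binary digits can represent."""
    return 2 ** width  # each extra digit doubles the count


# Count from 0 to 7 using three binary digits.
for value in range(8):
    print(value, to_binary(value))
print(max_count(3), max_count(8))
```

Python's built-in `format(n, "03b")` produces the same padded string. The explicit loop is there only to make the divide-by-two steps visible. If a number needs more digits than `width`, `to_binary` returns the longer string instead of cutting it off. For example, 8 becomes 1000.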
