Podcast

Questions and Answers

Which of the following is the primary function of non-leaf nodes in a decision tree?

Which of the following is the primary function of non-leaf nodes in a decision tree?

- To represent the predicted outcome of a case.

- To perform a test on an attribute's value. (correct)

- To indicate the end of the classification process.

- To store the final classification decision.

What is the process for classifying a new instance using a decision tree?

What is the process for classifying a new instance using a decision tree?

- Begin at the root, apply tests at each node, and follow the branch corresponding to the outcome until a decision is reached. (correct)

- Apply all tests simultaneously and aggregate the results to determine the classification.

- Choose the shortest path from root to leaf based on instance attributes.

- Start at a random node and traverse until a leaf is found.

What characteristics make decision trees valuable in modern analytics?

What characteristics make decision trees valuable in modern analytics?

- Their exceptional predictive accuracy, surpassing most newer methods.

- Their wide availability, efficient implementations, and ability to handle various data types and missing data. (correct)

- Their ability to work exclusively with numerical attributes and complete datasets.

- Their complex structure, which enables them to model highly non-linear relationships in data.

In a decision tree, what do the leaves represent?

In a decision tree, what do the leaves represent?

When classifying an instance with a decision tree, what action is taken if a leaf node is encountered?

When classifying an instance with a decision tree, what action is taken if a leaf node is encountered?

Why is using an attribute like PESEL (or SSN) problematic for decision tree learning, despite yielding maximal information gain?

Why is using an attribute like PESEL (or SSN) problematic for decision tree learning, despite yielding maximal information gain?

What is a major drawback of using information gain as the sole criterion for selecting attributes in decision tree learning?

What is a major drawback of using information gain as the sole criterion for selecting attributes in decision tree learning?

What is the purpose of using gain ratio instead of information gain in decision tree learning?

What is the purpose of using gain ratio instead of information gain in decision tree learning?

The gain ratio is calculated by dividing the information gain Hgain(T) by what?

The gain ratio is calculated by dividing the information gain Hgain(T) by what?

What problem can arise when using gain ratio, and what is a common workaround?

What problem can arise when using gain ratio, and what is a common workaround?

According to the content, how does one mitigate the side effect produced as a problem of gain ratio?

According to the content, how does one mitigate the side effect produced as a problem of gain ratio?

Besides using gain ratio, what is another solution mentioned in the content to address the issue of tests with many outcomes?

Besides using gain ratio, what is another solution mentioned in the content to address the issue of tests with many outcomes?

What does the content suggest about complex or 'hacky' solutions often found in decision tree learning?

What does the content suggest about complex or 'hacky' solutions often found in decision tree learning?

Which of the following joint probability distributions satisfies the conditions where A and Y are statistically independent, B and Y are also statistically independent, but A and B together determine the value of Y?

Which of the following joint probability distributions satisfies the conditions where A and Y are statistically independent, B and Y are also statistically independent, but A and B together determine the value of Y?

Why might early stopping criteria in decision tree learning sometimes be ineffective?

Why might early stopping criteria in decision tree learning sometimes be ineffective?

What is the primary purpose of pruning in decision tree construction?

What is the primary purpose of pruning in decision tree construction?

How does the pruning strategy typically compare to the test selection criterion in terms of its impact on the quality of a decision tree?

How does the pruning strategy typically compare to the test selection criterion in terms of its impact on the quality of a decision tree?

During the pruning process, what label is assigned to a leaf node that replaces a pruned subtree?

During the pruning process, what label is assigned to a leaf node that replaces a pruned subtree?

In the iterative pruning algorithm described, what is the order in which nodes are considered for pruning?

In the iterative pruning algorithm described, what is the order in which nodes are considered for pruning?

Why is the error rate on the training set not a good estimate for pruning a decision tree?

Why is the error rate on the training set not a good estimate for pruning a decision tree?

Which of the following statements is true regarding estimating classification error during pruning?

Which of the following statements is true regarding estimating classification error during pruning?

What is the first step in the 1-SE rule for cost-complexity pruning?

What is the first step in the 1-SE rule for cost-complexity pruning?

What logical step follows after selecting the tree with the smallest validation error?

What logical step follows after selecting the tree with the smallest validation error?

How does the 1-SE rule determine which tree to prune?

How does the 1-SE rule determine which tree to prune?

What is a significant advantage of pruning a decision tree?

What is a significant advantage of pruning a decision tree?

Which method is NOT mentioned as a technique for handling missing values in decision trees?

Which method is NOT mentioned as a technique for handling missing values in decision trees?

What is the primary function of surrogate splits in decision tree algorithms?

What is the primary function of surrogate splits in decision tree algorithms?

When considering numerical attributes in decision trees, how is a splitting point determined?

When considering numerical attributes in decision trees, how is a splitting point determined?

What is a common issue that arises with missing values in datasets when applying machine learning algorithms?

What is a common issue that arises with missing values in datasets when applying machine learning algorithms?

What is the purpose of surrogate splits in decision trees?

What is the purpose of surrogate splits in decision trees?

What splitting criterion does the CART algorithm use?

What splitting criterion does the CART algorithm use?

Which algorithm is considered historically the first in decision trees?

Which algorithm is considered historically the first in decision trees?

What stop criterion does the CHAID algorithm use?

What stop criterion does the CHAID algorithm use?

Which of the following statements about C4.5 is correct?

Which of the following statements about C4.5 is correct?

What technique is used in CART to handle missing values?

What technique is used in CART to handle missing values?

Which development allows decision trees to accommodate numerical target variables?

Which development allows decision trees to accommodate numerical target variables?

Which of the following is a characteristic of the ID3 algorithm?

Which of the following is a characteristic of the ID3 algorithm?

Which statement regarding surrogate splits is true?

Which statement regarding surrogate splits is true?

What is the main disadvantage of the CHAID algorithm?

What is the main disadvantage of the CHAID algorithm?

Which of the following is NOT one of the primary approaches to decision tree pruning discussed?

Which of the following is NOT one of the primary approaches to decision tree pruning discussed?

In validation error based pruning, what is the primary purpose of the validation set?

In validation error based pruning, what is the primary purpose of the validation set?

During validation error based pruning, the algorithm iterates through nodes in which order?

During validation error based pruning, the algorithm iterates through nodes in which order?

What is the pruning criterion in validation error based pruning?

What is the pruning criterion in validation error based pruning?

What is a significant disadvantage of validation error based pruning, especially for smaller datasets?

What is a significant disadvantage of validation error based pruning, especially for smaller datasets?

In the context of pruning based on confidence intervals, what does 'nE' represent?

In the context of pruning based on confidence intervals, what does 'nE' represent?

According to Quinlan's method from 1987, what statistical distribution is used to model classification errors in leaves?

According to Quinlan's method from 1987, what statistical distribution is used to model classification errors in leaves?

When using normal approximation for confidence intervals in pruning, what is a limitation?

When using normal approximation for confidence intervals in pruning, what is a limitation?

Flashcards

Decision Trees

Decision Trees

A machine learning algorithm that uses a tree-like model for decision making.

Leaf Node

Leaf Node

A terminal node in a decision tree representing a decision outcome.

Non-Leaf Node

Non-Leaf Node

A node in a decision tree that contains a test or condition.

Classification Process

Classification Process

Signup and view all the flashcards

Implementation Versatility

Implementation Versatility

Signup and view all the flashcards

Information Gain

Information Gain

Signup and view all the flashcards

PESEL as a Test

PESEL as a Test

Signup and view all the flashcards

H(Y | PESEL)

H(Y | PESEL)

Signup and view all the flashcards

Gain Ratio

Gain Ratio

Signup and view all the flashcards

Problem with Information Gain

Problem with Information Gain

Signup and view all the flashcards

H(T) Low Problem

H(T) Low Problem

Signup and view all the flashcards

Average Information Gain

Average Information Gain

Signup and view all the flashcards

Binary Splits Solution

Binary Splits Solution

Signup and view all the flashcards

Joint Probability Distribution

Joint Probability Distribution

Signup and view all the flashcards

Statistical Independence

Statistical Independence

Signup and view all the flashcards

Pruning Decision Trees

Pruning Decision Trees

Signup and view all the flashcards

Majority Class

Majority Class

Signup and view all the flashcards

Pruning Algorithm Steps

Pruning Algorithm Steps

Signup and view all the flashcards

Classification Error Estimation

Classification Error Estimation

Signup and view all the flashcards

Early Stopping Criteria

Early Stopping Criteria

Signup and view all the flashcards

Node N in Pruning

Node N in Pruning

Signup and view all the flashcards

Validation Set

Validation Set

Signup and view all the flashcards

Pruning Due to Error

Pruning Due to Error

Signup and view all the flashcards

Advantages of Validation Pruning

Advantages of Validation Pruning

Signup and view all the flashcards

Disadvantage of Validation Pruning

Disadvantage of Validation Pruning

Signup and view all the flashcards

Quinlan's Confidence Interval Method

Quinlan's Confidence Interval Method

Signup and view all the flashcards

Exact Binomial Interval

Exact Binomial Interval

Signup and view all the flashcards

Normal Approximation

Normal Approximation

Signup and view all the flashcards

Cost-complexity pruning

Cost-complexity pruning

Signup and view all the flashcards

1-SE rule

1-SE rule

Signup and view all the flashcards

Numerical attributes in trees

Numerical attributes in trees

Signup and view all the flashcards

Potential splitting points

Potential splitting points

Signup and view all the flashcards

Missing values

Missing values

Signup and view all the flashcards

Weighting (Quinlan)

Weighting (Quinlan)

Signup and view all the flashcards

Surrogate splits

Surrogate splits

Signup and view all the flashcards

Missing Values in Decision Trees

Missing Values in Decision Trees

Signup and view all the flashcards

Weighted Voting

Weighted Voting

Signup and view all the flashcards

ID3 Algorithm

ID3 Algorithm

Signup and view all the flashcards

CHAID Algorithm

CHAID Algorithm

Signup and view all the flashcards

CART Algorithm

CART Algorithm

Signup and view all the flashcards

C4.5 Algorithm

C4.5 Algorithm

Signup and view all the flashcards

Regression Trees

Regression Trees

Signup and view all the flashcards

Model Trees

Model Trees

Signup and view all the flashcards

Missing Values Handling

Missing Values Handling

Signup and view all the flashcards

Study Notes

Decision Trees - A Classic ML Algorithm

- Decision trees are a classic machine learning algorithm.

- They were one of the first machine learning algorithms developed in the 1970s.

- They are still used today.

- Newer methods are often more accurate.

- Many software packages include implementations of decision trees.

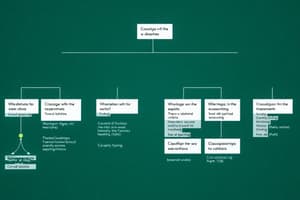

Decision Trees - What are They?

- Decision trees use a tree-like structure to classify instances.

- Leaves contain decisions.

- Non-leaf nodes contain tests.

- Classification process begins at the root.

- If the node is a leaf, the decision is returned.

- If it's a test node, evaluate the test and move down the corresponding branch.

- The process repeats recursively until a leaf node is reached.

Decision Trees - Background

- Decision trees are decent predictors.

- Good implementations are efficient and can handle various data types.

- An area of active research in the 1980s and 1990s.

- Many research papers were produced.

- Modern methods often combine several decision trees creating ensemble methods.

- Bagging, boosting and random forests are some ensemble methods.

- Methods are used for explainable machine learning.

Learning Decision Trees - High Level Algorithm

- If all records in a dataset have the same class, return a leaf node with that class.

- Choose a test for the root of the tree.

- Split the dataset into subsets based on the test's outcomes.

- Recursively build subtrees for each outcome until all records are classified.

How Decision Trees Partition the Sample Space

- Tests in decision trees involve single variables.

- They divide the sample space into (hyper)rectangles.

- The divisions are based on the values of single variables.

Choosing a Test in the Root of the Tree

- Many methods exist for selecting the best root test.

- A popular task is to pick a test which provides the most information.

- A test which provides the largest decrease in uncertainty about the target variable is also useful.

Basics of Information Theory

- Shannon's Information Theory defines the concept of entropy.

- A random variable X has distribution P(X) = (p1, ..., pk).

- Entropy of X is measured as H(X) = -Σi=1k pi log pi .

- Entropy measures the amount of information or uncertainty about a variable.

- This notion is an important concept.

Properties of Entropy

- 0 ≤ H(X) ≤ log k

- H(X) = 0 if one probability is 1 and all others are 0 (minimal uncertainty).

- H(X) = log k if all probabilities are equal (maximal uncertainty).

- Interpretation: average number of bits learned, given the outcome of a variable X.

Joint Entropy

- Joint entropy of two variables X and Y is the entropy of their joint distribution P(X, Y).

- H(X, Y) = − Σx Σy P(x, y) log P(x, y).

- H(X, Y) ≤ H(X) + H(Y).

- H(X, Y) = H(X) + H(Y) if and only if X and Y are independent.

Mutual Information

- Mutual information of X and Y is the difference between the entropy and the sum of entropies of X and Y

- I(X, Y) = H(X) + H(Y) – H(X, Y).

- Mutual information measures the amount of information X and Y share.

- I(X, Y) = 0 if X and Y are independent.

- I(X, X) = H(X).

Conditional Entropy

- Conditional entropy of X given Y = y is

- H(X|Y = y) = − Σx P(x|Y = y) log P(x|Y = y).

- The conditional entropy H(X|Y) takes the sum of each conditional entropy, multiplied by the probability of each output value of Y

- H(X|Y) = Σy P(Y = y)H(X|Y = y).

- If X ⊥ Y, then H(X|Y) = H(X).

Conditional Entropy – Properties

- If X ⊥ Y, then H(X|Y) = H(X) and H(Y|X) = H(Y).

- H(X|Y) ≤ H(X).

- H(X|Y) = H(X, Y) − H(Y).

Back to Decision Trees - Test Selection Criteria

- Select a test which offers the most information about Y.

- The aim is a test with the largest decrease in uncertainty about Y.

Information Gain

- Class entropy before the split: H(Y).

- Class entropy after the split on a test T is H(Y|T).

- Information gain is measured by Hgain(T) = H(Y) − H(Y|T).

Information Gain – Properties

- Information gain is non-negative.

- Information gain of 0 means Y is independent of T.

- Information gain is maximal if Y is a function of T (no more uncertainty).

Problems with Information Gain

- Information gain has a bias toward features with many values.

- A feature with unique values for each record could result in a high information gain but not be very useful.

Tests with Many Outcomes – Solution 1: Gain Ratio

- Gain ratio is a method proposed by Quinlan to address the bias in information gain which prefers attributes with many outcomes..

- Hratio(T) is calculated as Hgain(T)/H(T).

Gain Ratio – A Problem

- Gain ratio still has a side effect where a low entropy test may be selected, without giving much predictive value.

- A solution to counteract the bias in gain ratio is to average and select the gain ratio which is above the average.

Tests with Many Outcomes – Solution 2: Use Only Binary Splits

- The CART algorithm uses only binary tests.

- Numerical attributes are split using tests of the form X < a.

- Categorical attributes use tests of the form X ∈ B, where B ⊆ Dom(X).

Other Splitting Criteria - The Gini Index

- The Gini index is an alternative to entropy and similar.

- Gini(X) = 1 − Σi=1k pi2, where pi are the probabilities of each category.

- Gini(x) = 0 means minimum uncertainty and Gini(x )=1- means maximum uncertainty.

Other Splitting Criteria - The χ2 Test

- The chi-square test assesses independence between the target variable and a given test.

- A lower \p-value indicates a significant relationship.

- A popular method in the CHAID algorithm

Other Splitting Criteria

- Other splitting criteria exist in the literature.

- Many work equally well in practice.

Overfitting

- Overfitting occurs when a model adapts too closely to a specific dataset and fails to generalize to new data.

- A decision tree is likely to overfit if it is too complex for the data.

Statistical Issues in Decision Tree Learning

- As the decision tree gets deeper, the training sample size decreases.

- The decisions at deeper levels may be based on random factors, rather than true relationships between attributes.

- This leads to overfitting, because the model may be too influenced by the particularities of the training dataset.

Early Stopping Criteria

- Early stopping means stopping the tree construction early, to prevent overfitting.

- Criteria for stopping include: tree height exceeding a user-pecified value, number of training cases below some cutoff point, the gain being below a given threshold, not significant statistical correlation,

Pruning Decision Trees

- Pruning removes nodes or parts of the decision tree deemed unnecessary, so that the model does not overfit.

- Many approaches are there, including those that use different measurements of errors for different levels of accuracy or statistical confidence intervals for a node/subtree/leaf.

Pruning - Overview

- Pruning subtrees from the decision tree, by labeling leaves with the majority class, can result in smaller and statistically sound trees.

Validation Error Based Pruning

- Split the training data into training and validation sets.

- Validate the model to find the proper cost/complexity trade-off for the pruned tree.

- The algorithm iterates over nodes level by level.

- Prune nodes which increase validation error.

Pruning Based on Confidence Intervals

- Uses the original training data to estimate confidence intervals around errors.

- A standard pruning algorithm is applied based on the confidence level on the validation error.

Cost-Complexity Pruning

- Cost-complexity pruning builds a full tree that is then pruned back in stages with increasing complexity penalties or costs.

- The goal is to find a sequence of optimal pruned trees.

- The optimal subtree is selected to balance cost and complexity in the entire dataset.

Cost-Complexity Pruning – The 1-SE Rule

- The goal is to find optimal pruning by setting an alpha parameter for the pruning cost based on the best trade-off between the validation error and the standard deviation for other trees in the nested sequence.

Cost-Complexity Pruning – Example

- Decision tree pruning can often improve the accuracy of a model, particularly if overfitting occurs in the training dataset (i.e., it produces too much accurate predictions on the training set, but the accuracy decreases on an independent test).

Other Aspects of Decision Tree Learning

- Numerical attributes and handling missing values are different parts of how decision trees are built. These are additional characteristics of how decision trees are constructed.

Numerical Attributes

- Selecting the best split point for a numerical attribute involves sorting X values and selecting midpoints between consecutive different values as potential split points in the tree.

Missing Values

- Data often contains missing values, a problem for many algorithms.

- Techniques such as weighting and surrogate splits are employed to deal with missing values in decision trees, such that this data loss is compensated somehow.

Missing Values - Weighting

- Missing values in the test attribute are ignored when computing the splitting criteria.

- During the classification phase of a new record, records with missing values in the test attribute are sent down all branches of the tree, weighted according to the distribution of the training set outcomes.

Missing Values - Surrogate Splits

- Similar tests with strong correlations can be examined when a test node has missing values.

- This technique helps in tree interpretability, showing which attributes are equally important, in order to evaluate the data and find the causality relationship.

Concrete Algorithms – ID3

- One algorithm developed for decision trees that is important to review is ID3, developed by Quinlan

- Splitting criteria are based on information gain.

- This approach applies No pruning/early stopping to prevent overfitting, leading to complex trees.

Concrete Algorithms - CHAID

- CHAID (Chi-square Automatic Interaction Detector) was developed by Kass (1980).

- It is used in some business software due to its suitability for analyzing data in situations where there is little prior knowledge to start with.

Concrete Algorithms - CART

- CART (Classification and Regression Trees) was developed by Breiman et al. in 1984.

- This is a popular algorithm that uses Gini gain splitting criterion, with pruning procedures for better generalization, which can prevent overfitting.

Concrete Algorithms - C4.5

- C4.5 was another important development by Quinlan in 1986.

- Probably the most popular algorithm, it is based on the gain ratio and confidence interval-based pruning

- The most popular, but the details were not published before

Decision Trees – Further Developments

- Regression trees: deal with real numbers instead of categorical classes.

- More complex leaves: Model trees, which replace leaves with regression models or other models (e.g., a Naive Bayes classifier).

- More complex splits: allow splitting based on non-linear or non-unique attribute values (e.g., with conjunctions of variables, not just one variables at a time).

- Unbiased splits: use statistics-based methods to improve test selection, instead of directly using information gain

Regression Trees

- Decision trees can handle numerical target variables.

- Breiman, et al use variance as a splitting criterion within regression trees.

- Alternative methods of representing data points in leaves are also used within the algorithm

More Complex Leaves - Model Trees

- In model trees, each leaf node contains some class/regression model.

- (e.g.) Naive Bayesian classifiers, or logistic regression models. This method better represents the data at lower levels of complexity within the tree.

More Complex Splits

- Allows splitting on multiple variables at once.

- This may include linear or non-linear decisions, as long as they provide better results when predicting the target attribute.

Unbiased Decision Trees

- Splitting criteria are biased in favor of tests with many outcomes.

- Some criteria are computationally easier to use.

Unbiased Decision Trees - A Better Approach

- New unbiased approaches based on permutational tests, as presented in a 2006 publication by Hothorn, Hornik and Zeileis, in the context of R's party package.

Literature

- Some critical references concerning Decision Trees for review and further reading, as found in the slides.

Studying That Suits You

Use AI to generate personalized quizzes and flashcards to suit your learning preferences.