Podcast

Questions and Answers

Which of the following is the primary goal of classification in data mining?

Which of the following is the primary goal of classification in data mining?

- To reduce the number of independent variables.

- To identify relationships between independent variables.

- To assign a class label to unseen records as accurately as possible. (correct)

- To predict the attributes of an observation.

Classification models are built using unsupervised learning techniques.

Classification models are built using unsupervised learning techniques.

False (B)

What is the term for the process of building a classification model from training data using a learning algorithm?

What is the term for the process of building a classification model from training data using a learning algorithm?

Induction

In decision trees, nodes associated with a question or query on an attribute are referred to as ______ nodes.

In decision trees, nodes associated with a question or query on an attribute are referred to as ______ nodes.

Which of the following is a common method for splitting nominal attributes in decision trees?

Which of the following is a common method for splitting nominal attributes in decision trees?

In decision tree induction, splitting strategies for continuous attributes automatically determine the splitting threshold without needing explicit determination.

In decision tree induction, splitting strategies for continuous attributes automatically determine the splitting threshold without needing explicit determination.

In the context of decision trees, what term describes the approach of selecting the attribute that provides the highest gain at a given node during the splitting process?

In the context of decision trees, what term describes the approach of selecting the attribute that provides the highest gain at a given node during the splitting process?

A measure based on the proportions across all classes that quantifies how heterogeneous (or “impure”) a certain mixture of classes is is known as ______.

A measure based on the proportions across all classes that quantifies how heterogeneous (or “impure”) a certain mixture of classes is is known as ______.

Match the impurity measures with their formulas:

Match the impurity measures with their formulas:

What condition signifies maximum entropy in the context of decision trees?

What condition signifies maximum entropy in the context of decision trees?

A node in a decision tree with only one class represented has maximum entropy.

A node in a decision tree with only one class represented has maximum entropy.

What metric is used to quantify the relative reduction in total entropy (i.e., the improvement in node purity after a split)?

What metric is used to quantify the relative reduction in total entropy (i.e., the improvement in node purity after a split)?

In the context of stopping criterion for growing a decision tree, if a node has only one class, it is called ______ node.

In the context of stopping criterion for growing a decision tree, if a node has only one class, it is called ______ node.

Which of the following is an advantage of decision trees?

Which of the following is an advantage of decision trees?

Decision trees implicitly ignore the attribute of lowest relevance by the DT induction methods.

Decision trees implicitly ignore the attribute of lowest relevance by the DT induction methods.

What method can be used to handle missing values in the building of decision trees?

What method can be used to handle missing values in the building of decision trees?

Referring back to training examples instead of building an explicit model of the data, kNN algorithm is known as a ______ learner.

Referring back to training examples instead of building an explicit model of the data, kNN algorithm is known as a ______ learner.

What needs to be carefully chosen depending on the data types (Euclidean distance)?

What needs to be carefully chosen depending on the data types (Euclidean distance)?

The Naive Bayes classifier assumes that the attributes are dependent

The Naive Bayes classifier assumes that the attributes are dependent

What technique can be used in the Naive Bayes classifier to prevent a posterior probability from always being zero when an attribute value doesn't occur with a given class in the training data?

What technique can be used in the Naive Bayes classifier to prevent a posterior probability from always being zero when an attribute value doesn't occur with a given class in the training data?

What does Bagging stand for?

What does Bagging stand for?

Random Forests build decision trees with limited depth, aiming for high bias and low variance.

Random Forests build decision trees with limited depth, aiming for high bias and low variance.

What type of error is quantified by the proportion of misclassifications occurring for samples belonging to the training data?

What type of error is quantified by the proportion of misclassifications occurring for samples belonging to the training data?

A situation when the model fits the data too well and sometimes has a poorer generalization performance than another model with a higher training error, known as ______.

A situation when the model fits the data too well and sometimes has a poorer generalization performance than another model with a higher training error, known as ______.

Which of the following is a method to avoid overfitting?

Which of the following is a method to avoid overfitting?

Data splitting is done to generate a model development and evaluate.

Data splitting is done to generate a model development and evaluate.

What is the main objective of data splitting?

What is the main objective of data splitting?

Failure to consider dependence structures between datasets may result ______, which results in inflated estimates of model performance.

Failure to consider dependence structures between datasets may result ______, which results in inflated estimates of model performance.

In cross-validation, what does each 'fold' represent?

In cross-validation, what does each 'fold' represent?

After estimating the generalisation error, it is common to build one final model using a training dataset.

After estimating the generalisation error, it is common to build one final model using a training dataset.

What is the term of split when splitting the data taking class based into consideration when sampling the data?

What is the term of split when splitting the data taking class based into consideration when sampling the data?

A matrix that tabulates the results of classification in its most general format is knows as a ______.

A matrix that tabulates the results of classification in its most general format is knows as a ______.

What are two parameters conventionally used in data mining to describe binary classifications?

What are two parameters conventionally used in data mining to describe binary classifications?

Accuracy and Sensitivity are directly proportional, implying that a higher accuracy always indicates higher sensitivity

Accuracy and Sensitivity are directly proportional, implying that a higher accuracy always indicates higher sensitivity

What metric represents the mean value between sensitivity and specificity?

What metric represents the mean value between sensitivity and specificity?

A situation when there are disproportionate number of instances that belong to different class is known as ______.

A situation when there are disproportionate number of instances that belong to different class is known as ______.

What is the main problem with using training examples in an imbalanced dataset?

What is the main problem with using training examples in an imbalanced dataset?

Accuracy is a good and reliable indicator for imbalanced classifiers.

Accuracy is a good and reliable indicator for imbalanced classifiers.

When building classifiers, what are the three approaches to dealing with class imbalances?

When building classifiers, what are the three approaches to dealing with class imbalances?

When there is undersampling and oversampling the the minority and majority class respectfully is known as a ______ approach.

When there is undersampling and oversampling the the minority and majority class respectfully is known as a ______ approach.

Synthetic Minority Oversampling Technique is a?

Synthetic Minority Oversampling Technique is a?

Flashcards

Classification

Classification

Predicting a nominal label based on other attributes.

Supervised learning

Supervised learning

Mapping attribute values to class values using labeled data.

Decision tree

Decision tree

A directed acyclical graph representing queries to attributes.

Root nodes

Root nodes

Signup and view all the flashcards

Internal nodes

Internal nodes

Signup and view all the flashcards

Leaf nodes

Leaf nodes

Signup and view all the flashcards

Binary trees

Binary trees

Signup and view all the flashcards

Tree induction algorithm

Tree induction algorithm

Signup and view all the flashcards

Splitting criterion

Splitting criterion

Signup and view all the flashcards

Entropy

Entropy

Signup and view all the flashcards

Greedy Approach

Greedy Approach

Signup and view all the flashcards

Information gain

Information gain

Signup and view all the flashcards

Stopping criterion

Stopping criterion

Signup and view all the flashcards

Implicit Impulation

Implicit Impulation

Signup and view all the flashcards

Test data

Test data

Signup and view all the flashcards

Lazy learner

Lazy learner

Signup and view all the flashcards

Training error

Training error

Signup and view all the flashcards

Generalization error

Generalization error

Signup and view all the flashcards

Data splitting

Data splitting

Signup and view all the flashcards

Holdout method

Holdout method

Signup and view all the flashcards

Cross-validation

Cross-validation

Signup and view all the flashcards

Stratified cross validation

Stratified cross validation

Signup and view all the flashcards

Class imbalance

Class imbalance

Signup and view all the flashcards

Data-level approaches

Data-level approaches

Signup and view all the flashcards

Model-level approaches

Model-level approaches

Signup and view all the flashcards

SMOTE

SMOTE

Signup and view all the flashcards

Accuracy

Accuracy

Signup and view all the flashcards

Confusion matrix

Confusion matrix

Signup and view all the flashcards

Precision

Precision

Signup and view all the flashcards

Matthew's Correlation Coefficient

Matthew's Correlation Coefficient

Signup and view all the flashcards

Study Notes

- Elizabeth Wanner can be contacted at [email protected].

- Thanks to Dr. Felipe Campelo for preparing the slides.

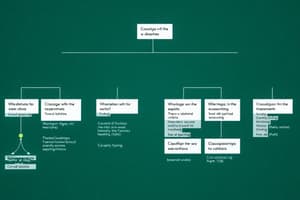

Outline of Topics

- Problem definition in classification

- Introduction to decision trees as a basic classifier

- Discussion of other classification methods

- Examination of data splitting and performance assessment techniques

- Addressing imbalanced classification scenarios

Problem Definition

- Classification predicts a nominal label (the class, or dependent variable) based on the values of other attributes (independent variables or predictors).

- Supervised learning is a type of learning where a model maps attribute values to class values, based on examples where the true Class value is known.

- Formally, given a collection of labelled records (training set) with a target variable (class), the task is to find a model that maps feature values to a class attribute

- The goal is to accurately assign class labels to unseen records.

- Classification is the task of learning a classification model, that maps each attribute vector x to one of the predefined class labels y.

- Table 3.1. provides examples of classification tasks

- Spam filtering

- Tumor identification

- Galaxy classification

- Table 3.2 is a sample data for the vertebrate classification problem

- The Vertebrate Name column would be useless for building a predictive model

General Classification Framework

- A modelling approach (a general model structure + a learning algorithm) is used

- The process of using a learning algorithm to build a classification model from the training data is induction.

- A set of data used for model induction (training data)

- A set of data used for assessing the model (test data)

- Some classifiers don't build an explicit model

- There are different approaches to training and testing

Important Considerations for Classification

- Models should be good at solving practical problems.

- Models should generate good predictions for unseen data

- Models must be tested on data that is:

- Representative of the data the model will encounter once deployed

- As unseen as possible

Decision Trees as a Basic Classifier

- Decision trees are classifiers commonly used to build more sophisticated ones

- A decision tree is a directed acyclical graph (or DAG) that represents a sequence of queries to single attributes.

- Each tree consists of three types of nodes:

- Root nodes: Zero incoming, zero or more outgoing links

- Internal nodes: Exactly one incoming, two or more outgoing links

- Leaf nodes: Exactly one incoming, zero outgoing links

- Most commonly used decision trees are built as binary trees.

- Root and internal nodes are associated with a question (a query on some attribute)

- Leaf nodes are associated with an answer (an inferred value for the class attribute).

Building Decision Trees

- Decision trees are built iteratively

- The algorithm determines:

- Whether to add a non-leaf or leaf node

- Which attribute to query if a non-leaf node is added

- How to use the attribute to split the data

- Building a decision tree requires training data (observations with known class labels) and a way to:

- Decide which attribute to query at each step

- Decide how to split the data based on that attribute

- Decide when to stop growing the tree

- Different tree induction algorithms and variants include:

- Hunt's Algorithm

- Ross Quinlan's methods (ID3, C4.5 / 4.8 / 5.0)

- Brieman's CART

- Methods from IBM (e.g., Mehta's SLIQ, Shaffer's SPRINT)

Splitting criteria in decision trees

- Binary attributes lead to simple yes/no, true/false questions.

- Nominal attributes can be split in a multiway or binary way (by turning n-ary questions into true/false ones).

- Splitting strategies for continuous attributes explicitly determine splitting thresholds

- Ordinal attributes can be treated as categorical (ignoring order) or semi-numerical (defining a threshold that considers ordering)

Attribute Test Condition Selection

- Any attribute can be selected as the splitting criterion at any node

- A greedy approach is usually followed: Select the one with the highest gain rather than overall tree condition

- The best split at a node separates the data into groups where a single class predominates in each group

Determining Impurity

- Let p(i | t) represent the proportion of records at a node t that belong to a class i.

- A measure is needed to quantify the heterogeneity (or “impurity”) of a certain mixture of classes.

- Common measures of impurity:

- Entropy

- Gini Index

- Misclassification Error

Examples of Entropy

- Maximum entropy occurs when all classes are equally represented, signifying largest variability and maximum impurity.

- Minimum entropy occurs when only a single class is represented in a group representing lowest variability and minimum impurity

- Intermediate values of entropy occur for different mixtures, depending on the heterogeneity of classes

Measuring Split Quality

- Split quality is measured by the relative reduction in total entropy

- Quantify improvement in node purity after a split.

- Data impurity at a node can be measured by the entropy of the data at that node.

- The total impurity of child nodes after a partition is measured by the total weighted entropy after the partition.

- Split quality is measured as the information gain of the split

- Calculate the difference between the impurity of the parent node and the weighted impurity of the child nodes.

- Gain(split) = Entropy(parent) – I(children)

- A tree induction algorithm uses the attribute with the highest gain at a given node to decide the best attribute for the split

When to Stop Growing a Decision Tree

- The DT induction algorithm needs to determine when it has reached a leaf node.

- Common criteria include:

- Impurity-based:

- Homogeneous node

- Impurity threshold

- Depth-based: Reach a maximum predefined tree depth

- Other criteria may be applicable

- Impurity-based:

Characteristics of Decision Trees

- Simple characteristics that provide practicality:

- Insensitive to data distribution/scale

- Computationally efficient

- Implicit attribute selection

Final Thoughts on Decision Trees

- There are many variants of decision trees, and different criteria for choosing splits, measures of impurity, and stop criteria

- DTs can deal with missing values by:

- Implicit imputation

- Treating missing values as a specific category

- Complex approaches, like using linear functions as split criteria, are possible but not widely used.

Other Classification Methods – K-Nearest Neighbours

- General idea: If it looks, walks, and quacks like a duck, then it is probably a duck.

- The basic algorithm requires:

- A set of stored records (training data)

- A dissimilarity measure

- A value of K, the number of nearest neighbours to retrieve

- To classify an unknown record:

- Compute dissimilarity to all training records

- Identify K nearest neighbours

- Use class labels of nearest neighbours to determine the class label of unknown record

K-Nearest Neighbours -- Main Issues

- Choosing K is crucial:

- Too small: classifier becomes sensitive to noise

- Too large: loses ability to identify local classes

- K can be learned from the data:

- Reserve a validation set

- Iteratively increase K, observe error measures, and pick K that provides the lowest error

- Dissimilarity measures need to be chosen based on data types, as kNN methods are sensitive to the attributes' relative scales

K-Nearest Neighbours Traits

- A lazy learner – refers directly to training examples whenever classifying a new observation

- Implementation is very easy

- It can be computationally expensive as each instance requires the computation of N dissimilarity values between training examples

- Missing values are not handled well and they interfere with distance calculations

- Can be made more robust

Naïve Bayes Classifier

- It uses Bayes' formula to calculate a value of probability for each class value (Hypothesis) given the attribute values (Evidence).

- P(H): Prevalence of C19 in the population

- P(E): Proportion of people that lose sense of taste and smell due to any cause

- P(E∣H): Proportion of people with C19 that lose sense of taste and smell

- P(H∣E): Updated belief that the patient really has C19

- The Naïve Bayes classifier calculates P(H|E) for all possible class values, and selects the one with the highest posterior probability.

- It assumes that attributes are independent, known as the naïve assumption

Naïve Bayes Classifier – Main Issues

- P(E∣H) calculation for continuous attributes can use:

- Attribute discretization

- Turning an interval/ratio variable into an ordinal one

- Density estimation methods

- Very robust to missing values

- The missing value is omitted from probability calculations

- When estimating conditional probabilities as part of the P(E | H) calculation, the zero-count problem may occur

- Use the Laplace estimator when calculating probabilities

Naïve Bayes Classifier traits

- Often performs surprisingly well even if the independence assumption is violated

- A maximum posterior probability is assigned to correct Class

- Problems are caused by adding too many redundant attributes

- Feature selection is suggested as a preprocessing step

Bagging

- Bootstrapping is a method of generating strong classifiers by training ensembles of low-performance methods

- The “weak” predictors can be any model if they:

- Perform better than random chance

- Are computationally cheap to train and use.

- Each weak model is trained on a bootstrap sample of the full training data

- Randomly sampling observations from the original data with the possibility of repetitions, until a set of the same size as the original is obtained

Random forests

- A common bagging method is the Random Forest, which is a bagging model built using decision trees

- Decision Trees are increased to the maximum depth, reaching homogeneity

- The DTs used have a low bias coupled with a high variance coupled to model aggregation

- Random forests perform sample the attributes without replacement to build each decision tree

- The goal is to have low correlation results that increase ensemble predictions

Assessing performance involves quantifying the accuracy of a classifier's predictions

- Training error: proportion of misclassifications that occur for samples within the training data

- Generalisation error. expected error of the model on previously unseen observations

Underfitting and overfitting, models that are too simple fail to capture data characteristics.

- High training and generalization errors occur

- As model complexity increases, these occur due to the reduction of errors

- This occurs when the model starts modelling noise over patterns of interest

- Limiting model complexity improves training

- Increase data set to resolve issues

Data Splitting

- The main purpose is to generate new models that offer good performance

- Estimating this error helps to achieve better unseen data that achieves low generalisation errors

- Models are split into different subsets due to the unfeasibility of collecting new data

- A portion is used for model development

- An independent portion is used for estimating model performance.

- Two approaches for splitting are:

- Holdout method

- Cross-validation

Holdout method

- Randomly splits data into training and test sets

- Performance observed on the test set is used to estimate the range of true error

- The objective is to be new data to achieve better results

- Failure to consider the potential of data leakage artificially inflates its estimated values

Cross Validation

- It is the most common approach to evaluating classifiers

- The aim is to use available data samples for learning a model

- Estimate generation performance

- Data is split equally into * k * sized subsets to called folds:

- Train model using data

- Estimate performance by averaging the average

Cross Validation - Considerations

- All issues for the holdout method also apply to CV

- Split the data using class balance

- Sampling data allows the ability for each fold to have the same proportion of all classes

- A method known as Stratified Cross Validation can help combine results

- After overestimating the generation error, a final model is built using a full data set since there's an increase is performance for models each time

Performance indicators

- Can be calculated in a variety of ways

- Not all performance indicators represent the same thing

- Commonly referred to in terms of positive and negative

- A confusion matrix tabulates the results of classification

- Commonly used performance indicators:

- Accuracy

- Sensitivity

- Specificity

- Precision

- Negative Predictive Value (NPV)

- Balanced Accuracy (BAcc)

- Matthews' Correlation Coefficient (MCC)

- Area under the ROC curve

- Area under the Precision-Recall curve

Class Skew

- In many data sets, there an disproportionate number of instances that belong to different classes

- Commonly known as class imbalance

- It is also common for the Class of interest to be a minority

- Insufficient data can lead to problems for * class of interest*

- Many measures of model quality are strongly affected by Class imbalance like in training samples

Dealing with class imbalance

- Three main approaches exist:

- Data-level approaches: Addresses the imbalance problem by changing the data sets

- Can utilize; undersampling of the majority class, oversampling of the minority class and synthetic data generation -Model-level approaches: which modify the way the models are trained to give greater focus to the minority class

- This can be achieved through - cost-sensitive learning or weighted objective generation -Hybrid Approaches: which combine elements under both factors

- Data-level approaches: Addresses the imbalance problem by changing the data sets

SMOTE Example

- An approach for dealing with inbalanced data

- Stands for Synthetic Minority Over sampling Technique

- Generates synthetic samples for the minority class to address the inbalanced number of variables

- Calculate distance between pairs

- Return set of synthetic minority samples

- SMOTE can work depending on the various common scenarios

- Variants that account for data dependencies do not currently exist

Studying That Suits You

Use AI to generate personalized quizzes and flashcards to suit your learning preferences.