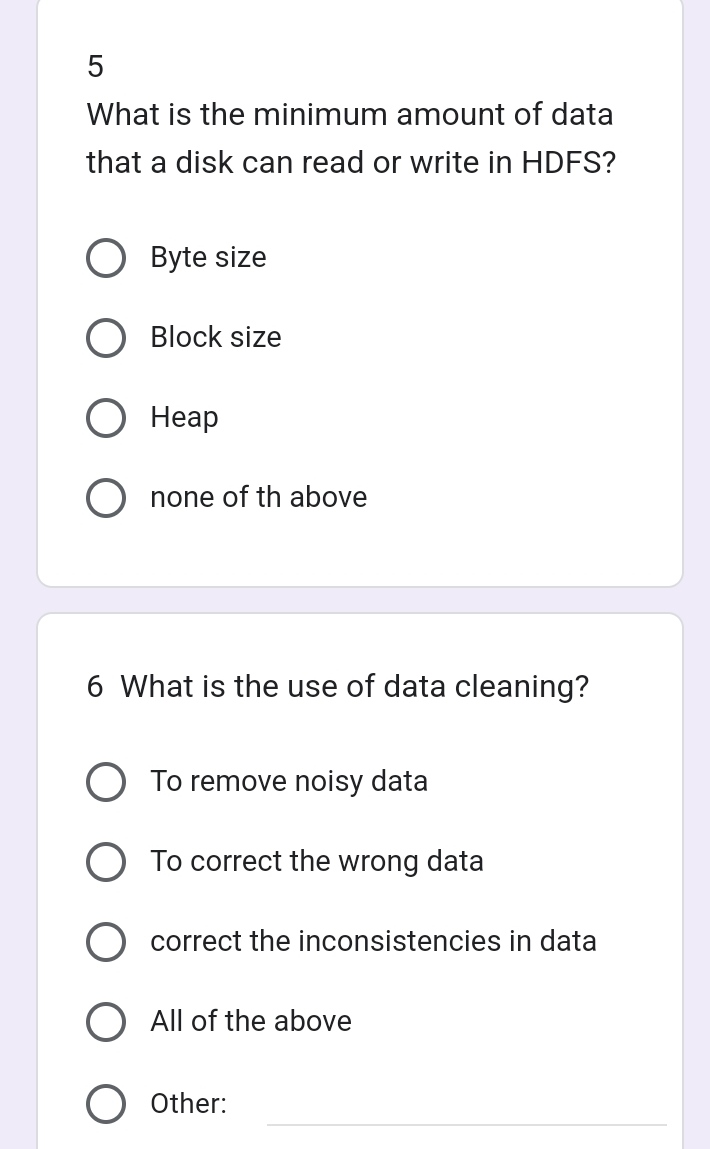

What is the minimum amount of data that a disk can read or write in HDFS? What is the use of data cleaning?

Understand the Problem

The questions test knowledge of the Hadoop Distributed File System (HDFS) and of data cleaning practices. The first asks for the minimum unit of data that HDFS reads or writes, and the second asks about the purpose of data cleaning.

Answer

Block size; All of the above

The final answer for question 5 is 'Block size', and for question 6 is 'All of the above'.

More Information

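An HDFS block is large by design: the default is 128 MB in Hadoop 2.x and later (64 MB in Hadoop 1.x). Large blocks keep the amount of metadata on the NameNode small and make seek time a small fraction of transfer time. Data cleaning removes errors and inconsistencies, such as noise, duplicates, and incorrect values, to improve data quality.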
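To make the block arithmetic concrete, here is a minimal Python sketch of how a file would be divided into blocks. It is only an illustration: the 128 MB value is an assumed block size, `split_into_blocks` is a hypothetical helper rather than part of any Hadoop API, and the sketch ignores replication.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size in bytes (128 MB)


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the sizes (in bytes) of the blocks a file would be split into.

    Hypothetical helper for illustration only; not a Hadoop API.
    """
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block holds only the leftover bytes, not a full block.
        sizes.append(remainder)
    return sizes


MB = 1024 * 1024
blocks = split_into_blocks(300 * MB)
print(len(blocks))                 # 3
print([s // MB for s in blocks])   # [128, 128, 44]
```

A 300 MB file therefore spans three blocks, and the final block is only as large as the data left over.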

Tips

For question 5, remember that the block is the unit in which HDFS reads and writes data, and that it is far larger than a block on a local file system. For question 6, remember that data cleaning covers several tasks, such as removing noise and correcting data.
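The data cleaning tasks mentioned above can be sketched in a few lines of Python. This is a simplified illustration, not a standard routine: the record fields (`name`, `age`), the 0 to 120 age range, and the `clean_records` helper are all assumptions made for the example.

```python
def clean_records(records):
    """Normalize, validate, and deduplicate a list of record dicts.

    Hypothetical helper; the field names and validity rules are assumptions.
    """
    cleaned = []
    seen = set()
    for rec in records:
        # Correct formatting: trim whitespace and normalize capitalization.
        name = (rec.get("name") or "").strip().title()
        # Correct types: ages may arrive as strings; drop unparseable values.
        try:
            age = int(rec.get("age"))
        except (TypeError, ValueError):
            continue
        # Remove noise: skip records with missing names or implausible ages.
        if not name or not 0 <= age <= 120:
            continue
        # Remove duplicates that only differed in formatting.
        key = (name, age)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "age": age})
    return cleaned


raw = [
    {"name": " alice ", "age": "30"},
    {"name": "Alice", "age": 30},     # duplicate after normalization
    {"name": "bob", "age": "n/a"},    # unparseable age
    {"name": "", "age": 25},          # missing name
    {"name": "carol", "age": 450},    # implausible age
    {"name": "dave", "age": 41},
]
print(clean_records(raw))
# [{'name': 'Alice', 'age': 30}, {'name': 'Dave', 'age': 41}]
```

Each step corresponds to one cleaning task: correcting formats, discarding noisy or invalid values, and removing duplicates.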

Sources

- Top 50 Big Data MCQs - InterviewBit - interviewbit.com

- Introduction to Hadoop Distributed File System HDFS | CodeX - medium.com
