Questions and Answers

What does the binomial coefficient represent mathematically?

- The number of ways to choose k items from N (correct)

- The number of ways to arrange N items

- The expected value in a binary distribution

- The probability of success in a Bernoulli trial

Which distribution does the binomial distribution reduce to when N equals 1?

- Poisson distribution

- Geometric distribution

- Bernoulli distribution (correct)

- Normal distribution
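The first two answers can be checked numerically. The sketch below assumes plain Python, and the helper names `binomial_pmf` and `bernoulli_pmf` are hypothetical. It uses `math.comb` for the binomial coefficient, which counts the ways to choose k of N items, and confirms that with N = 1 the binomial pmf matches the Bernoulli pmf.

```python
from math import comb

def binomial_pmf(k: int, n: int, theta: float) -> float:
    """Probability of exactly k successes in n independent trials,
    each succeeding with probability theta."""
    # comb(n, k) counts the ways to choose which k of the n trials succeed.
    return comb(n, k) * theta**k * (1 - theta) ** (n - k)

def bernoulli_pmf(y: int, theta: float) -> float:
    """Bernoulli pmf: theta if y == 1, otherwise 1 - theta."""
    return theta if y == 1 else 1 - theta

print(comb(5, 2))  # 10 ways to choose 2 items from 5

# With n = 1 the binomial pmf agrees with the Bernoulli pmf for both outcomes.
theta = 0.3
for y in (0, 1):
    print(y, binomial_pmf(y, 1, theta), bernoulli_pmf(y, theta))

# The binomial pmf sums to 1 over k = 0..n (up to floating-point rounding).
print(sum(binomial_pmf(k, 10, theta) for k in range(11)))
```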

In the logistic function, what range does the output take?

- -1 to 1

- 0 to ∞

- 0 to 1 (correct)

- -∞ to ∞
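Here is a minimal sketch of the logistic (sigmoid) function σ(a) = 1/(1 + e^(−a)) and its inverse, the logit log(p/(1 − p)). It assumes plain Python, and `sigmoid` and `logit` are illustrative names. It shows that the output stays strictly between 0 and 1, that a > 0 gives an output above 0.5, and that the logit undoes the sigmoid.

```python
import math

def sigmoid(a: float) -> float:
    """Logistic function: maps any real a into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))

def logit(p: float) -> float:
    """Log-odds of p, for 0 < p < 1; the inverse of the sigmoid."""
    return math.log(p / (1.0 - p))

for a in (-5.0, 0.0, 2.0):
    p = sigmoid(a)
    # p lies strictly between 0 and 1, and logit(p) recovers a
    # up to floating-point error.
    print(a, p, logit(p))
```

This direct formula makes `math.exp` overflow for very negative inputs (below roughly −709). A numerically stable version would branch on the sign of a.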

What role does the parameter ω play in the conditional probability distribution p(y|x, ω)?

What is the Heaviside function primarily used to represent?

What characteristic do all datasets in the Datasaurus Dozen share?

Which visualization technique can better distinguish differences between 1D data distributions?

What is a key limitation mentioned regarding the violin plot visualization?

Bayes' theorem is compared to which theorem in geometry?

In the context of Bayesian inference, what does the term 'inference' refer to?

What is the purpose of the simulated annealing approach as mentioned in the content?

What do the central shaded parts of the box plots indicate?

What kind of data is Bayes' rule primarily applied to?

What is a random variable?

Which of the following best describes a discrete random variable?

What does the probability mass function (pmf) compute?

Which of the following statements is true regarding the properties of the pmf?

In the context of rolling a die, which of the following represents the sample space?

What is an example of a degenerate distribution?

How is a continuous random variable defined?

What does the event 'seeing an odd number' represent if X is the outcome of a die roll?

What does the variable $Y$ represent in the context of univariate Gaussians?

In the formulas provided, what does $V[X]$ represent?

Which of the following statements about Anscombe’s quartet is true?

What do the terms $\theta_y$ and $\nu_y$ likely refer to in the distribution $N(X|\theta_y, \nu_y)$?

What does the notation $E[Y|X]$ represent?

Which equation highlights the relationship between the variance and the expectations of random variables?

What is likely the role of the hidden indicator variable $Y$ in the mixture model?

What can we infer if the datasets in Anscombe's quartet appear visually different?

How can the joint distribution of two random variables be represented when both have finite cardinality?

What is the mathematical expression for obtaining the marginal distribution of variable X?

What does it mean if two random variables, X and Y, are independent?

How is the conditional distribution of Y given X defined mathematically?

What is the purpose of using the sum rule in probability?

Which of the following correctly summarizes the joint distribution in probabilistic terms?

In the context of joint distributions, what does the term 'marginal' refer to?

How can the joint distribution table be restructured if the variables are independent?

What is the output of the sigmoid function for an input a > 0?

How is the log-odds 'a' defined in relation to the probability 'p'?

Which function maps the log-odds 'a' back to probability 'p'?

In binary logistic regression, what form does the linear predictor take?

What does the function p(y = 1|x, ω) represent in the context of the sigmoid function?

What is the output of the logit function when applied to probability 'p'?

Which of the following correctly describes the inverse relationship between the sigmoid and logit functions?

What represents the probability distribution in binary logistic regression?

Flashcards

Random Variable

A quantity whose value is unknown and can vary.

Sample Space

The set of all possible values that a random variable can take.

Event

A specific outcome or set of outcomes from the sample space.

Discrete Random Variable

A random variable whose sample space is finite or countably infinite.

Probability Mass Function (PMF)

Continuous Random Variable

A random variable that can take on any value within a given range.

Uniform Distribution

Degenerate Distribution

Joint Distribution

Marginal Distribution

Conditional Distribution

Independence of Random Variables

Product Rule for Independent Variables

2D Joint Probability Table

1D Marginal Probability Vector

Rule of Total Probability

Expected Value (E[X])

The mean of a distribution: E[X] = Σ x p(x) for a discrete random variable, or ∫ x p(x) dx for a continuous one.

Variance (Var[X])

The expected squared deviation from the mean: Var[X] = E[(X − μ)²].

Standard Deviation (SD[X])

The square root of the variance.

Conditional Expectation (E[X|Y])

Conditional Variance (Var[X|Y])

Law of Total Expectation

Mixture Distribution

Sigmoid function

Binomial Distribution

Bernoulli Distribution

Heaviside Function

Conditional Probability

The probability of B given that A has occurred: Pr(B|A) = Pr(A, B)/Pr(A).

Violin Plot

Simulated Annealing

Inter-Quartile Range (IQR)

Box Plot

Bayesian Inference

Bayes’ Theorem

Bayes’ Theorem

Signup and view all the flashcards

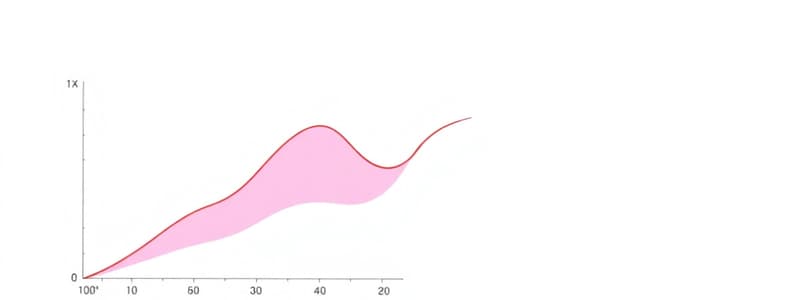

Kernel Density Estimate

p(y = 1|x, ω)

Log-Odds (a)

Logistic Function

Logit Function

Binary Logistic Regression

Linear Predictor (f(x; ω))

Conditional Bernoulli Model

Study Notes

Probability: Univariate Models

- Probability theory is nothing but common sense reduced to calculation (Pierre-Simon Laplace).

- Two interpretations of probability exist: frequentist and Bayesian.

- Frequentist interpretation: probability represents long-run frequencies of events.

- Bayesian interpretation: probability quantifies uncertainty or ignorance about something.

- The Bayesian interpretation can also model uncertainty about one-off events, which have no long-run frequency.

- The basic rules of probability theory are the same under both interpretations.

- Uncertainty can stem from ignorance (model or epistemic uncertainty) or from intrinsic variability (data or aleatoric uncertainty).

Probability as an Extension of Logic

- Probability extends Boolean logic.

- An event A is a binary proposition: it either holds or does not hold.

- Pr(A) represents the probability of event A being true.

- Pr(A) lies between 0 and 1 inclusive: 0 ≤ Pr(A) ≤ 1.

- Pr(A) = 0 means event A will definitely not happen; Pr(A) = 1 means it will definitely happen.

Probability of Events

- Joint probability: Pr(A, B) or Pr(AB) is the probability of both A and B occurring.

- If A and B are independent, then Pr(A, B) = Pr(A) × Pr(B).

- Conditional probability: Pr(B|A) is the probability that B happens given that A has occurred.

- Pr(B|A) = Pr(A, B)/Pr(A), defined only when Pr(A) > 0.

- Conditional independence: events A and B are conditionally independent given C if Pr(A, B|C) = Pr(A|C) × Pr(B|C).
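The event rules above can be checked on a fair six-sided die, where each outcome has probability 1/6. This sketch assumes equally likely outcomes and uses `fractions.Fraction` for exact arithmetic. The helpers `pr` and `pr_given` and the event names are hypothetical. It computes joint and conditional probabilities and tests one independent pair and one dependent pair of events.

```python
from fractions import Fraction

# Sample space of one roll of a fair six-sided die (all outcomes equally likely).
omega = {1, 2, 3, 4, 5, 6}

def pr(event: set[int]) -> Fraction:
    """Pr(event) for equally likely outcomes: |event| / |omega|."""
    return Fraction(len(event & omega), len(omega))

def pr_given(b: set[int], a: set[int]) -> Fraction:
    """Pr(B|A) = Pr(A, B) / Pr(A); only defined when Pr(A) > 0."""
    return pr(a & b) / pr(a)

even, at_most_4 = {2, 4, 6}, {1, 2, 3, 4}
print(pr(even & at_most_4))        # joint Pr(A, B) = 1/3
print(pr(even) * pr(at_most_4))    # 1/2 * 2/3 = 1/3, so A and B are independent
print(pr_given(at_most_4, even))   # 2/3, equal to Pr(B) as independence implies

odd, at_most_3 = {1, 3, 5}, {1, 2, 3}
print(pr(odd & at_most_3))         # 1/3
print(pr(odd) * pr(at_most_3))     # 1/4, so these two events are not independent
```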

Random Variables

- Random variables (r.v.) are unknown or changeable quantities.

- Sample space: the set of possible values of a random variable.

- Events are subsets of outcomes in a given sample space.

- Discrete random variables have finite or countably infinite sample spaces.

- Continuous random variables take on any value within a given range.
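A discrete random variable can be described by a table giving the probability of each value in its sample space. The sketch below is a hypothetical example using a fair die. It stores the pmf as a dictionary and checks that the values are non-negative and sum to 1. It then computes the probability of the event "seeing an odd number" by summing the pmf over the outcomes in that subset. The name `prob` is illustrative.

```python
from fractions import Fraction

# X = outcome of a fair die roll; the pmf maps each value x to Pr(X = x).
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

# pmf properties: each value lies in [0, 1] and all values sum to 1.
assert all(0 <= p <= 1 for p in pmf.values())
assert sum(pmf.values()) == 1

def prob(event: set[int]) -> Fraction:
    """Pr(X in event): sum the pmf over outcomes in the event (a subset of the sample space)."""
    return sum((pmf[x] for x in event if x in pmf), Fraction(0))

odd = {1, 3, 5}     # the event "seeing an odd number"
print(prob(odd))    # 1/2
```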

Cumulative Distribution Function (CDF)

- Cumulative distribution function (CDF) of a random variable X, denoted by P(x), is the probability that X takes on a value less than or equal to x.

- P(x) = Pr(X ≤ x)

- Pr(a < X ≤ b) = P(b) − P(a)
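For a discrete variable, P(b) − P(a) equals Pr(a < X ≤ b), so the point X = a is excluded. This small check on a fair die uses hypothetical helpers `cdf` and `pr_interval` and exact `Fraction` arithmetic.

```python
from fractions import Fraction

# pmf of a fair die: Pr(X = x) = 1/6 for x in 1..6
pmf = {x: Fraction(1, 6) for x in range(1, 7)}

def cdf(x: float) -> Fraction:
    """P(x) = Pr(X <= x)."""
    return sum((p for v, p in pmf.items() if v <= x), Fraction(0))

def pr_interval(a: float, b: float) -> Fraction:
    """Pr(a < X <= b), summed directly from the pmf."""
    return sum((p for v, p in pmf.items() if a < v <= b), Fraction(0))

print(cdf(4) - cdf(2))       # 1/3: outcomes 3 and 4 only
print(pr_interval(2, 4))     # 1/3: matches P(4) - P(2)

# Including the left endpoint gives a different answer for a discrete X.
closed = sum((p for v, p in pmf.items() if 2 <= v <= 4), Fraction(0))
print(closed)                # 1/2: outcomes 2, 3 and 4
```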

Probability Density Function (PDF)

- For a continuous random variable, the probability density function (PDF) p(x) is the derivative of the CDF: p(x) = dP(x)/dx.

- Pr(a ≤ X ≤ b) = ∫ p(x) dx, integrated from a to b.
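The link between the PDF and the CDF can be verified numerically for the standard normal distribution, which is chosen here only as an example. The sketch assumes the closed-form CDF 0.5·(1 + erf(x/√2)). It integrates the density with a simple trapezoidal rule, which is an illustrative choice and not a method from the notes. A central finite difference of the CDF then recovers the density. The function names are hypothetical.

```python
import math

def normal_pdf(x: float) -> float:
    """Density of the standard normal N(0, 1)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2 * math.pi)

def normal_cdf(x: float) -> float:
    """CDF of N(0, 1), written with the error function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def integrate(f, a: float, b: float, n: int = 10_000) -> float:
    """Trapezoidal-rule approximation of the integral of f from a to b."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b)) + sum(f(a + i * h) for i in range(1, n))
    return total * h

# Area under the pdf over [a, b] versus P(b) - P(a).
a, b = -1.0, 2.0
print(integrate(normal_pdf, a, b), normal_cdf(b) - normal_cdf(a))

# The slope of the CDF at x approximates the density at x.
x, h = 0.5, 1e-5
slope = (normal_cdf(x + h) - normal_cdf(x - h)) / (2 * h)
print(slope, normal_pdf(x))
```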

Quantiles

- Quantile function is the inverse of the CDF.

- P⁻¹(q) is the value x_q such that Pr(X ≤ x_q) = q.
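Because the CDF of a continuous distribution is increasing, its inverse can be computed numerically. This sketch assumes a standard normal target and uses bisection, which is one simple root-finding choice among several. The search bracket [−10, 10] and the tolerance are arbitrary assumptions, and `quantile` is a hypothetical name.

```python
import math

def normal_cdf(x: float) -> float:
    """CDF of the standard normal N(0, 1)."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))

def quantile(cdf, q: float, lo: float = -10.0, hi: float = 10.0, tol: float = 1e-10) -> float:
    """Invert a continuous, increasing CDF by bisection: find x_q with cdf(x_q) = q.
    Assumes the answer lies in [lo, hi]."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if cdf(mid) < q:
            lo = mid   # x_q is to the right of mid
        else:
            hi = mid   # x_q is at or to the left of mid
    return 0.5 * (lo + hi)

print(quantile(normal_cdf, 0.5))    # the median, approximately 0
print(quantile(normal_cdf, 0.975))  # approximately 1.96
```

For the normal distribution specifically, Python's standard library already provides this inverse as `statistics.NormalDist().inv_cdf(q)`.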

Moments of a Distribution

- Mean (μ): the expected value of a distribution.

- E[X] = ∫ x p(x) dx for continuous random variables.

- E[X] = Σₓ x p(x) for discrete random variables.

- Variance (σ²): the expected squared deviation from the mean.

- V[X] = E[(X − μ)²] = E[X²] − μ²

- Standard Deviation (σ): the square root of the variance.

- Mode: the value with the highest probability or probability density.
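The moment definitions can be checked on a concrete pmf. The sketch below uses the sum of two fair dice as an illustrative example. It computes the mean, variance, standard deviation and mode exactly with `Fraction`, and it confirms the identity V[X] = E[X²] − μ².

```python
from fractions import Fraction
from itertools import product

# pmf of S = sum of two independent fair dice (values 2..12).
pmf: dict[int, Fraction] = {}
for d1, d2 in product(range(1, 7), repeat=2):
    pmf[d1 + d2] = pmf.get(d1 + d2, Fraction(0)) + Fraction(1, 36)

mean = sum(x * p for x, p in pmf.items())                # E[S]
var = sum((x - mean) ** 2 * p for x, p in pmf.items())   # V[S] = E[(S - mu)^2]
second_moment = sum(x * x * p for x, p in pmf.items())   # E[S^2]
sd = float(var) ** 0.5                                   # standard deviation
mode = max(pmf, key=pmf.get)                             # most probable value

print(mean, var, second_moment - mean**2, mode)  # 7 35/6 35/6 7
print(round(sd, 4))                              # 2.4152
```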
