Questions and Answers

What is the initial value of 𝜃 used in Newton's method according to the content?

- 1.8

- 1.3

- 4.5 (correct)

- 2.8

Newton's method can only be used for finding roots of functions, not for maxima.

False (B)

What is the relationship between the first derivative of a function and its maxima?

The first derivative is zero at maxima.

In Newton's method, the next guess for 𝜃 updates using the formula 𝜃 := 𝜃 - _____ , where _____ is the first derivative of the function.

Match the following methods/terms with their descriptions:

What is the output of logistic regression based on the given hypothesis?

In binary classification, the target variable can take more than two values.

What is the main purpose of logistic regression in machine learning?

The logistic function is also known as the __________ function.

Match the following terms related to logistic regression with their descriptions:

Which of the following is NOT a property of the logistic function?

In logistic regression, the sum of probabilities for all classes equals 2.

Explain why linear regression performs poorly for binary classification.

What is the primary goal of the maximum likelihood principle in logistic regression?

Gradient ascent is used to minimize likelihood functions in logistic regression.

What is the update formula used in gradient ascent for logistic regression?

In logistic regression, to make calculations simpler, instead of maximizing the likelihood 𝐿(𝜃), we maximize the ________ likelihood ℓ(𝜃).

Match the algorithms to their purposes:

What is the result of applying Newton's Method in optimization?

The update formula for Newton's Method includes a negative sign because we are minimizing a function.

What is the stochastic gradient ascent rule primarily used for in logistic regression?

Flashcards

Classification

A type of machine learning where the target variable is a discrete category.

Binary Classification

A classification problem where the target variable can only take two possible values, typically labeled as 0 or 1.

Logistic Regression

A type of classification algorithm used for binary classification problems. It predicts the probability of an event occurring based on input features. It uses a sigmoid function to map output values between 0 and 1, representing the likelihood of the positive class.

Sigmoid (Logistic) Function

Hypothesis (ℎ𝜃(𝑥))

Logistic Regression Training

Binary Classification Evaluation

Gradient Descent

Newton's Method

Newton's Method Update Rule

Newton's Method for Maximization

Newton's Method & Optimal Learning Rate

Newton-Raphson Method

Likelihood Function (L(𝜃))

Maximum Likelihood Estimation

Log Likelihood (ℓ(𝜃))

Stochastic Gradient Ascent

Stochastic Gradient Ascent Update Rule (Logistic Regression)

Generalized Linear Model (GLM)

Study Notes

Introduction to Machine Learning, AI 305

- Logistic Regression is a supervised learning technique for classification.

- Previous week's topics covered linear regression, including linear hypothesis models, cost functions, gradient descent, least mean square (LMS) and normal equations.

- This week's topics include binary classification, logistic regression, cost function, Newton's method and multiclass classification.

Binary Classification

- In classification, the target variable (y) represents a discrete class, such as apartment, studio or house.

- In binary classification, y can take only two values: 0 or 1.

- Examples include email classification (spam/not spam) and tumor classification (malignant/benign).

- y ∈ {0, 1}

- 0 represents the negative class.

- 1 represents the positive class.

Linear Regression for Binary Classification

- Using linear regression for binary classification is problematic: a few outliers can shift the fitted line and move the decision threshold, and the predictions are not confined to the range between 0 and 1.

- The hypothesis should output values between 0 and 1 so that it can be read as a probability.

- The logistic function addresses this.

Logistic Regression

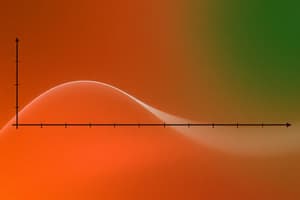

- Logistic regression uses a logistic function or sigmoid function as the hypothesis for binary classification.

- The hypothesis is: $h_\theta(x) = g(\theta^T x) = \dfrac{1}{1 + e^{-\theta^T x}}$

- where $z = \theta^T x$

- and $g(z) = \dfrac{1}{1 + e^{-z}}$

- g(z) maps any real number to the interval (0, 1), representing the probability.
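The definitions above can be sketched in a few lines of Python. This is a minimal illustration, not the course's reference code: the names `sigmoid` and `hypothesis` are assumptions, and the two-branch form is only one common way to avoid overflow for large negative z.

```python
import math

def sigmoid(z):
    """Logistic function g(z) = 1 / (1 + e^(-z)), written to avoid overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)  # z < 0 here, so exp(z) cannot overflow
    return ez / (1.0 + ez)

def hypothesis(theta, x):
    """h_theta(x) = g(theta^T x) for plain Python lists theta and x."""
    z = sum(t * xi for t, xi in zip(theta, x))
    return sigmoid(z)

print(sigmoid(0.0))                         # 0.5
print(hypothesis([0.0, 1.0], [1.0, 2.0]))   # g(2), a value between 0.5 and 1
```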

Logistic Regression - Derivatives

- The derivative of the logistic function is: g'(z) = g(z) (1 - g(z))
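The identity follows directly from the definition of g(z) by the chain rule; a short derivation, using only the symbols already defined:

$$
g'(z) = \frac{d}{dz}\left(1 + e^{-z}\right)^{-1}
      = \frac{e^{-z}}{\left(1 + e^{-z}\right)^{2}}
      = \frac{1}{1 + e^{-z}} \cdot \left(1 - \frac{1}{1 + e^{-z}}\right)
      = g(z)\,\bigl(1 - g(z)\bigr)
$$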

Logistic Regression - Probability

- hθ(x) represents the probability that the output is 1.

- If hθ(x) = 0.7, there's a 70% probability the output is 1.

- The probability of 0 is 1 - hθ(x).
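Because y is either 0 or 1, the two cases can be combined into a single expression, which is the form that appears inside the likelihood:

$$
p(y \mid x; \theta) = \bigl(h_\theta(x)\bigr)^{y}\,\bigl(1 - h_\theta(x)\bigr)^{1 - y}
$$

For y = 1 the second factor equals 1 and the expression reduces to $h_\theta(x)$; for y = 0 the first factor equals 1 and it reduces to $1 - h_\theta(x)$.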

Logistic Regression - Likelihood Function

- The likelihood function, L(θ), is the probability of the observed labels y given the inputs X, viewed as a function of θ with the data held fixed.

- L(θ) = L(θ; X, y) = p(y|X; θ).

Logistic Regression - Likelihood of Parameters

- Assuming independent training examples, the likelihood of parameters θ is:

- $L(\theta) = \prod_{i=1}^{n} p(y^{(i)} \mid x^{(i)}; \theta) = \prod_{i=1}^{n} \bigl(h_\theta(x^{(i)})\bigr)^{y^{(i)}} \bigl(1 - h_\theta(x^{(i)})\bigr)^{1 - y^{(i)}}$

Objective Function

- The objective is to choose θ to maximize the likelihood function (L(θ)) for the given data.

- In other words, the chosen θ is the one under which the observed training labels are most probable (maximum likelihood estimation).

Objective Function - Maximization

- Maximizing L(θ) is equivalent to maximizing the log likelihood ℓ(θ) = log L(θ), because the logarithm is strictly increasing.

- The log turns the product over training examples into a sum, which makes the derivatives simpler.
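Taking the log of the likelihood product gives $\ell(\theta) = \sum_{i=1}^{n} y^{(i)} \log h_\theta(x^{(i)}) + (1 - y^{(i)}) \log\bigl(1 - h_\theta(x^{(i)})\bigr)$. A minimal sketch of that sum follows; the function name `log_likelihood` and the toy data are hypothetical and chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(theta, X, y):
    """Sum over examples of y*log(h) + (1 - y)*log(1 - h)."""
    total = 0.0
    for x_i, y_i in zip(X, y):
        h = sigmoid(sum(t * v for t, v in zip(theta, x_i)))
        # Assumes 0 < h < 1; extreme theta values would need clipping.
        total += y_i * math.log(h) + (1 - y_i) * math.log(1.0 - h)
    return total

# Hypothetical toy data: the first feature is the constant 1 (intercept term).
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

print(log_likelihood([0.0, 0.0], X, y))    # every h is 0.5, so this is 4 * log(0.5)
print(log_likelihood([-4.0, 2.0], X, y))   # a better fit gives a larger value
```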

Gradient Ascent

- To maximize the log likelihood, use gradient ascent, the counterpart of the gradient descent used for linear regression.

- $\theta_j := \theta_j + \alpha \, \bigl(\nabla \ell(\theta)\bigr)_j$

- The positive sign is used because we maximize the function.

Gradient Ascent - Stochastic

- Using gradient ascent with one training example (x, y) produces the stochastic gradient ascent rule.
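For logistic regression, the single-example rule works out to $\theta_j := \theta_j + \alpha \, (y - h_\theta(x)) \, x_j$. The sketch below applies it by cycling through a small data set; the helper name `sga_step`, the learning rate, the number of passes, and the toy data are all assumptions made for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sga_step(theta, x, y, alpha):
    """One stochastic gradient ascent update from a single example (x, y)."""
    h = sigmoid(sum(t * v for t, v in zip(theta, x)))
    return [t + alpha * (y - h) * v for t, v in zip(theta, x)]

# Hypothetical toy data: the first feature is the constant 1 (intercept term).
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

theta = [0.0, 0.0]
for _ in range(500):                  # 500 passes over the data
    for x_i, y_i in zip(X, y):        # one update per training example
        theta = sga_step(theta, x_i, y_i, alpha=0.1)

print(theta)
```

Each update touches only one example, so the cost per step does not grow with the size of the training set.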

Gradient Ascent - Vectorized

- A vectorized implementation is $\theta := \theta + \alpha X^T \bigl(y - g(X\theta)\bigr)$
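To make the matrix expression concrete, here is a sketch that computes the same quantity with plain Python lists: first the residual vector y - g(Xθ), then its product with Xᵀ. The name `batch_step` and the toy data are hypothetical; a real implementation would more likely use an array library.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def batch_step(theta, X, y, alpha):
    """theta := theta + alpha * X^T (y - g(X theta)), using plain lists."""
    # residuals = y - g(X theta), one entry per training example
    residuals = [y_i - sigmoid(sum(t * v for t, v in zip(theta, x_i)))
                 for x_i, y_i in zip(X, y)]
    # gradient = X^T residuals, one entry per parameter
    gradient = [sum(r * x_i[j] for r, x_i in zip(residuals, X))
                for j in range(len(theta))]
    return [t + alpha * g for t, g in zip(theta, gradient)]

# Hypothetical toy data: the first feature is the constant 1 (intercept term).
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 3.0], [1.0, 4.0]]
y = [0, 0, 1, 1]

print(batch_step([0.0, 0.0], X, y, alpha=0.1))
```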

Newton's Method

- A different algorithm for maximizing l(θ)

- Newton's method was originally a method for finding a root of a function, that is, a value θ ∈ ℝ with f(θ) = 0.

- The update rule is: $\theta := \theta - \dfrac{f(\theta)}{f'(\theta)}$

Newton's Method - Linear Approximation

- Approximates a non-linear function, f, by the linear function tangent to f at the current θ.

- The next θ is the point where that tangent line equals zero.

Newton's Method - Example

- Applying the update rule several times moves θ toward a value where f(θ) = 0.
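A minimal sketch of the iteration is given below. The function f(θ) = θ² - 2 is a hypothetical stand-in (the function used in the lecture's figure is not given in these notes); the starting value 4.5 is the initial guess mentioned in the quiz above.

```python
def newton_root(f, f_prime, theta, iterations=10):
    """Repeat theta := theta - f(theta) / f'(theta) a fixed number of times."""
    for _ in range(iterations):
        theta = theta - f(theta) / f_prime(theta)
    return theta

# Hypothetical example function with a root at sqrt(2).
def f(t):
    return t * t - 2.0

def f_prime(t):
    return 2.0 * t

root = newton_root(f, f_prime, theta=4.5)
print(root)
```

The sketch runs a fixed number of iterations for simplicity; a practical version would stop once the change in θ falls below a tolerance and guard against f'(θ) = 0.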

Newton's Method - Maximization

- To maximize ℓ(θ), let f(θ) = ℓ'(θ): the maxima of ℓ are points where the first derivative ℓ'(θ) = 0, so the update rule becomes $\theta := \theta - \dfrac{\ell'(\theta)}{\ell''(\theta)}$.

Newton's Method - Quadratic Approximation

- Approximates ℓ(θ) by its second-order Taylor expansion around the current θ value, then maximizes that quadratic.

- Setting the derivative of the quadratic to zero gives the next θ.
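Written out in one dimension, with $\theta_0$ used here as a label for the current value (a symbol introduced only for this derivation), the quadratic approximation and its stationary point are:

$$
\ell(\theta) \approx \ell(\theta_0) + \ell'(\theta_0)\,(\theta - \theta_0) + \tfrac{1}{2}\,\ell''(\theta_0)\,(\theta - \theta_0)^2
$$

$$
\ell'(\theta_0) + \ell''(\theta_0)\,(\theta - \theta_0) = 0
\quad\Longrightarrow\quad
\theta = \theta_0 - \frac{\ell'(\theta_0)}{\ell''(\theta_0)}
$$

This is the same update obtained by applying the root-finding rule to the first derivative of ℓ.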

Newton's Method - Optimal Learning Rate

- Newton's method can be viewed as a gradient method in which the fixed learning rate α is replaced by a step size computed from the second derivative, so no learning rate has to be tuned by hand.

Newton-Raphson Method

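- The generalization of Newton's method to higher dimensions is the Newton-Raphson method.

- The update is: $\theta := \theta - H^{-1} \nabla \ell(\theta)$

- $\nabla \ell(\theta)$: vector of partial derivatives of $\ell(\theta)$ with respect to the $\theta_j$

- $H$: d-by-d Hessian matrix

- Hessian entries are given by $H_{ij} = \dfrac{\partial^2 \ell(\theta)}{\partial \theta_i \, \partial \theta_j}$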
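As an illustration, the update can be sketched for a logistic regression model with just two parameters, where the 2-by-2 Hessian can be inverted in closed form. For this model the gradient is $\sum_i (y^{(i)} - h_\theta(x^{(i)}))\,x^{(i)}$ and the Hessian entries are $-\sum_i h_\theta(x^{(i)})(1 - h_\theta(x^{(i)}))\,x_j^{(i)} x_k^{(i)}$. The name `newton_step` and the toy data are hypothetical; the data are deliberately not perfectly separable so that a finite maximizer exists.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def newton_step(theta, X, y):
    """One update theta := theta - H^{-1} grad for a two-parameter model."""
    g0 = g1 = 0.0              # gradient of the log likelihood
    h00 = h01 = h11 = 0.0      # entries of the symmetric 2-by-2 Hessian
    for (x0, x1), y_i in zip(X, y):
        h = sigmoid(theta[0] * x0 + theta[1] * x1)
        g0 += (y_i - h) * x0
        g1 += (y_i - h) * x1
        w = h * (1.0 - h)      # from g'(z) = g(z)(1 - g(z))
        h00 -= w * x0 * x0
        h01 -= w * x0 * x1
        h11 -= w * x1 * x1
    det = h00 * h11 - h01 * h01
    # H^{-1} grad via the closed-form inverse of a 2-by-2 matrix
    d0 = (h11 * g0 - h01 * g1) / det
    d1 = (-h01 * g0 + h00 * g1) / det
    return [theta[0] - d0, theta[1] - d1]

# Hypothetical toy data with overlapping classes; first feature is the constant 1.
X = [[1.0, 0.5], [1.0, 1.5], [1.0, 2.0], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 1, 0, 1, 1]

theta = [0.0, 0.0]
for _ in range(10):
    theta = newton_step(theta, X, y)
print(theta)
```

For larger d, the explicit inverse would normally be replaced by solving the linear system H d = ∇ℓ(θ) with a numerical library.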

Newton's Method Advantages

- Typically converges faster than batch gradient descent.

- Needs far fewer iterations to get close to the optimum.

Newton's Method Disadvantages

- Each iteration is more expensive, since it requires computing and inverting a d-by-d Hessian.

- Still quite fast if dimensions are not too high.

Fisher Scoring Method

- When Newton's method is applied to maximize the logistic regression log likelihood, the resulting method is also called Fisher scoring.
