Questions and Answers

What is a primary goal of programming heterogeneous parallel computing systems?

- Simplifying programming for sequential tasks

- Increasing single-thread performance

- Achieving high performance and energy-efficiency (correct)

- Eliminating the need for multiple processors

Which of the following best describes the concept of scalability in heterogeneous parallel computing?

- Ability to run programs on a single core without modification

- Requirement for programs to be vendor-specific

- Capability to maintain performance as hardware resources increase (correct)

- Limitation to only small-scale applications

Which parallel programming model is known for its ability to support a wide range of hardware architectures while allowing for high-level programming?

- CUDA C

- MPI

- OpenCL (correct)

- OpenACC

What is one of the key principles for understanding CUDA memory models?

Which aspect is NOT a focus when learning about parallel algorithms in this course?

What does Unified Memory in CUDA primarily aim to achieve?

Which aspect is NOT typically associated with OpenCL?

In the context of parallel programming, what is the significance of Dynamic Parallelism?

What is a primary function of CUDA streams in achieving task parallelism?

How does OpenACC primarily optimize parallel computing?

Which feature is NOT part of the CUDA memory model?

The implementation of MPI in joint MPI-CUDA programming primarily facilitates what?

Which application case study demonstrates the use of Electrostatic Potential Calculation?

What is a significant benefit of using parallel scan algorithms in CUDA?
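Module 12's "Per-thread Output Variable Allocation" shows one practical benefit of scan. Suppose each thread produces a different number of outputs. An exclusive scan of the per-thread counts then gives every thread its own starting offset in one shared output array, so threads never need to coordinate at write time. The Python below is a sequential sketch of that idea, not CUDA code. The function names are hypothetical, and each iteration of the outer loop in `allocate_and_write` stands in for one thread.

```python
from itertools import accumulate

def exclusive_scan(counts):
    """Exclusive prefix sum: result[i] = counts[0] + ... + counts[i-1]."""
    return list(accumulate(counts, initial=0))[:-1]

def allocate_and_write(per_thread_outputs):
    """Scan-based output allocation, modeled sequentially.

    per_thread_outputs[t] is the variable-length list that hypothetical
    thread t wants to emit. The exclusive scan of the lengths gives each
    thread a private starting offset, so all threads could write into one
    shared buffer at the same time without overlapping each other.
    """
    counts = [len(items) for items in per_thread_outputs]
    offsets = exclusive_scan(counts)
    buffer = [None] * sum(counts)
    for t, items in enumerate(per_thread_outputs):  # one iteration = one thread
        for k, item in enumerate(items):
            buffer[offsets[t] + k] = item
    return offsets, buffer

offsets, buffer = allocate_and_write([["a", "b"], [], ["c"], ["d", "e", "f"]])
print(offsets)  # [0, 2, 2, 3]
print(buffer)   # ['a', 'b', 'c', 'd', 'e', 'f']
```

In the example, thread 1 emits nothing. Threads 1 and 2 therefore share offset 2 without colliding.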

In the context of the advanced CUDA memory model, what is the function of texture memory?

Which of the following describes a key distinction between OpenCL and OpenACC?

What is the primary goal of memory coalescing in CUDA?
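One rough way to see what coalescing buys is to count how many aligned memory segments a single warp touches. The sketch below is a simplified Python model. It assumes 32-thread warps, 4-byte elements, 128-byte aligned segments and a row-major matrix. Real transaction sizes and caching rules differ across GPU generations, and the helper names are hypothetical. When adjacent threads read adjacent elements of a row, the whole warp falls into one segment. When adjacent threads walk down a column, each thread lands in a different segment.

```python
def warp_addresses(width, elem_bytes, pattern, fixed=0, warp_size=32):
    """Byte addresses one warp reads from a row-major width x width matrix.

    "row":    thread t reads M[fixed][t]  (neighbors are elem_bytes apart)
    "column": thread t reads M[t][fixed]  (neighbors are width * elem_bytes apart)
    """
    if pattern == "row":
        return [(fixed * width + t) * elem_bytes for t in range(warp_size)]
    if pattern == "column":
        return [(t * width + fixed) * elem_bytes for t in range(warp_size)]
    raise ValueError(f"unknown pattern: {pattern}")

def segments_touched(addresses, segment_bytes=128):
    """Distinct aligned segments covered by the addresses (fewer = better coalesced)."""
    return len({addr // segment_bytes for addr in addresses})

print(segments_touched(warp_addresses(1024, 4, "row")))     # 1
print(segments_touched(warp_addresses(1024, 4, "column")))  # 32
```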

What is the main characteristic of the work-efficient parallel scan kernel?
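The sketch below models a work-efficient exclusive scan in the Blelloch style. An up-sweep first builds a reduction tree, and a down-sweep then distributes the prefixes back down it. The code is sequential Python, and each inner `for` loop stands for one parallel step in which threads update disjoint elements. The course's own kernel may organize the phases differently (for example, as an inclusive Brent-Kung scan). The point to notice is the amount of work: about 2(n - 1) additions in total, versus roughly n log2(n) for the work-inefficient (Kogge-Stone-style) kernel.

```python
def blelloch_exclusive_scan(data):
    """Work-efficient exclusive scan (up-sweep / down-sweep), modeled sequentially.

    Assumes len(data) is a power of two. Each inner loop stands in for one
    parallel step. Returns (result, additions) so the O(n) work can be checked.
    """
    n = len(data)
    if n == 0 or n & (n - 1):
        raise ValueError("length must be a nonzero power of two")
    a = list(data)
    additions = 0
    # Up-sweep (reduction) phase: build partial sums in a balanced tree.
    stride = 1
    while stride < n:
        for i in range(2 * stride - 1, n, 2 * stride):
            a[i] += a[i - stride]
            additions += 1
        stride *= 2
    # Down-sweep phase: clear the root, then push prefixes back down the tree.
    a[n - 1] = 0
    stride = n // 2
    while stride >= 1:
        for i in range(2 * stride - 1, n, 2 * stride):
            left = a[i - stride]
            a[i - stride] = a[i]
            a[i] += left
            additions += 1
        stride //= 2
    return a, additions

result, adds = blelloch_exclusive_scan([3, 1, 7, 0, 4, 1, 6, 3])
print(result)  # [0, 3, 4, 11, 11, 15, 16, 22]
print(adds)    # 14
```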

What kind of performance consideration is essential when using a tiled convolution approach?
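A central consideration is data reuse weighed against halo overhead. Take a 1D version with output tile width O and an odd mask width M. Each tile loads its O + M - 1 input elements once, whereas an untiled kernel reads M inputs per output, about O·M per tile. The reduction ratio O·M / (O + M - 1) approaches M only when O is much larger than M. The Python sketch below models this sequentially, under two assumptions: a local list stands in for shared memory, and out-of-range ghost cells are treated as 0 with no memory traffic. It illustrates the idea and is not the course's 2D kernel.

```python
def conv1d_direct(x, mask):
    """Untiled reference: each output reads its mask inputs straight from x.
    Returns (output, global_loads); ghost cells count as 0 and cost no load."""
    m, r, n = len(mask), len(mask) // 2, len(x)
    out, loads = [], 0
    for i in range(n):
        acc = 0
        for j in range(m):
            g = i - r + j
            if 0 <= g < n:
                acc += mask[j] * x[g]
                loads += 1
        out.append(acc)
    return out, loads

def conv1d_tiled(x, mask, tile):
    """Tiled 1D convolution (odd mask width assumed), modeled sequentially.
    Returns (output, global_loads)."""
    m, r, n = len(mask), len(mask) // 2, len(x)
    out, loads = [0] * n, 0
    for start in range(0, n, tile):          # one iteration = one block
        # Load phase: copy tile + halo (tile + m - 1 elements) into "shared memory".
        shared = []
        for g in range(start - r, start + tile + r):
            if 0 <= g < n:
                shared.append(x[g])
                loads += 1
            else:
                shared.append(0)             # ghost cell, no memory traffic
        # Compute phase: every read comes from the shared copy.
        for k in range(min(tile, n - start)):
            out[start + k] = sum(mask[j] * shared[k + j] for j in range(m))
    return out, loads

x, mask = list(range(1, 17)), [1, 2, 1]
direct_out, direct_loads = conv1d_direct(x, mask)
tiled_out, tiled_loads = conv1d_tiled(x, mask, tile=4)
print(direct_out == tiled_out, direct_loads, tiled_loads)  # True 46 22
```

With a 16-element input, a 3-wide mask and 4-wide tiles, the tiled version issues 22 global loads, compared with 46 for the direct version.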

In the context of atomic operations within CUDA, what is a common issue that atomicity can help resolve?
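The classic problem atomicity solves is the lost update. An increment is really a read, an add and a write, and if two threads both read before either writes, one increment disappears. The deterministic Python model below replays a chosen interleaving of those steps on a single shared counter. The function name and schedule format are hypothetical. An atomic operation such as CUDA's `atomicAdd` performs the read-modify-write as one indivisible step. The interleaved case therefore cannot occur, and every schedule behaves like the serialized one.

```python
def run_interleaved(schedule, initial=0):
    """Replay a fixed interleaving of non-atomic increments on one shared counter.

    Each thread's increment is split into a "read" step (load into a private
    register) and a "write" step (store register + 1). `schedule` lists
    (thread_id, step) pairs in the order the hardware happens to run them.
    """
    memory = initial
    registers = {}
    for tid, step in schedule:
        if step == "read":
            registers[tid] = memory        # private copy of the shared value
        elif step == "write":
            memory = registers[tid] + 1    # store the incremented private copy
        else:
            raise ValueError(f"unknown step: {step}")
    return memory

serialized = [(0, "read"), (0, "write"), (1, "read"), (1, "write")]
interleaved = [(0, "read"), (1, "read"), (0, "write"), (1, "write")]
print(run_interleaved(serialized))   # 2
print(run_interleaved(interleaved))  # 1  (one update lost)
```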

What is a common performance consideration when implementing a basic reduction kernel?
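A common performance consideration for the basic reduction kernel is control divergence. When the active threads in each step are scattered (every 2nd, 4th, ... thread), nearly every warp contains both active and idle threads. The Python sketch below counts such warps for two indexing schemes. It assumes a block of `block_size` threads reducing 2 × `block_size` elements in shared memory:

- "basic": thread t works if t % stride == 0, with the stride doubling each step
- "improved": thread t works if t < stride, with the stride halving each step

These rules follow the common textbook formulation, so the course's exact kernels may differ in detail. The helper names are hypothetical.

```python
WARP_SIZE = 32

def active_threads(block_size, step, kernel):
    """Threads that execute the addition in one step of the block reduction."""
    if kernel == "basic":
        stride = 1 << step
        return [t for t in range(block_size) if t % stride == 0]
    if kernel == "improved":
        stride = block_size >> step
        return list(range(stride))
    raise ValueError(f"unknown kernel: {kernel}")

def divergent_warps(block_size, active):
    """Count warps in which some, but not all, threads take the addition branch."""
    active_set = set(active)
    count = 0
    for w in range(block_size // WARP_SIZE):
        lanes = range(w * WARP_SIZE, (w + 1) * WARP_SIZE)
        n_active = sum(1 for t in lanes if t in active_set)
        if 0 < n_active < WARP_SIZE:
            count += 1
    return count

def total_divergence(block_size, kernel):
    steps = block_size.bit_length()  # log2(block_size) + 1 for a power of two
    return sum(divergent_warps(block_size, active_threads(block_size, s, kernel))
               for s in range(steps))

def block_reduce(values, kernel):
    """Apply either scheme to 2 * block_size values and return the block total."""
    p = list(values)
    block_size = len(p) // 2
    for s in range(block_size.bit_length()):
        for t in active_threads(block_size, s, kernel):  # adds in a step are independent
            if kernel == "basic":
                p[2 * t] += p[2 * t + (1 << s)]
            else:
                p[t] += p[t + (block_size >> s)]
    return p[0]

print(total_divergence(256, "basic"), total_divergence(256, "improved"))  # 47 5
print(block_reduce(list(range(512)), "basic"))     # 130816
print(block_reduce(list(range(512)), "improved"))  # 130816
```

Both schemes produce the same total. The improved indexing keeps the active threads contiguous, so whole warps go idle together and divergence appears only once fewer than 32 threads remain.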

What does the term 'data movement API' typically refer to in the context of GPU architecture?

Which of the following best describes the purpose of the histogram example using atomics?
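The histogram-with-atomics example shows how many threads can safely update a small set of shared bins. The Python sketch below is a stand-in for the CUDA version, not CUDA itself. Worker threads take an interleaved (strided) share of the input, and a `threading.Lock` makes each bin increment indivisible, playing the role of `atomicAdd`. The binning of four lowercase letters per bin (a-d, e-h, ..., y-z) and the function name are assumptions for illustration. Because every increment is atomic, the result is the same however the threads interleave.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

NUM_BINS = 7  # a-d, e-h, i-l, m-p, q-t, u-x, y-z

def text_histogram(text, num_workers=4):
    """Count lowercase letters into 4-letter bins using concurrent workers."""
    histo = [0] * NUM_BINS
    lock = threading.Lock()

    def worker(tid):
        # Interleaved partitioning: worker tid handles tid, tid + num_workers, ...
        for i in range(tid, len(text), num_workers):
            pos = ord(text[i]) - ord("a")
            if 0 <= pos < 26:                # skip non-lowercase characters
                with lock:                   # stands in for atomicAdd(&histo[pos // 4], 1)
                    histo[pos // 4] += 1

    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        list(pool.map(worker, range(num_workers)))  # wait and surface any errors
    return histo

print(text_histogram("programming massively parallel processors"))
# [5, 5, 6, 10, 10, 1, 1]
```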

Study Notes

Course Introduction and Overview

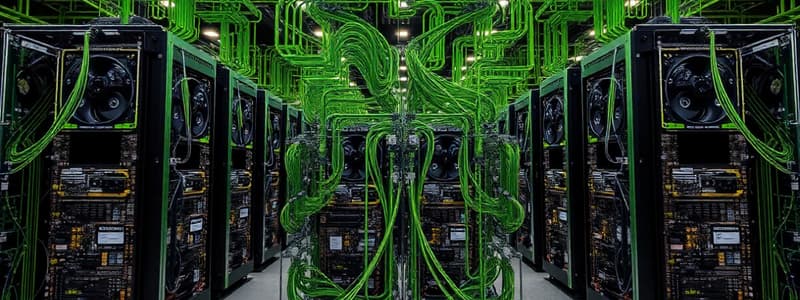

- The course teaches students how to program heterogeneous parallel computing systems, with a focus on high performance, energy efficiency, functionality, maintainability, scalability and portability across vendor devices.

- The course covers parallel programming APIs, tools, and techniques; principles and patterns of parallel algorithms; and processor architecture features and constraints.

People

- Professors and instructors include Wen-mei Hwu, David Kirk, Joe Bungo, Mark Ebersole, Abdul Dakkak, Izzat El Hajj, Andy Schuh, John Stratton, Isaac Gelado, John Stone, Javier Cabezas and Michael Garland.

Course Content

- The course covers the following modules:

- Module 1: Introduction to Heterogeneous Parallel Computing, CUDA C vs. CUDA Libs vs. Unified Memory, Pinned Host Memory

- Module 2: Memory Allocation and Data Movement API Functions, Introduction to CUDA C, Kernel-Based SPMD Parallel Programming

- Module 3: Multidimensional Kernel Configuration, CUDA Parallelism Model, CUDA Memories, Tiled Matrix Multiplication

- Module 4: Handling Boundary Conditions in Tiling, Tiled Kernel for Arbitrary Matrix Dimensions, Histogram (Sort) Example

- Module 5: Basic Matrix-Matrix Multiplication Example, Thread Scheduling, Control Divergence

- Module 6: DRAM Bandwidth, Memory Coalescing in CUDA

- Module 7: Atomic Operations

- Module 8: Convolution, Tiled Convolution, 2D Tiled Convolution Kernel

- Module 9: Tiled Convolution Analysis, Data Reuse in Tiled Convolution

- Module 10: Reduction, Basic Reduction Kernel, Improved Reduction Kernel, Scan (Parallel Prefix Sum)

- Module 11: Work-Inefficient Parallel Scan Kernel, Work-Efficient Parallel Scan Kernel, More on Parallel Scan

- Module 12: Scan Applications: Per-thread Output Variable Allocation, Scan Applications: Radix Sort, Performance Considerations (Histogram (Atomics) Example), Performance Considerations (Histogram (Scan) Example), Advanced CUDA Memory Model

- Module 13: Constant Memory, Texture Memory

- Module 14: Floating Point Precision Considerations, Numerical Stability

- Module 15: GPU as part of the PC Architecture

- Module 16: Data Movement API vs. GPU Teaching Kit, Accelerated Computing

- Module 17: Application Case Study: Advanced MRI Reconstruction

- Module 18: Application Case Study: Electrostatic Potential Calculation (part 1), Electrostatic Potential Calculation (part 2)

- Module 19: Computational Thinking for Parallel Programming, Joint MPI-CUDA Programming

- Module 20: Joint MPI-CUDA Programming (Vector Addition - Main Function), Joint MPI-CUDA Programming (Message Passing and Barrier), Joint MPI-CUDA Programming (Data Server and Compute Processes), Joint MPI-CUDA Programming (Adding CUDA), Joint MPI-CUDA Programming (Halo Data Exchange)

- Module 21: CUDA Python Using Numba

- Module 22: OpenCL Data Parallelism Model, OpenCL Device Architecture, OpenCL Host Code (Part 1), OpenCL Host Code (Part 2)

- Module 23: Introduction to OpenACC, OpenACC Subtleties

- Module 24: OpenGL and CUDA Interoperability

- Module 25: Effective use of Dynamic Parallelism, Advanced Architectural Features: Hyper-Q

- Module 26: Multi-GPU

- Module 27: Example Applications Using Libraries: CUBLAS, CUFFT, CUSOLVER

- Module 28: Advanced Thrust

- Module 29: Other GPU Development Platforms: QwikLABS, Where to Find Support
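The Python sketch below gives a concrete picture of the tiling idea from Modules 3 and 4 by modeling tiled matrix multiplication sequentially. The output is divided into tile-by-tile blocks. For each block, the shared K dimension is walked in phases, and in each phase one tile of M and one tile of N are copied into local lists that stand in for shared memory. Out-of-range elements are padded with 0, and out-of-range outputs are not written. This is the boundary-condition check that lets matrix sizes be arbitrary. Function and variable names are hypothetical, and the code shows the access pattern rather than the course's kernel.

```python
def matmul_tiled(M, N, tile):
    """Tiled P = M x N on nested lists (M is I x K, N is K x J), modeled sequentially."""
    I, K, J = len(M), len(N), len(N[0])
    P = [[0] * J for _ in range(I)]
    for bi in range(0, I, tile):                        # block row
        for bj in range(0, J, tile):                    # block column
            acc = [[0] * tile for _ in range(tile)]     # one running sum per thread
            for ph in range(0, K, tile):                # phase over the shared dimension
                # Load phase: pad out-of-range elements with 0 (boundary check).
                Ms = [[M[bi + ty][ph + tx] if bi + ty < I and ph + tx < K else 0
                       for tx in range(tile)] for ty in range(tile)]
                Ns = [[N[ph + ty][bj + tx] if ph + ty < K and bj + tx < J else 0
                       for tx in range(tile)] for ty in range(tile)]
                # Compute phase: thread (ty, tx) uses only the tile copies.
                for ty in range(tile):
                    for tx in range(tile):
                        acc[ty][tx] += sum(Ms[ty][k] * Ns[k][tx] for k in range(tile))
            for ty in range(tile):                      # only in-range threads write
                for tx in range(tile):
                    if bi + ty < I and bj + tx < J:
                        P[bi + ty][bj + tx] = acc[ty][tx]
    return P

print(matmul_tiled([[1, 2, 3], [4, 5, 6]], [[7, 8], [9, 10], [11, 12]], tile=2))
# [[58, 64], [139, 154]]
```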

GPU Teaching Kit

- The GPU Teaching Kit is licensed by NVIDIA and the University of Illinois under the Creative Commons Attribution-NonCommercial 4.0 International License.

Description

This quiz covers the foundational concepts of heterogeneous parallel computing. Students will explore programming APIs, parallel algorithms, and the architectural features relevant to high-performance and energy-efficient computing systems. Module-specific principles and tools will also be examined.