Podcast

Questions and Answers

What is the role of Faculty in the field of AI?

To make AI impactful and trustworthy

How many organizations has Faculty worked with?

Over 200

What is the purpose of hierarchical modeling according to the text?

Dealing with categorical features, whether nested or not

What does Bayesian inference involve?

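Combining a likelihood function (the model assumptions) with a prior distribution to form a posterior distribution, which is used to make predictions and draw conclusions from the data.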

Which algorithm is mentioned as key for sampling from posterior distributions?

Markov Chain Monte Carlo (MCMC), especially for high-dimensional problems.

Explain the limitation of the complete pooling approach in modeling hierarchical data.

Complete pooling treats all groups as one, so it ignores real differences between groups (such as counties).

What Python library can be used for implementing hierarchical models?

NumPyro

Why are hierarchical models important when dealing with categorical information?

They share information across groups, which prevents overfitting, avoids inferences too uncertain to be useful, and lets different data sources be incorporated effectively.

What does partial pooling represent in hierarchical models?

A continuum between complete pooling and no pooling, in which each group's estimate balances its own data against information shared across groups.

How does the plate context manager in NumPyro automate parameter definition in models?

It defines a parameter for each county automatically, so the model gets 87 alphas and 87 betas without each one being written out.

What distribution is given to sigma to ensure variance can vary widely?

An inverse gamma distribution, which keeps the variance away from zero while its long right tail lets it vary widely.

What is the trade-off between variational inference and MCMC regarding computational speed and correctness assurance?

Variational inference is faster, especially on big data, but is not guaranteed to converge to the correct answer; MCMC is slower but has theoretical convergence guarantees, so it is used to verify results.

Study Notes

- The speaker is Omar, a data scientist at Faculty, an artificial intelligence company with a mission to make AI impactful and trustworthy.

- Faculty has worked with over 200 organizations in both public and private sectors.

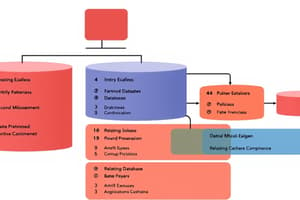

- The talk covers hierarchical modeling, Bayesian inference basics, and two common approaches for modeling hierarchical data.

- Hierarchical models are introduced as a solution for dealing with categorical features, whether nested or not.

- The "Radon in Minnesota" case study illustrates why hierarchical models matter when the data come from counties with widely varying sample sizes.

- Bayesian inference involves combining likelihood functions (model assumptions) with prior distributions to form posterior distributions, which help in making predictions and drawing conclusions from the data.

- Markov Chain Monte Carlo (MCMC) is a key algorithm used for sampling from posterior distributions, especially in high-dimensional problems.

- The limitations of complete pooling and no pooling approaches are discussed in the context of modeling hierarchical data.

- Complete pooling treats all groups as one, while no pooling treats each group independently; the shortcomings of both motivate a hierarchical modeling approach.

- To implement hierarchical models, Python libraries such as NumPyro can be used to encode models and run MCMC simulations.

- The speaker uses the plate context manager provided by NumPyro to define the parameters for each county in the model automatically.

- Samples drawn from the model now include 87 alphas and 87 betas (one of each per county), giving a distribution of possible lines for each county.

- The speaker shows histograms of the estimates for different counties, highlighting that some of them are highly uncertain.

- Introduces hierarchical models as a way to share information across groups and avoid inferences too uncertain to be useful.

- Discusses the concept of partial pooling as a continuum between complete pooling and no pooling approaches.

- Explains how hierarchical models introduce correlation between group-level parameters by drawing them from shared latent parameters.

- Describes how to incorporate partial pooling in the model by specifying the amount of pooling and introducing priors for the new parameters.

- Shows how different amounts of pooling affect the estimate for each county, balancing individual estimates against shared information.

- Demonstrates how hierarchical models help in obtaining more precise estimates by sharing information across groups and controlling inferences.

- Highlights the importance of hierarchical models when dealing with categorical information, to prevent overfitting and to incorporate different data sources effectively.

- Sigma is given an inverse gamma distribution, which keeps the variance away from zero while its long, flat right tail allows it to vary widely.

- NumPyro was chosen over Stan at Faculty for Bayesian modeling because it is Python-based and offers flexible interfaces for MCMC and variational inference without the need for a C++ compiler.

- Changing the prior in a model can impact the results, but if the prior is not highly informative, the posterior distributions tend to remain similar.

- Parameters can be pooled separately for different sets of groups when additional information distinguishes those groups, but this requires a more complex model structure.

- Variational inference is faster than MCMC on big data because its calculations are simpler, but it is not guaranteed to converge to the correct answer, so MCMC is used to verify its results.

- There is a trade-off between the computational speed of variational inference and the assurance of correctness compared to MCMC, which provides theoretical convergence guarantees.

- The speaker plans to address the remaining questions through detailed responses via email and will share a GitHub link for further reference.
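
The Bayesian update described in the notes (prior combined with likelihood to give a posterior), and the point that a weak prior barely changes the result, can both be seen in a minimal conjugate example. This is a sketch under assumptions not taken from the talk: a Normal likelihood with known noise standard deviation, a Normal prior on the mean, and made-up data. The function name `normal_posterior` is hypothetical; conjugacy gives a closed form, so no sampling is needed here.

```python
import math

def normal_posterior(prior_mean, prior_sd, data, noise_sd=1.0):
    """Conjugate update for the mean of a Normal likelihood with known noise_sd
    under a Normal(prior_mean, prior_sd) prior. Returns (posterior mean, posterior sd)."""
    prior_precision = 1.0 / prior_sd**2
    data_precision = len(data) / noise_sd**2
    post_precision = prior_precision + data_precision
    sample_mean = sum(data) / len(data)
    # Posterior mean: precision-weighted average of the prior mean and the sample mean.
    post_mean = (prior_precision * prior_mean + data_precision * sample_mean) / post_precision
    return post_mean, math.sqrt(1.0 / post_precision)

data = [1.2, 0.8, 1.0, 1.4]  # hypothetical observations, sample mean 1.1

# A Normal(0, 1) prior combined with the likelihood gives the posterior.
posterior_mean, posterior_sd = normal_posterior(0.0, 1.0, data)
print(f"posterior: mean={posterior_mean:.3f}, sd={posterior_sd:.3f}")

# Prior sensitivity: two weak priors centred far apart give nearly the same posterior,
# while a strong prior pulls the result toward its own mean.
weak_a, _ = normal_posterior(0.0, 10.0, data)
weak_b, _ = normal_posterior(5.0, 10.0, data)
strong, _ = normal_posterior(5.0, 0.1, data)
print(round(weak_a, 3), round(weak_b, 3), round(strong, 3))
```

Models like the radon example have no closed form, which is why sampling methods such as MCMC are needed.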
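
The notes name MCMC as the key sampling algorithm without saying which variant. As an illustration only, the sketch below implements random-walk Metropolis, one of the simplest MCMC algorithms (NumPyro's usual sampler, NUTS, is considerably more sophisticated). The target is a hypothetical toy posterior, a Normal(0, 1) prior on `mu` with a unit-variance Normal likelihood and made-up data, chosen because its exact posterior mean (0.88) is known and the sampler can be checked against it.

```python
import math
import random

def log_posterior(mu, data, prior_sd=1.0, noise_sd=1.0):
    """Unnormalised log posterior: Normal(0, prior_sd) prior on mu plus a Normal likelihood."""
    log_prior = -0.5 * (mu / prior_sd) ** 2
    log_lik = sum(-0.5 * ((y - mu) / noise_sd) ** 2 for y in data)
    return log_prior + log_lik

def metropolis(log_target, init, n_samples, step, seed=0):
    """Random-walk Metropolis with Gaussian proposals of standard deviation `step`."""
    rng = random.Random(seed)
    current, current_lp = init, log_target(init)
    samples = []
    for _ in range(n_samples):
        proposal = current + rng.gauss(0.0, step)
        proposal_lp = log_target(proposal)
        # Accept with probability min(1, p(proposal) / p(current)), computed in log space;
        # 1 - random() lies in (0, 1], so the log is always defined.
        if math.log(1.0 - rng.random()) < proposal_lp - current_lp:
            current, current_lp = proposal, proposal_lp
        samples.append(current)  # a rejected proposal repeats the current state
    return samples

data = [1.2, 0.8, 1.0, 1.4]  # hypothetical observations
draws = metropolis(lambda mu: log_posterior(mu, data), init=0.0, n_samples=20_000, step=0.5)
kept = draws[2_000:]  # discard the first draws as burn-in
print(f"posterior mean estimate: {sum(kept) / len(kept):.3f}")  # exact value is 0.88
```

Only the unnormalised log density is needed, which is what makes MCMC practical when the posterior has no closed form.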
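
The contrast between complete pooling and no pooling in the notes can be sketched on a few hypothetical counties (invented values, not the radon dataset): complete pooling computes one mean over every observation, while no pooling computes a separate mean per county.

```python
from statistics import mean

# Hypothetical measurements grouped by county (not the talk's dataset).
data = {
    "A": [1.1, 0.9, 1.3, 1.0, 1.2, 0.8, 1.1, 1.0],  # many observations
    "B": [2.5],                                     # a single observation
    "C": [0.4, 0.6],
}

# Complete pooling: ignore the grouping and estimate one shared mean.
complete = mean(y for ys in data.values() for y in ys)

# No pooling: estimate each county's mean independently.
no_pooling = {county: mean(ys) for county, ys in data.items()}

print(f"complete pooling: {complete:.3f}")
print(f"no pooling: {no_pooling}")
```

Complete pooling hides the fact that county C sits well below county A, while no pooling takes county B's single reading at face value; neither is satisfactory.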
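
In NumPyro, placing a `numpyro.sample` statement inside `with numpyro.plate("county", n):` makes it return a batch of `n` independent draws rather than a single value, which is how one line of model code yields a parameter for every county. The plain-Python sketch below does not use NumPyro; it only mimics that effect. The Normal(0, 10) priors, the function names, and the reading of alpha and beta as a per-county intercept and slope are illustrative assumptions.

```python
import random

N_COUNTIES = 87  # number of counties given in the notes

def draw_county_parameters(rng, n_counties=N_COUNTIES, prior_sd=10.0):
    """Mimic a plate of size n_counties: one independent alpha and beta per county.

    The Normal(0, prior_sd) priors are an illustrative assumption, not the talk's model.
    """
    alphas = [rng.gauss(0.0, prior_sd) for _ in range(n_counties)]
    betas = [rng.gauss(0.0, prior_sd) for _ in range(n_counties)]
    return alphas, betas

def county_line(alphas, betas, j, x):
    """Evaluate county j's line y = alpha_j + beta_j * x for one draw of the parameters."""
    return alphas[j] + betas[j] * x

rng = random.Random(42)
alphas, betas = draw_county_parameters(rng)
print(len(alphas), len(betas))
# Repeating the draw many times gives a whole distribution of lines per county.
```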
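
Why drawing group-level parameters from a shared latent parameter makes them correlated can be checked by simulation. The sketch assumes a hypothetical structure: a latent mean `mu ~ Normal(0, 1)` and county parameters `alpha_j ~ Normal(mu, tau)` with `tau` fixed at 0.5. Each pair is drawn independently given `mu`, yet marginally their correlation is Var(mu) / (Var(mu) + tau^2) = 1 / 1.25 = 0.8.

```python
import random

def simulate_pair(rng, tau=0.5):
    """Draw the latent mean, then two county parameters that share it."""
    mu = rng.gauss(0.0, 1.0)  # latent parameter shared by all counties
    return rng.gauss(mu, tau), rng.gauss(mu, tau)

def correlation(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

rng = random.Random(1)
pairs = [simulate_pair(rng) for _ in range(50_000)]
a1, a2 = zip(*pairs)
print(round(correlation(a1, a2), 2))  # theoretical value: 1 / (1 + 0.5**2) = 0.8
```

Shrinking `tau` toward zero pushes the correlation toward 1, which corresponds to complete pooling.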
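
The idea of partial pooling as a continuum, with the amount of pooling set by a parameter, can be sketched with the standard closed-form estimate for a normal hierarchical model with known variances. Each county's estimate is a precision-weighted average of its own mean and the overall mean. Assumptions not from the talk: the data and `SIGMA` are hypothetical, the between-county standard deviation `tau` is fixed by hand rather than given a prior, and the plain grand mean stands in for an estimated population mean.

```python
from statistics import mean

# Hypothetical grouped data (not from the talk).
data = {
    "A": [1.1, 0.9, 1.3, 1.0, 1.2, 0.8, 1.1, 1.0],
    "B": [2.5],
    "C": [0.4, 0.6],
}
SIGMA = 0.5  # assumed known within-county noise sd

def partial_pooling(data, tau, sigma=SIGMA):
    """Shrink each group mean toward the grand mean.

    tau is the between-group sd: tau -> 0 approaches complete pooling,
    tau -> infinity approaches no pooling.
    """
    grand_mean = mean(y for ys in data.values() for y in ys)  # simplification
    estimates = {}
    for group, ys in data.items():
        w_data = len(ys) / sigma**2  # precision of the group's own mean
        w_prior = 1.0 / tau**2       # precision of the shared grand mean
        estimates[group] = (w_data * mean(ys) + w_prior * grand_mean) / (w_data + w_prior)
    return estimates

for tau in (0.01, 0.3, 100.0):
    est = partial_pooling(data, tau)
    print(tau, {g: round(v, 3) for g, v in est.items()})
```

At intermediate `tau`, county B (one observation) is pulled strongly toward the grand mean while county A (eight observations) barely moves, which is the balance between individual estimates and shared information that the notes describe.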
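
The claim that an inverse gamma prior keeps sigma away from zero while letting it vary widely can be checked from the density p(x) = b^a / Gamma(a) * x^(-a-1) * exp(-b/x). The sketch uses a = b = 1 purely as an assumption, since the notes do not give the talk's hyperparameters. Near zero the exp(-b/x) factor drives the density to essentially nothing, while on the right it decays only polynomially.

```python
import math
import random

def inv_gamma_pdf(x, a=1.0, b=1.0):
    """Inverse gamma density b^a / Gamma(a) * x^(-a-1) * exp(-b/x), for x > 0."""
    return math.exp(a * math.log(b) - math.lgamma(a) - (a + 1) * math.log(x) - b / x)

for x in (0.01, 0.1, 1.0, 10.0, 100.0):
    print(f"p({x}) = {inv_gamma_pdf(x):.3g}")

# Sampling: if Y ~ Gamma(shape a, rate b), then 1 / Y follows this inverse gamma.
# random.gammavariate takes (shape, scale), so scale = 1 / rate = 1 here.
rng = random.Random(0)
draws = [1.0 / rng.gammavariate(1.0, 1.0) for _ in range(10_000)]
print(f"smallest draw: {min(draws):.3f}, largest draw: {max(draws):.1f}")
```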
