Questions and Answers

What is the primary purpose of a Decision Tree in machine learning?

- To perform unsupervised learning tasks

- To analyze large datasets without any structure

- To implement clustering algorithms

- To solve classification problems (correct)

Which node in a Decision Tree is responsible for making decisions?

- Root Node

- Leaf Node

- Output Node

- Decision Node (correct)

Which of the following accurately describes the structure of a Decision Tree?

- It is a circular graph that represents decision processes

- It uses nodes exclusively for numerical data representation

- It has a tree-like structure with branches representing decision rules (correct)

- It consists only of linear paths from the root to the leaves

What algorithm is commonly used to build a Decision Tree?

Which of the following is NOT a characteristic of the Decision Tree?

Flashcards

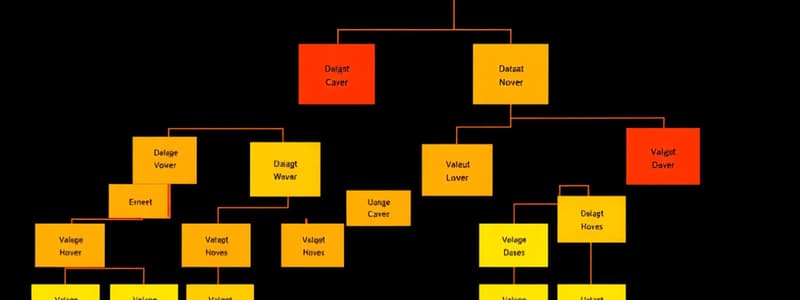

Decision Tree Algorithm

A supervised learning algorithm that creates a tree-like model to categorize data. It features decision nodes for branching decisions and leaf nodes representing the outcome.

Root Node

The starting point of the decision tree. It represents the initial decision to be made.

Decision Node

Internal nodes in a decision tree that represent a test or question based on a data feature. They have multiple branches that lead to further decisions.

Leaf Node

CART (Classification and Regression Tree) Algorithm

Study Notes

Decision Tree Classification Algorithm

- Decision trees are a supervised learning technique that can be used for both classification and regression, though they are most often applied to classification.

- They're tree-structured classifiers, with internal nodes representing features, branches representing decision rules, and leaf nodes representing outcomes.

- Decision nodes test a feature and have multiple branches, while leaf nodes hold the output of those decisions and have no further branches.

- Decision trees are graphical representations of decision-making processes based on given data features, providing possible solutions to problems.

- They resemble a tree: they start at a root node and expand into branches, forming a tree-like structure.

- A common algorithm for building the tree is CART (Classification and Regression Tree); other tree-building algorithms, such as ID3 and C4.5, also exist.

- A decision tree asks a question and, based on the answer (yes/no), splits further into subtrees.
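The yes/no questioning described above can be sketched as a walk from the root to a leaf. This is a minimal illustration, not a trained model: the tree, the question names (`salary_at_least_50k`, `commute_under_1h`) and the outcomes are all hypothetical.

```python
# Hypothetical hand-built tree (an assumption for illustration only).
# Decision nodes are dicts holding a yes/no question and two branches;
# leaf nodes are plain strings holding the final outcome.
tree = {
    "question": "salary_at_least_50k",
    "yes": {
        "question": "commute_under_1h",
        "yes": "accept offer",
        "no": "decline offer",
    },
    "no": "decline offer",
}


def predict(node, sample):
    """Walk from the root to a leaf, following the answer at each decision node."""
    while isinstance(node, dict):          # still at a decision node
        answer = sample[node["question"]]  # True means "yes", False means "no"
        node = node["yes"] if answer else node["no"]
    return node                            # a leaf holds the outcome


print(predict(tree, {"salary_at_least_50k": True, "commute_under_1h": True}))
# prints: accept offer
print(predict(tree, {"salary_at_least_50k": False, "commute_under_1h": True}))
# prints: decline offer
```

Each loop iteration moves one level down the tree, so a prediction costs at most as many questions as the tree is deep.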

Decision Tree Terminologies

- Root Node: The starting point of a decision tree, representing the entire dataset.

- Leaf Node: The final output nodes, where the tree cannot be further divided.

- Splitting: The process of dividing a decision node into sub-nodes based on conditions.

- Branch/Subtree: A section of the tree formed when a node is split.

- Pruning: Removing unnecessary branches from the tree in order to refine it.

- Parent/Child Node: A node that is split into sub-nodes is the parent of those sub-nodes, and the sub-nodes are its children. The root node is the only node with no parent.
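One way to make these terms concrete is a small node type. The sketch below is an assumption for illustration: the `Node` class, its fields, and the `leaves` and `depth` helpers are hypothetical names, not part of any library.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)
class Node:
    label: str                                    # feature tested, or outcome at a leaf
    children: List["Node"] = field(default_factory=list)
    parent: Optional["Node"] = field(default=None, repr=False)

    def add_child(self, child: "Node") -> "Node":
        """Splitting: attach a sub-node and record this node as its parent."""
        child.parent = self
        self.children.append(child)
        return child

    @property
    def is_root(self) -> bool:
        return self.parent is None                # the root has no parent

    @property
    def is_leaf(self) -> bool:
        return not self.children                  # a leaf cannot be divided further


def leaves(node: Node) -> List[Node]:
    """Collect the leaf nodes of the subtree rooted at `node`."""
    if node.is_leaf:
        return [node]
    return [leaf for child in node.children for leaf in leaves(child)]


def depth(node: Node) -> int:
    """Number of branches on the longest path from `node` down to a leaf."""
    if node.is_leaf:
        return 0
    return 1 + max(depth(child) for child in node.children)


# Hypothetical tree: the root tests "outlook", one child tests "humidity".
root = Node("outlook")
humidity = root.add_child(Node("humidity"))
humidity.add_child(Node("no"))
humidity.add_child(Node("yes"))
root.add_child(Node("yes"))

print([leaf.label for leaf in leaves(root)])  # prints: ['no', 'yes', 'yes']
print(depth(root))                            # prints: 2
```

Here `humidity` together with its two leaves is a subtree, and pruning would amount to replacing that subtree with a single leaf.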

How the Decision Tree Algorithm Works

- The algorithm begins at the root node (which contains the entire dataset).

- It identifies the best attribute using an Attribute Selection Measure (ASM).

- It splits the dataset into subsets, one for each possible value of the best attribute.

- It creates a decision tree node that tests the best attribute.

- It recursively repeats this process on each subset, creating further nodes and branches until no further split is possible; the final nodes become leaf nodes.
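The steps above can be sketched as a short recursive function. This is a minimal ID3-style sketch that uses information gain as the attribute selection measure and splits on every value of a categorical attribute; it is not CART, which creates binary splits. The toy dataset and the names `build_tree`, `rows`, and `labels` are assumptions for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def information_gain(rows, labels, attr):
    """Entropy of the labels minus the weighted entropy after splitting on attr."""
    total = len(rows)
    remainder = 0.0
    for value in set(row[attr] for row in rows):
        subset = [lab for row, lab in zip(rows, labels) if row[attr] == value]
        remainder += len(subset) / total * entropy(subset)
    return entropy(labels) - remainder


def build_tree(rows, labels, attrs):
    # Stop when the subset is pure or no attributes remain: emit a leaf
    # holding the majority class.
    if len(set(labels)) == 1 or not attrs:
        return Counter(labels).most_common(1)[0][0]
    # Pick the best attribute according to the selection measure.
    best = max(attrs, key=lambda a: information_gain(rows, labels, a))
    node = {"attribute": best, "branches": {}}
    remaining = [a for a in attrs if a != best]
    # Split the data by each value of the best attribute and recurse.
    for value in sorted(set(row[best] for row in rows)):
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        node["branches"][value] = build_tree(
            [rows[i] for i in idx], [labels[i] for i in idx], remaining
        )
    return node


# Hypothetical toy dataset.
rows = [
    {"outlook": "sunny", "windy": "no"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "sunny", "windy": "yes"},
    {"outlook": "rainy", "windy": "no"},
    {"outlook": "rainy", "windy": "yes"},
    {"outlook": "rainy", "windy": "yes"},
]
labels = ["play", "play", "play", "play", "stay", "stay"]

tree = build_tree(rows, labels, ["outlook", "windy"])
print(tree)
```

On this data the root tests `outlook`, because it yields the larger information gain; the sunny branch is already pure and becomes a leaf, while the rainy branch is split once more on `windy`.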

Attribute Selection Measures

- Information Gain: Measures the reduction in entropy after a dataset is split on a specific attribute. At each step, the algorithm splits on the attribute with the highest information gain.

- Gini Index: Measures the impurity of the dataset, with lower values indicating higher purity. The CART algorithm uses it to create binary splits, preferring the split with the lowest Gini index.
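Both measures can be computed in a few lines. The sketch below uses the standard definitions (entropy as the negative sum of p·log2 p over classes, Gini index as one minus the sum of squared class proportions); the function names and the example split are assumptions for illustration.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over the class proportions."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def gini(labels):
    """Gini index: 1 - sum(p ** 2) over the class proportions."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def weighted(measure, groups):
    """Average of `measure` over the groups, weighted by group size."""
    total = sum(len(g) for g in groups)
    return sum(len(g) / total * measure(g) for g in groups)


# Hypothetical binary split of ten samples into two groups of five.
parent = ["yes"] * 5 + ["no"] * 5
left = ["yes"] * 4 + ["no"]
right = ["yes"] + ["no"] * 4

info_gain = entropy(parent) - weighted(entropy, [left, right])
gini_after_split = weighted(gini, [left, right])

print(round(info_gain, 3))         # prints: 0.278
print(round(gini(parent), 3))      # prints: 0.5
print(round(gini_after_split, 3))  # prints: 0.32
```

The split lowers the Gini index from 0.5 to 0.32 and yields a positive information gain, so both measures agree that it makes the groups purer than the parent.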

Pruning

- Pruning is the process of removing unnecessary nodes and branches from a decision tree in order to reduce overfitting.

- Two prominent pruning types are Cost Complexity Pruning and Reduced Error Pruning.
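As a rough sketch of the idea behind cost complexity pruning, each candidate tree can be scored by its training error plus a penalty proportional to its number of leaves, and the tree with the lowest score is kept. The error counts, leaf counts, and the names `cost_complexity` and `preferred` below are hypothetical; a real implementation would derive the candidate subtrees from a fitted tree.

```python
def cost_complexity(misclassified, n_samples, n_leaves, alpha):
    """Training error rate plus a penalty of alpha per leaf node."""
    return misclassified / n_samples + alpha * n_leaves


# Hypothetical candidates evaluated on 100 training samples:
# the full tree fits better but has more leaves than the pruned one.
N_SAMPLES = 100
candidates = {
    "full": {"misclassified": 2, "n_leaves": 8},
    "pruned": {"misclassified": 5, "n_leaves": 3},
}


def preferred(alpha):
    """Name of the candidate with the lowest cost-complexity score."""
    return min(
        candidates,
        key=lambda name: cost_complexity(
            candidates[name]["misclassified"],
            N_SAMPLES,
            candidates[name]["n_leaves"],
            alpha,
        ),
    )


print(preferred(0.0))   # prints: full
print(preferred(0.01))  # prints: pruned
```

With no penalty the larger tree wins on training error alone; once the per-leaf penalty is large enough, the smaller tree scores better, which is how the penalty trades fit against size.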

Advantages of Decision Trees

- Easy to understand

- Useful for decision-related problems

- Demonstrates a tree-like structure that is easy to follow.

- Reduced data cleaning requirements compared to other algorithms

Disadvantages of Decision Trees

- Can become complex when the tree has many layers.

- Prone to overfitting (which can be mitigated with techniques such as pruning or ensemble methods like Random Forest)

- Computational complexity may increase with more class labels.
