Questions and Answers

What is the possible solution mentioned to address sensitivity to small shifts and color-based appearance variations in feature representations?

edges and intensity oriented gradients

What is Intersection over Union used to evaluate in object detection algorithms?

- The area of union between pixels

- Object orientation

- The overlap between two bounding boxes (correct)

- Pixel intensity variations

Deterministic tracking methods are only suitable for offline usage.

True (A)

____ filtering algorithms estimate posterior belief and state distribution based on control data and sensor measurements.

Match the object tracking algorithm components with their descriptions:

What is the main goal of Reinforcement Learning?

What is the key advantage of Supervised Learning?

Unsupervised Learning involves learning from labelled examples.

Semantic segmentation involves dividing an image into multiple segments and assigning each pixel a ____________.

Match the Machine Learning paradigm with its description:

What does DeepSORT use to improve the ability to track objects in the presence of occlusion?

What is the fundamental matrix used for in epipolar geometry?

Stereo cameras can deliver scaled scenes because they have two lenses.

The apparent motion of objects between two stereo images is represented by a ________ map.

Match the following matching techniques with their descriptions:

What is the purpose of adjusting the images to make epipolar lines horizontal?

What are some cues that humans use to estimate depth from a single image?

What is an important element of traditional methods for estimating depth from a single image?

The first successful method to determine absolute depth involved learning 3 sets of parameters per row of the image, including estimating absolute depth of a patch, uncertainty estimation, and a smoothing term in the _____.

Using superpixels to calculate depth involves determining the depth of all pixels belonging to a surface based on their 2D coordinates.

Match the following approaches with their method of solving the depth estimation problem:

What are some limitations of estimating depth from a single image using traditional methods?

Study Notes

Machine Learning Paradigms

- Supervised Learning:

- Learning from examples with labeled data

- Examples: email classification, image classification, speech recognition, language translation, convolutional networks for scene understanding

- Function approximation: deduce the equation from the data

- Advantages: abundant labeled data available, high accuracy

- Unsupervised Learning:

- Learning patterns and structures without labeled data

- Examples: clustering, dimensionality reduction, anomaly detection

- Goal: learn hidden representation of data

- Evaluation metrics: silhouette score, Davies-Bouldin index, reconstruction error

- Semi-supervised Learning:

- Learning from limited labeled data and abundant unlabeled data

- Challenges: cost and time requirements, labeling bias, dynamic nature of data

- Techniques: pseudo-labeling, consistency regularization, self-training

- Applications: label-scarce applications, medical image segmentation

- Self-supervised Learning:

- Learning from intrinsic signals in the data

- Generating supervisory signals from input data

- Pretext tasks: predicting one part of the data from another

- Techniques: generative, contrastive, adversarial

- Applications: depth estimation, 3D reconstruction

- Reinforcement Learning:

- Learning without examples, through interaction with the environment

- Optimization of a reward signal

- Goal: maximize future reward

- DRL algorithms: value-based, policy-based, actor-critic
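
The goal of maximizing future reward is commonly formalized as a discounted return. The sketch below is one illustrative way to compute it; the discount factor `gamma` and the reward list are assumptions for the example, not values from these notes.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where the reward at step t is weighted by gamma**t.

    `gamma` is an assumed discount factor in [0, 1].
    """
    total = 0.0
    # Work backwards so each step reuses the return of the following step.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


# Hypothetical episode: 1 + 0.5 * 0 + 0.25 * 2 = 1.5
print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))
```

A smaller `gamma` makes the agent favor immediate reward; a value close to 1 weights distant rewards almost as much as near ones.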

Semantic Segmentation

- Definition: dividing an image into multiple segments or regions and assigning each pixel a label corresponding to the semantic class it belongs to

- Applications: autonomous robots, medical imaging, satellite image analysis, surveillance systems

- Traditional Methods: low-level features, thresholding, edge detection, region growing, clustering

- Convolutional Neural Networks (CNNs):

- Extract hierarchical features

- Encoder: extracts high-level features using convolutional and pooling layers

- Decoder: reconstructs spatial dimensions using upsampling techniques

- Predicts labels in real-time

- Evaluation Metrics: pixel accuracy, mean pixel accuracy, precision, recall, F-score, mean intersection over union, frequency weighted intersection over union, dice coefficient

- Loss Functions: cross-entropy loss, dice loss, weighted loss functions, combination loss function, binary cross-entropy loss
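
Several of the evaluation metrics above can be sketched in a few lines. This is a minimal illustration that assumes the prediction and ground truth are flattened lists of integer class labels; the function names are hypothetical.

```python
def pixel_accuracy(pred, target):
    """Fraction of pixels whose predicted label equals the ground truth."""
    correct = sum(p == t for p, t in zip(pred, target))
    return correct / len(target)


def class_iou(pred, target, cls):
    """Intersection over union for one class, or None if the class is absent."""
    inter = sum(p == cls and t == cls for p, t in zip(pred, target))
    union = sum(p == cls or t == cls for p, t in zip(pred, target))
    return inter / union if union else None


def mean_iou(pred, target, classes):
    """Average of the per-class IoU over classes that occur at least once."""
    ious = [class_iou(pred, target, c) for c in classes]
    ious = [v for v in ious if v is not None]
    return sum(ious) / len(ious)


def dice_coefficient(pred, target, cls):
    """2 * |A and B| / (|A| + |B|) for one class, or None if absent."""
    inter = sum(p == cls and t == cls for p, t in zip(pred, target))
    size = sum(p == cls for p in pred) + sum(t == cls for t in target)
    return 2 * inter / size if size else None


pred = [0, 0, 1, 1]    # hypothetical 4-pixel prediction
target = [0, 1, 1, 1]  # hypothetical ground truth
print(pixel_accuracy(pred, target))       # 3 of 4 pixels match
print(mean_iou(pred, target, [0, 1]))     # mean of 1/2 and 2/3
print(dice_coefficient(pred, target, 1))  # 2 * 2 / (2 + 3)
```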

Object Detection

- Definition: detecting objects of certain classes and locating them using bounding boxes

- Challenges: illumination, object pose, clutter, occlusion, intra-class appearance, viewpoint

- General Workflow:

- Specify an object model

- Feature representations

- Generate hypotheses

- Score hypotheses

- Resolve detections

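- Non-max Suppression: resolves duplicate detections by keeping the highest-scoring bounding box and discarding other boxes that overlap it by more than an IoU threshold (low-confidence boxes are usually filtered out beforehand with a score threshold)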

- 2-Stage Framework: region proposal and classification stages

- 1-Stage Detectors: single feed-forward CNN that directly outputs bounding boxes and classification
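
Intersection over Union and non-max suppression can be illustrated with a small greedy sketch. It assumes boxes are given as `(x1, y1, x2, y2)` corner tuples and uses an assumed IoU threshold of 0.5; real detectors typically use optimized library routines instead.

```python
def iou(box_a, box_b):
    """Overlap of two boxes (x1, y1, x2, y2) as intersection area / union area."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: return indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep a box only if it does not overlap a kept box too strongly.
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep


# Hypothetical detections: the first two boxes cover the same object.
boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(non_max_suppression(boxes, scores))  # [0, 2]
```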

Object Tracking

- Definition: following an object in a sequence of images

- Problem Statement: estimate target state (position) over time

- Challenges: geometric change, illumination, occlusions, image quality, similar objects, non-linear motion

- Main Components: detection and loss detection, estimation, models

- Object Representation: point tracking, appearance tracking, silhouette tracking

- Motion Estimation: deterministic, probabilistic

- Deterministic Methods: quick convergence, efficient, but can get stuck in a local max or min

- Probabilistic Methods: can be used in real-time, consider history, entire probability densities, multi-model

Object Tracking and Modelling

- Control inputs: change of dynamic system

- Models: Object (Temporal dynamics), Sensors (characteristics), Context (background modelling)

- Inference: Bayesian inference, estimate state x given measurement data z, control data u, respective state transition and measurement probabilities

- Belief: estimate of true state

Recursive Bayes Filter Algorithm

- Prediction is corrected at each frame and model updated

- Prediction/Time update: Calculate prior belief based on the dynamic model

- Correction/Measurement update: Calculate posterior belief based on measurement model; update belief based on new measurement and incorporate measurement using Bayes theorem

Kalman Filters

- Regular Kalman filter: used for linear and regular dynamic models (e.g. airplanes)

- Extended Kalman filter: used for non-linear models
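
The prediction and correction cycle of the recursive Bayes filter can be made concrete with a one-dimensional linear Kalman filter. This is a minimal sketch assuming a scalar state, an additive control input, and known noise variances; all parameter names are illustrative.

```python
def kalman_step(mean, var, measurement, process_var, measurement_var, control=0.0):
    """One predict/correct cycle for a scalar state with Gaussian belief."""
    # Prediction / time update: prior belief from the (assumed) dynamic model.
    prior_mean = mean + control
    prior_var = var + process_var

    # Correction / measurement update: blend prior and measurement.
    gain = prior_var / (prior_var + measurement_var)  # Kalman gain
    post_mean = prior_mean + gain * (measurement - prior_mean)
    post_var = (1.0 - gain) * prior_var
    return post_mean, post_var


# Hypothetical track: belief starts at 0 with variance 1, measurements near 1.
belief = (0.0, 1.0)
for z in [1.0, 1.2, 0.9]:
    belief = kalman_step(*belief, measurement=z, process_var=0.01, measurement_var=1.0)
    print(belief)
```

With equal prior and measurement variance the gain is 0.5, so the posterior mean lands halfway between prediction and measurement and the variance is halved.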

Appearance Modelling

- Appearance of object needs to be modelled in a way that it is detectable in different frames

- Representation needs to be flexible enough to cope with different scales, poses, illuminations and occlusions

Object Approximation

- Segmentation

- Polygonal approximation

- Bounding ellipse/box

- Position only

- Goal: measure affinity in different frames

Appearance Tracking

- Lucas-Kanade tracker: minimizes sum of squared differences, uses gradient descent, assigns different weights to pixels

- Mean Shift: builds from spatial templates, colour histogram, aims to maximize a similarity coefficient between the target and candidate histograms (typically the Bhattacharyya coefficient) by iteratively following the mean shift

- Correlation filter: better computational speed, uses Fast Fourier transform to determine position of template patch, optimizes a distance metric

- Template of the target object is compared to different regions to find best match

- High response for target and low response for background

Feature Representation

- Features are extracted from image window

- Learning phase: learning how to recognize object, discriminative features are needed

- Detection phase: given object description, algorithm searches for same object in scene captured by following frames

- Update: descriptor is updated using most recent frame

- CNN can be used to extract features

DeepSORT

- Uses CNN to improve ability to track objects in the presence of occlusion

- Evolution of SORT: SORT first applies a Kalman filter in image space and then performs frame-by-frame data association using the Hungarian method

- DeepSORT replaces association metric with a more informed metric that combines motion and appearance information

- Limited number of features

- Hungarian algorithm: matching based on cost matrix that considers Mahalanobis distance for motion consistency and cosine distance for appearance similarity
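
The combined association cost can be sketched as a weighted sum of a motion distance and an appearance distance. This illustration makes several assumptions: the motion distances are supplied as a precomputed matrix (standing in for Mahalanobis distances), the weight of 0.5 is arbitrary, and a brute-force search over permutations stands in for the Hungarian algorithm, which finds the same minimum-cost assignment far more efficiently.

```python
import math
from itertools import permutations


def cosine_distance(a, b):
    """1 - cosine similarity of two non-zero appearance feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def combined_cost(motion_dist, track_feats, det_feats, weight=0.5):
    """cost[i][j] = weight * motion + (1 - weight) * appearance distance."""
    return [
        [
            weight * motion_dist[i][j]
            + (1.0 - weight) * cosine_distance(track_feats[i], det_feats[j])
            for j in range(len(det_feats))
        ]
        for i in range(len(track_feats))
    ]


def best_assignment(cost):
    """Brute-force minimum-cost matching for a small square cost matrix.

    Stand-in for the Hungarian algorithm; only practical for tiny inputs.
    """
    n = len(cost)
    best = min(
        permutations(range(n)),
        key=lambda perm: sum(cost[i][perm[i]] for i in range(n)),
    )
    return list(best)


# Hypothetical data: two tracks, two detections listed in swapped order.
track_feats = [(1.0, 0.0), (0.0, 1.0)]
det_feats = [(0.0, 1.0), (1.0, 0.0)]
motion_dist = [[2.0, 0.1], [0.1, 2.0]]
cost = combined_cost(motion_dist, track_feats, det_feats)
print(best_assignment(cost))  # track 0 -> detection 1, track 1 -> detection 0
```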

Structure from Motion Problem

- Figuring out how the camera moved through space and creating 3D structure of scene

- Visual odometry: estimating the position of a camera by looking at changes that are caused by motion

- Looking at different features and tracking them

3D Stereo Reconstruction

- Must be done in real-time

- Stereo cameras: can deliver scaled scenes, have two lenses which allows them to mimic the human eye by taking two slightly different pictures

- Mono camera: no scaled scenes

- Why is stereo reconstruction needed?

- Avoiding collision of robot with objects

- Better understand semantics of scene

- 3D mapping of environment

Pinhole Camera Model

- Mathematical relationship between 3D point and projection into image plane

- When you make a very small hole in a box, what is in the outside world will be projected into the back of the box
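
The projection relationship can be written out for an ideal pinhole camera. A minimal sketch, assuming the 3D point is expressed in the camera frame, the focal length is given in pixels, and there is no lens distortion; the parameter names are illustrative.

```python
def project_point(point_3d, focal_length, principal_point=(0.0, 0.0)):
    """Project a camera-frame 3D point (X, Y, Z) to pixel coordinates (u, v)."""
    x, y, z = point_3d
    if z <= 0:
        raise ValueError("point must lie in front of the camera (Z > 0)")
    # Perspective division: image coordinates shrink with distance Z.
    u = focal_length * x / z + principal_point[0]
    v = focal_length * y / z + principal_point[1]
    return u, v


# Hypothetical camera: f = 100 px, principal point at (320, 240).
print(project_point((1.0, 2.0, 4.0), 100.0, (320.0, 240.0)))  # (345.0, 290.0)
```

Every point along the same ray through the pinhole projects to the same pixel, which is why a single camera cannot recover scale without additional information.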

Epipolar Geometry

- Epipolar plane: center of two cameras plus a point in the real world

- Conjugate points: points in image plane the real-life point projects to in each camera

- Epipoles: projection of one camera into the other; all epipolar lines go through these points

- Epipolar lines: a point in one image corresponds to a line in the other (because it is seen from a different perspective)

- Epipolar plane is defined by P1, e1, and center of camera

- Epipolar constraint: a point in one image must lie on a line in the other image

Fundamental Matrix

- Describes the epipolar geometry of two views (3x3 matrix)

- The epipolar constraint is expressed mathematically using the fundamental matrix: it maps a point in one image to its epipolar line in the other
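
For corresponding points written in homogeneous coordinates, the constraint takes the form x2ᵀ F x1 = 0. The sketch below checks it for an assumed special case: a rectified stereo pair with purely horizontal camera displacement, where the fundamental matrix has the simple form shown (up to scale) and every epipolar line is horizontal.

```python
# Fundamental matrix of an idealized rectified stereo pair (assumed, up to scale).
F_RECTIFIED = [
    [0.0, 0.0, 0.0],
    [0.0, 0.0, -1.0],
    [0.0, 1.0, 0.0],
]


def epipolar_line(F, point):
    """Line (a, b, c) in image 2, with a*u + b*v + c = 0, for a point in image 1."""
    x = [point[0], point[1], 1.0]  # homogeneous coordinates
    return [sum(F[r][c] * x[c] for c in range(3)) for r in range(3)]


def epipolar_residual(F, point_1, point_2):
    """Value of x2^T F x1; zero when the pair satisfies the epipolar constraint."""
    a, b, c = epipolar_line(F, point_1)
    return point_2[0] * a + point_2[1] * b + c


print(epipolar_line(F_RECTIFIED, (10.0, 5.0)))                  # the horizontal line v = 5
print(epipolar_residual(F_RECTIFIED, (10.0, 5.0), (7.0, 5.0)))  # same row: zero
print(epipolar_residual(F_RECTIFIED, (10.0, 5.0), (7.0, 8.0)))  # different row: non-zero
```

In this rectified case the search for a match reduces to a scan along a single image row.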

Homogeneous Coordinates

- A point in n-dimensional space is represented by n + 1 coordinates

- Through triangulations, points in epipolar plane can be found

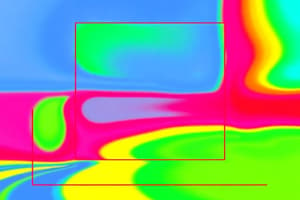

Disparity Map

- Apparent motion of objects between two stereo images

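- Depth can be recovered from disparity: for a rectified stereo pair, depth is inversely proportional to disparity (nearby objects have large disparity, distant objects small disparity)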

- 2D image where each pixel represents a disparity between corresponding points of the two images of a stereo pair
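
For a rectified stereo pair, the standard relation between depth and disparity is Z = f · B / d. A minimal sketch, assuming the focal length `f` is in pixels, the baseline `B` (distance between the two camera centers) is in meters, and the disparity `d` is in pixels; the numbers are hypothetical.

```python
def depth_from_disparity(disparity, focal_length, baseline):
    """Depth Z = f * B / d for one pixel of a rectified stereo pair."""
    if disparity <= 0:
        # Zero disparity corresponds to a point at infinity (or no valid match).
        return float("inf")
    return focal_length * baseline / disparity


def depth_map(disparity_map, focal_length, baseline):
    """Apply the relation to every pixel of a 2D disparity map (list of rows)."""
    return [
        [depth_from_disparity(d, focal_length, baseline) for d in row]
        for row in disparity_map
    ]


# Hypothetical rig: f = 800 px, baseline = 0.5 m.
print(depth_from_disparity(40.0, 800.0, 0.5))       # 10.0 m
print(depth_map([[40.0, 80.0, 0.0]], 800.0, 0.5))   # halving depth doubles disparity
```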

Image Matching

- Image matching algorithms identify and match different features such as edges, points, patterns of two or more images

- Finding the same point in several images allows the point's position in object space to be determined

- Feature matching: matching different features; used in image orientation

- Area-based matching: used in dense point cloud generation

Feature Matching

- Matching is mostly just done at salient points (corners, blobs,…)

- Identifying a corner: based on gradients (directional change in the intensity of the image)

- Identifying blobs: regions with positive or negative color value compared to neighborhood

Area-Based Matching

- Cross-correlation: determine position in search image with a high similarity with a reference pattern

- Repeated for all pixels to get dense reconstruction

- Problem with correlation: the maximum correlation is not necessarily the correct match because of noise and occlusions

- Solution: also consider neighboring points
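
Cross-correlation matching can be illustrated on a single scanline. The sketch below uses zero-mean normalized cross-correlation, one common variant, and slides a reference pattern along a search row; the intensity values are hypothetical.

```python
import math


def ncc(patch_a, patch_b):
    """Zero-mean normalized cross-correlation of two equal-length patches."""
    mean_a = sum(patch_a) / len(patch_a)
    mean_b = sum(patch_b) / len(patch_b)
    num = sum((a - mean_a) * (b - mean_b) for a, b in zip(patch_a, patch_b))
    den = math.sqrt(
        sum((a - mean_a) ** 2 for a in patch_a)
        * sum((b - mean_b) ** 2 for b in patch_b)
    )
    # A flat patch has no texture to correlate with; treat it as no match.
    return num / den if den > 0 else 0.0


def best_match(reference, search_row):
    """Offset in search_row with the highest NCC score, and that score."""
    width = len(reference)
    scores = [
        ncc(reference, search_row[i:i + width])
        for i in range(len(search_row) - width + 1)
    ]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


reference = [1.0, 5.0, 2.0]
search_row = [0.0, 0.0, 1.0, 5.0, 2.0, 0.0, 0.0]
print(best_match(reference, search_row))  # best offset is 2
```

Repeating this search for every pixel gives a dense result, but as noted above the highest score can still be a wrong match, so practical methods add constraints from neighboring points.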

Deep Learning and 3D Reconstructions

- Replacing different parts with neural networks, such as cross-correlation with a CNN

- Deep feature mapping: 2 image patches that are converted to vectors to be compared

- Similarity can be defined by traditional method (feature mapping) or a fully connected decision network

- Variants have been developed that can consider global context without increasing the computational cost

- Sparse reconstruction: each correctly matched pair of points leads to a single point in 3D
